Build an Edge Data Center with High-performance Switches and Routers

written by Asterfusion


Ⅰ. What Is an Edge Data Center?

Simply put, an edge data center is a facility located close to the users and devices it serves. Unlike a traditional cloud data center, it is deployed at the network edge as one of many distributed resources. It is usually much smaller; sometimes it is just a single rack.

Why do edge data centers exist? The main driver is data growth. With 5G, IoT devices, and the rise of AI and machine learning, data volumes are growing rapidly. Sending all of that data to the core cloud is not feasible: bandwidth is limited, and the long round trip adds too much latency.

Edge data centers meet the need for speed. Because they sit close to the data source, they respond quickly and can process time-sensitive data with latency on the order of milliseconds. Distributed everywhere, like capillaries, they act as local connection points. They do not handle everything: they serve what they can locally, for example through caching, and send only key analytics results back to headquarters. This enables real-time applications such as autonomous driving and smart manufacturing.
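Distance alone explains much of the latency gap. As a rough sketch, assume light travels through optical fiber at about 200,000 km/s (roughly two-thirds of its speed in vacuum), so each kilometer adds about 5 µs each way. The distances below are hypothetical, and the estimate ignores queuing, serialization, and processing delays.

```python
# Rough round-trip propagation delay over optical fiber.
# Assumption: light travels through fiber at about 200,000 km/s (~2/3 of c).
FIBER_SPEED_KM_PER_S = 200_000


def fiber_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay in milliseconds.

    Counts only time in flight: queuing, serialization, and processing
    delays are ignored, and the fiber path is assumed to be exactly
    distance_km long.
    """
    one_way_s = distance_km / FIBER_SPEED_KM_PER_S
    return 2 * one_way_s * 1000


# Hypothetical distances: a nearby edge site vs. a distant cloud region.
for label, km in [("edge site, 20 km", 20), ("cloud region, 1,500 km", 1500)]:
    print(f"{label}: {fiber_rtt_ms(km):.2f} ms")
```

Propagation alone costs about 0.2 ms per round trip to the nearby site and about 15 ms to the distant one, before any queuing or processing is added.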

Asterfusion switches, with port speeds ranging from 10G to 800G, together with Asterfusion routers, can help you build a high-performance edge data center.

Ⅱ. Edge Data Center and Core Data Center: Interaction and Collaboration

Fundamentally, edge data centers are not isolated. They sit between user and IoT networks on one side and centralized data centers on the other.

How do edge and centralized data centers interact? Edge data centers are close to the requesting devices, so they handle requests, such as those for JavaScript or HTML files, near the source. Data that can be handled locally, such as cached static content or simple AI inference, is processed and returned directly, which shortens response time.

If the edge data center cannot process the data, or if the data needs long-term storage, it is forwarded to the centralized data center, which provides more cloud resources and centralized processing and also interconnects multiple edge data centers. Because much of the traffic is handled at the edge, this division of labor relieves pressure on the core cloud and saves bandwidth costs.
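The split between local handling and forwarding can be sketched as a toy request handler. It assumes that static files, identified here only by extension, are safe to cache at the edge and that everything else is forwarded to the core. `EdgeNode`, `fetch_from_core`, and the suffix list are hypothetical names for illustration, not part of any Asterfusion product.

```python
from collections import OrderedDict
from typing import Callable


class EdgeNode:
    """Toy edge node: serves static files from a local LRU cache and
    forwards everything else (and cache misses) to the core data center."""

    # Assumption: these extensions mark content that is safe to cache.
    STATIC_SUFFIXES = (".js", ".html", ".css")

    def __init__(self, fetch_from_core: Callable[[str], str], capacity: int = 128):
        self.fetch_from_core = fetch_from_core  # hypothetical upstream call
        self.capacity = capacity
        self.cache = OrderedDict()  # path -> body, least recently used first
        self.core_requests = 0

    def handle(self, path: str) -> str:
        if path.endswith(self.STATIC_SUFFIXES):
            if path in self.cache:
                self.cache.move_to_end(path)  # mark as most recently used
                return self.cache[path]
            body = self._forward(path)
            self.cache[path] = body
            if len(self.cache) > self.capacity:
                self.cache.popitem(last=False)  # evict least recently used
            return body
        # Dynamic requests and long-term storage go to the core.
        return self._forward(path)

    def _forward(self, path: str) -> str:
        self.core_requests += 1
        return self.fetch_from_core(path)


node = EdgeNode(fetch_from_core=lambda p: f"<content of {p}>")
node.handle("/app.js")      # miss: fetched from the core, then cached
node.handle("/app.js")      # hit: served locally
node.handle("/api/orders")  # dynamic: forwarded to the core
print(node.core_requests)   # 2
```

Only the first request for a static file reaches the core; repeats are served locally, which is where the bandwidth savings come from.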

For example, during HD live streaming or factory robot operations, data is first processed in a local micro data center (MDC). Data is consumed near the source. This reduces traffic and improves security, as sensitive data does not traverse the entire network. Ultimately, collected data is converted into insights, improving user experience and IT reliability.

Ⅲ. Deployment Preparation: Pitfall Guide

Planning to deploy an edge data center? Preparation is critical.

Start with strategic planning. Clarify the business goal, such as reducing latency or saving bandwidth, because it determines your approach. Site selection is key: ensure reliable power, network connectivity, and proximity to users, and consider the climate, since cooling is a major issue. The design should be flexible and modular to allow future expansion. Both physical and network security measures are essential. Finally, check compliance requirements.

In practice, there are many pitfalls:

- Underestimating complexity. Edge nodes are numerous and their systems diverse, which makes management challenging.

- Ignoring scalability. Problems appear when the business grows, and retrofitting is costly.

- Neglecting latency. Latency is the core concern, and poor network design defeats the purpose of the edge.

- Underrating security. Distributed environments are more vulnerable.

- Sacrificing cooling or power to save costs. Both are vital for stability.

- Skipping testing. Inadequate pre-launch testing leads to problems later.

- Choosing unreliable vendors. This makes maintenance problems unpredictable.

Ⅳ. How Asterfusion Supports Edge Deployment

For edge data centers, you can use integrated rack solutions from vendors or deploy your own. Consider the following:

Physical side: Cabinets must be quiet and dustproof, with online UPS backup.

Server side: Servers should be short-depth models that fit shallow racks, and they should support IPMI remote management; otherwise, every failure requires onsite intervention.

Network side: Switches need uplinks of at least 10 Gbps. The network OS must support automation, such as ZTP, for large-scale deployments, and protocols such as BGP and EVPN-VXLAN are essential to the network design.
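At its core, ZTP lets a freshly racked switch identify itself (typically by serial number or MAC address) to a provisioning server and download its configuration without manual input. A minimal sketch of the server-side lookup, assuming a hypothetical inventory and a made-up config template; the template is not AsterNOS syntax.

```python
from string import Template

# Hypothetical inventory: serial number -> per-device parameters.
INVENTORY = {
    "SN-LEAF-01": {"hostname": "edge-leaf-01", "loopback": "10.0.0.11", "asn": 65011},
    "SN-LEAF-02": {"hostname": "edge-leaf-02", "loopback": "10.0.0.12", "asn": 65012},
}

# Hypothetical config template; this is NOT AsterNOS CLI or config syntax.
LEAF_TEMPLATE = Template(
    "hostname $hostname\n"
    "interface loopback0 ip $loopback/32\n"
    "router bgp $asn\n"
)


def render_ztp_config(serial: str) -> str:
    """Return the startup config a ZTP server would hand to this device.

    Unknown serials raise KeyError, so unregistered hardware is never
    provisioned silently.
    """
    return LEAF_TEMPLATE.substitute(INVENTORY[serial])


print(render_ztp_config("SN-LEAF-01"))
```

Keying on the serial number means one template serves every leaf; only the inventory entry changes per device.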

The following points explain why Asterfusion's high-performance data center switches are well suited to edge data center scenarios.

Hardware: Latency as low as 560 ns, with nanosecond-level forwarding, suits latency-sensitive workloads such as AI inference. The compact 1U form factor offers high-density 10G to 800G ports at low power, easing space constraints. The switches use Marvell Teralynx and Prestera (Falcon) chips, optimized for cloud-native and AI inference workloads, to handle high-throughput edge traffic.
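To put the 560 ns figure in context, a back-of-the-envelope sketch: a cross-rack path (leaf, spine, leaf) crosses three switches, and the time to serialize a full-size frame onto the wire is of the same order. The sketch assumes the quoted latency applies per switch and ignores queuing and cable delay.

```python
# Assumption: the quoted 560 ns is per switch, and a cross-rack path
# (leaf -> spine -> leaf) traverses three switches. Queuing and cable
# delay are ignored.
SWITCH_LATENCY_NS = 560


def fabric_latency_ns(switch_hops: int) -> int:
    """Forwarding latency summed over every switch on the path."""
    return switch_hops * SWITCH_LATENCY_NS


def serialization_ns(frame_bytes: int, link_gbps: float) -> float:
    """Time to put one frame on the wire; 1 Gbit/s moves 1 bit per ns."""
    return frame_bytes * 8 / link_gbps


print(f"leaf-spine-leaf forwarding: {fabric_latency_ns(3)} ns")
for gbps in (10, 25, 100):
    print(f"1500-byte frame at {gbps}G: {serialization_ns(1500, gbps):.0f} ns")
```

At 10G, merely serializing a 1,500-byte frame (1,200 ns) takes longer than the per-switch forwarding figure, which is one reason link speed matters as much as switch latency.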

Software: The switches come pre-installed with AsterNOS, Asterfusion's enterprise SONiC distribution. RoCEv2 support on a lossless network avoids packet loss for RDMA traffic, which is critical for edge computing. EVPN-VXLAN comes standard, enabling large Layer 2 interconnects and seamless workload migration across regions. The spine-leaf architecture uses ECMP for multi-link load balancing and network resilience, and MC-LAG provides highly reliable server connections.
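EVPN-VXLAN stretches Layer 2 segments across a routed fabric by wrapping each Ethernet frame in UDP with a small VXLAN header that carries a 24-bit VXLAN Network Identifier (VNI). As an illustration of the data-plane format only (the BGP EVPN control plane is not shown), a sketch that builds and parses that header as laid out in RFC 7348:

```python
import struct

VXLAN_UDP_PORT = 4789        # IANA-assigned VXLAN destination port
VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header defined in RFC 7348.

    Layout: flags (8 bits) | reserved (24) | VNI (24) | reserved (8).
    """
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # First word: flags in the top byte, then 24 reserved zero bits.
    # Second word: VNI in the top 24 bits, then 8 reserved zero bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from an 8-byte VXLAN header."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("I flag not set; VNI is not valid")
    return vni_word >> 8


print(vxlan_header(10010).hex())  # 0800000000271a00
```

The 24-bit VNI allows about 16.7 million isolated segments, compared with 4,094 usable 802.1Q VLAN IDs, which is what makes VXLAN practical for multi-tenant designs.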

BGP multi-homing and ECMP: Edge links may be less stable than those at core sites. BGP multi-homing and equal-cost multipath (ECMP) provide millisecond-level failover between links as well as load balancing, keeping edge services online.
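ECMP typically selects a path per flow by hashing header fields such as the 5-tuple, so packets of one flow stay in order while different flows spread across links. A minimal sketch, assuming SHA-256 as a stand-in for the switch's hardware hash and treating a failed link as simply removed from the next-hop set once BGP withdraws its routes; how quickly the failure is detected is not modeled. The uplink names are hypothetical.

```python
import hashlib
from typing import Sequence, Tuple

# A flow is identified by its 5-tuple: src IP, dst IP, protocol, src port, dst port.
Flow = Tuple[str, str, int, int, int]


def pick_next_hop(flow: Flow, next_hops: Sequence[str]) -> str:
    """Pick one equal-cost next hop per flow by hashing the 5-tuple.

    Every packet of a flow maps to the same path (no reordering), while
    different flows spread across the available paths. SHA-256 stands in
    for the switch's hardware hash function.
    """
    if not next_hops:
        raise RuntimeError("no usable next hop")
    key = "|".join(map(str, flow)).encode()
    digest = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return next_hops[digest % len(next_hops)]


uplinks = ["uplink-a", "uplink-b"]  # hypothetical BGP next hops
flow = ("10.1.1.10", "203.0.113.5", 6, 51514, 443)
first = pick_next_hop(flow, uplinks)

# Simulate a link failure: BGP withdraws that path, so the flow is rehashed
# over the surviving next hops.
survivors = [hop for hop in uplinks if hop != first]
print(first, "->", pick_next_hop(flow, survivors))
```

Plain modulo hashing can also move flows that were on surviving links whenever the path count changes; resilient hashing schemes exist to limit that, but they are beyond this sketch.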

Management and operations: These are crucial at remote edge sites. Asterfusion supports zero-touch provisioning (ZTP): plug in and connect the switch, and its configuration is pulled automatically, with no expert needed onsite. Streaming telemetry reports traffic and port status in real time for remote monitoring, enabling unattended operation. Open APIs, such as a RESTful API, integrate easily with Kubernetes (K8s) platforms, and Ansible is supported, making this solution well suited to the edge.
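Streaming telemetry pushes counters such as per-port octet totals at a fixed interval, and turning two consecutive samples into a utilization figure is simple arithmetic. A sketch, assuming 64-bit interface octet counters; the sample values and the 80% alert threshold are hypothetical, and no Asterfusion telemetry or REST API calls are shown.

```python
def utilization_pct(prev_octets: int, curr_octets: int,
                    interval_s: float, speed_gbps: float) -> float:
    """Average link utilization (%) between two octet-counter samples.

    Assumes a 64-bit counter that neither wrapped nor reset in between.
    """
    if curr_octets < prev_octets:
        raise ValueError("counter went backwards: reset or wrap between samples")
    bits = (curr_octets - prev_octets) * 8
    return 100 * bits / (interval_s * speed_gbps * 1e9)


ALERT_THRESHOLD_PCT = 80.0  # hypothetical alerting policy

# Hypothetical samples from a 25G port, pushed 10 s apart.
util = utilization_pct(prev_octets=0, curr_octets=3_125_000_000,
                       interval_s=10, speed_gbps=25)
print(f"{util:.1f}% used; alert: {util > ALERT_THRESHOLD_PCT}")
```

Computing rates on the collector side keeps the switch's job simple: it only streams raw counters, and thresholds can change without touching the device.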

Ⅴ. Typical Topology and Technical Architecture

Finally, let’s take a look at the typical topology.

Typical edge topologies are streamlined. Depending on scale, they are deployed as a single pod or as a layered multi-pod architecture.

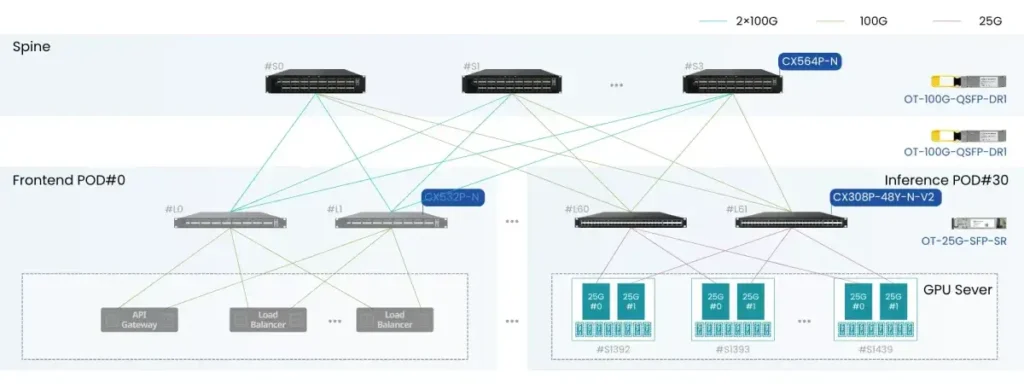

Leaf switches connect servers, storage, and IoT gateways. As a ToR switch, the Asterfusion CX308P-48Y-N-V2 handles heavy east-west traffic and forwards data quickly to the spine switches for local processing.
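When sizing the leaf layer, a common check is the oversubscription ratio: server-facing bandwidth divided by spine-facing bandwidth. The sketch assumes a leaf with 48 × 25G server ports and 8 × 100G uplinks, which is an assumption based on the CX308P-48Y-N-V2 model name; confirm the actual port counts against the datasheet.

```python
def oversubscription(down_ports: int, down_gbps: float,
                     up_ports: int, up_gbps: float) -> float:
    """Server-facing bandwidth divided by spine-facing bandwidth.

    1.0 means non-blocking; 3.0 means 3:1 oversubscribed.
    """
    return (down_ports * down_gbps) / (up_ports * up_gbps)


# Assumed leaf layout: 48 x 25G server ports, 8 x 100G uplinks.
print(f"all 8 uplinks: {oversubscription(48, 25, 8, 100):.2f}:1")  # 1200G / 800G
print(f"4 uplinks:     {oversubscription(48, 25, 4, 100):.2f}:1")  # 1200G / 400G
```

Using every uplink keeps the ratio at 1.5:1; halving the uplinks doubles it, which matters for the heavy east-west traffic described above.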

The spine layer uses the CX564P-N, CX532P-N, and other 100G devices, as well as 200G, 400G, and 800G models. Through this layer, the edge data center connects to external networks or egress points. These switches offer high bandwidth and low latency, ensuring fast processing of edge IoT data.
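How far a single pod can grow is set mainly by spine port count. A sketch of the arithmetic under illustrative assumptions: 32-port spines (as the CX532P-N model name suggests; verify against the datasheet), leaves with 4 uplinks in use and 48 server ports, exactly one link between each leaf-spine pair, and 2 ports per spine reserved for egress.

```python
def fabric_capacity(spine_ports: int, leaf_uplinks: int,
                    leaf_server_ports: int, reserved_spine_ports: int = 0) -> dict:
    """Size a two-tier spine-leaf pod.

    Assumes one link between every leaf-spine pair, so the number of
    spines equals the uplinks per leaf and each leaf uses one port on
    every spine. reserved_spine_ports are kept free for egress.
    """
    max_leaves = spine_ports - reserved_spine_ports
    return {
        "spines": leaf_uplinks,
        "max_leaves": max_leaves,
        "max_server_ports": max_leaves * leaf_server_ports,
    }


# Assumed: 32-port spines, 4 leaf uplinks in use, 48 server ports per leaf,
# and 2 ports per spine reserved for egress.
print(fabric_capacity(32, 4, 48, reserved_spine_ports=2))
```

Beyond that point, the pod is full and growth moves to the multi-pod layered design mentioned above.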

Egress devices: The ET2508 Open Gateway/Router connects traffic to centralized data centers. It establishes IPsec encrypted tunnels with peer routers, securing sensitive data sent to the cloud.

Optical modules: Select multimode for short distances, single-mode for long distances.
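The short-versus-long rule can be made concrete with a distance threshold. A sketch, assuming OM4 multimode cabling, over which 25GBASE-SR and 100GBASE-SR4 are specified for 100 m; other speeds, fiber grades, and extended-reach optics would need their own limits from the module datasheets.

```python
# Assumed reach limits for multimode SR optics over OM4 fiber, in meters
# (25GBASE-SR and 100GBASE-SR4 are both specified for 100 m on OM4).
SR_REACH_OM4_M = {25: 100, 100: 100}


def choose_fiber(speed_gbps: int, distance_m: float) -> str:
    """Pick multimode for runs within SR reach, single-mode otherwise."""
    if speed_gbps not in SR_REACH_OM4_M:
        raise ValueError(f"no reach data for {speed_gbps}G in this sketch")
    if distance_m <= SR_REACH_OM4_M[speed_gbps]:
        return "multimode"
    return "single-mode"


print(choose_fiber(100, 30))   # in-row link between racks
print(choose_fiber(100, 500))  # run to another building
```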

This architecture is highly scalable. Devices are easy to add, supporting rapid iteration of edge services. For ultra-small nodes, a single high-performance switch can handle all tasks.

Technically, the design uses a spine-leaf architecture. Servers are dual-homed to two switches with MC-LAG, and Layer 3 ECMP provides redundant paths between layers, ensuring high reliability at multiple levels. The fabric runs BGP EVPN-VXLAN, with EBGP neighbors established network-wide and VXLAN used for multi-tenant expansion.
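Running EBGP network-wide requires an ASN plan. One widely used convention, described in RFC 7938, gives all spines in a pod a shared ASN and each leaf its own, drawn from the private ASN range. A sketch that generates the resulting neighbor list; device names are hypothetical, and the output is a plan, not AsterNOS configuration.

```python
from itertools import product

PRIVATE_ASN_START = 64512  # start of the 16-bit private ASN range (RFC 6996)
PRIVATE_ASN_END = 65534


def ebgp_sessions(spines: list, leaves: list) -> list:
    """Plan eBGP sessions for a spine-leaf fabric.

    Assumed scheme: all spines share one ASN, each leaf gets its own
    ASN, and every leaf peers with every spine. Each session is
    (leaf, leaf_asn, spine, spine_asn).
    """
    spine_asn = PRIVATE_ASN_START
    leaf_asn = {leaf: PRIVATE_ASN_START + 1 + i for i, leaf in enumerate(leaves)}
    if max(leaf_asn.values(), default=spine_asn) > PRIVATE_ASN_END:
        raise ValueError("ran out of 16-bit private ASNs")
    return [
        (leaf, leaf_asn[leaf], spine, spine_asn)
        for leaf, spine in product(leaves, spines)
    ]


for session in ebgp_sessions(["spine1", "spine2"], ["leaf1", "leaf2", "leaf3"]):
    print(session)
```

Sharing one spine ASN means a route that a leaf re-advertises from one spine to another is rejected by ordinary AS-path loop detection, so no extra filtering is needed to prevent spine-leaf-spine paths.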

Ⅵ. Conclusion

Deploying edge data centers significantly speeds up data processing by handling data close to its source. With rapid IoT and 5G growth, edge data centers are key to data processing and storage, driving the adoption of smart applications and enhancing network performance.

Looking ahead, innovation in intelligence, scalability, and sustainability will create more opportunities for enterprises.

If you need to optimize enterprise network performance, edge data center deployment is ideal for improving efficiency and user experience.

Asterfusion offers customized high-performance edge data center solutions, covering design, implementation, and ongoing technical support.

Contact Us!

- To request a proposal, send an email to bd@cloudswit.ch

- To receive timely and relevant information from Asterfusion, sign up at the AsterNOS Community Portal

- To submit a case, visit the Support Portal

- To find user manuals for a specific command or scenario, access the AsterNOS Documentation

- To find a product or product family, visit Asterfusion-cloudswit.ch