RoCEv2 AI Solution with NVIDIA DGX SuperPOD

Overview

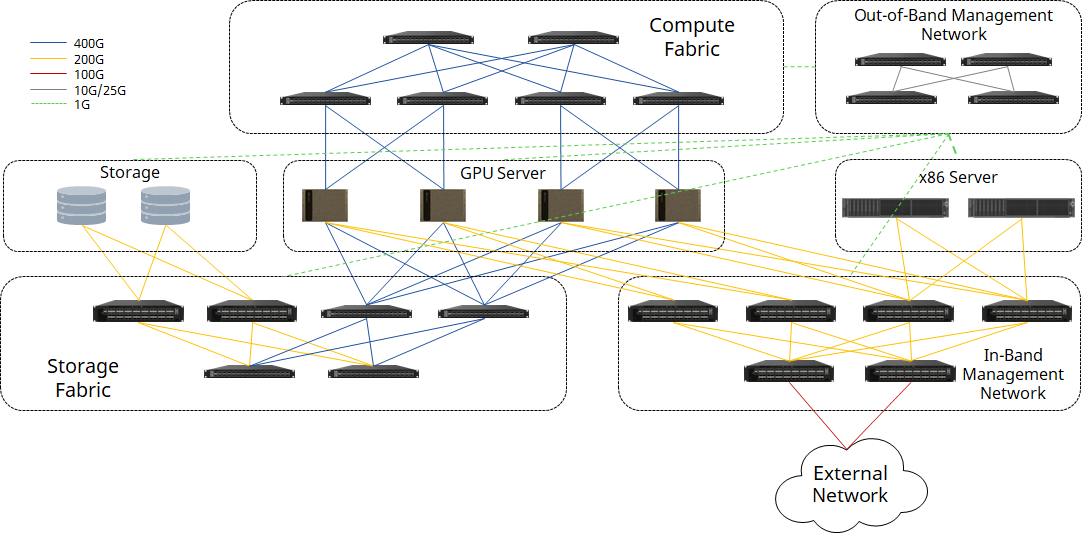

The NVIDIA DGX SuperPOD™ with NVIDIA DGX™ H100 systems is the cutting-edge data center architecture for AI. As an alternative to InfiniBand switches, Asterfusion offers RoCEv2-enabled 100G-800G Ethernet switches for building a DGX H100 based SuperPOD, covering the compute fabric, the storage fabric, and the in-band and out-of-band management networks.

Compute Fabric

For a research project or PoC, starting with a small-scale cluster is a good choice. Fewer than 4 DGX H100 nodes can be connected to a single 32x400G switch, using QSFP-DD transceivers on the switch ports and OSFP transceivers on the ConnectX-7 NICs of each GPU server, connected by MPO-APC cables.

When the number of GPU servers increases to 8, a 2+4 Clos fabric (2 spine switches and 4 leaf switches) can be applied. Each leaf switch connects to 2 of the 8 ports of each GPU server, achieving rail-optimized connectivity that improves the efficiency of GPU resources. Spine and leaf switches can be connected through QSFP-DD transceivers with fiber, or with QSFP-DD AOC/DAC/AEC cables.
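The port-to-leaf assignment described above can be sketched in a few lines. This is a minimal illustration, not Asterfusion's cabling plan: the assumption that consecutive port pairs (0-1, 2-3, and so on) land on the same leaf, and the names `leaf_for_port` and `build_wiring`, are hypothetical.

```python
from collections import Counter

NUM_SERVERS = 8        # GPU servers in the 2+4 fabric
PORTS_PER_SERVER = 8   # 400G compute ports per DGX H100
NUM_LEAVES = 4         # leaf switches in the 2+4 fabric


def leaf_for_port(port: int) -> int:
    """Map a server's compute port index (0-7) to a leaf index (0-3).

    Assumption: consecutive port pairs share a leaf, so every server
    contributes exactly 2 ports to each leaf.
    """
    ports_per_leaf = PORTS_PER_SERVER // NUM_LEAVES
    return port // ports_per_leaf


def build_wiring() -> dict:
    """Return {(server, port): leaf} for the whole fabric."""
    return {
        (server, port): leaf_for_port(port)
        for server in range(NUM_SERVERS)
        for port in range(PORTS_PER_SERVER)
    }


wiring = build_wiring()
downlinks_per_leaf = Counter(wiring.values())
print(dict(downlinks_per_leaf))  # {0: 16, 1: 16, 2: 16, 3: 16}
```

Each leaf ends up with 16 server-facing links, which leaves the other 16 ports of a 32x400G leaf for the two spines.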

A single CX864E-N (a 64x800G switch based on the 51.2Tbps Marvell Teralynx 10, coming in 2024Q3) can connect up to 16 GPU servers.
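The 16-server figure follows from simple port arithmetic. The sketch below assumes each 800G switch port is split into two 400G links toward the ConnectX-7 NICs and that every server uses 8 such links; the function name `max_servers_per_switch` is hypothetical.

```python
def max_servers_per_switch(
    switch_ports: int,
    port_speed_gbps: int,
    nic_speed_gbps: int = 400,
    nic_ports_per_server: int = 8,
) -> int:
    """Upper bound on servers one switch can hold, by port count alone.

    Assumption: a switch port can be broken out into as many NIC-speed
    links as fit into its bandwidth (for example 800G -> 2 x 400G).
    """
    links_per_port = port_speed_gbps // nic_speed_gbps
    total_links = switch_ports * links_per_port
    return total_links // nic_ports_per_server


print(max_servers_per_switch(64, 800))  # 16
```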

A scalable compute fabric is built from modular blocks, which allow rapid deployment at multiple scales. Each block contains a group of GPU servers and 400G switches (16 DGX H100 systems with 8 CX732Q-N switches in this example). The block is designed to be rail-aligned and non-oversubscribed to ensure the performance of the compute fabric.
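To make "rail-aligned and non-oversubscribed" concrete, the following sketch checks both properties for the example block. It assumes the CX732Q-N is used as a 32x400G leaf and that every leaf port not facing a server is an uplink; the `Block` class and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    servers: int = 16              # DGX H100 systems per block
    nic_ports_per_server: int = 8  # 400G compute ports (rails) per server
    leaves: int = 8                # CX732Q-N switches per block
    ports_per_leaf: int = 32       # assumption: 32 x 400G per leaf

    @property
    def downlinks_per_leaf(self) -> int:
        return self.servers * self.nic_ports_per_server // self.leaves

    @property
    def uplinks_per_leaf(self) -> int:
        # Assumption: all remaining leaf ports face the spine layer.
        return self.ports_per_leaf - self.downlinks_per_leaf

    @property
    def oversubscription(self) -> float:
        return self.downlinks_per_leaf / self.uplinks_per_leaf

    def rail_aligned(self) -> bool:
        # One leaf per rail: port i of every server lands on leaf i.
        return self.leaves == self.nic_ports_per_server


block = Block()
print(block.downlinks_per_leaf, block.uplinks_per_leaf, block.oversubscription)
# 16 16 1.0
print(block.rail_aligned())  # True
```

A ratio of 1.0 means the server-facing and spine-facing bandwidth of each leaf are equal, which is what non-oversubscribed refers to here.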

A typical 64-node compute fabric design uses a Leaf-Spine architecture to hold 4 blocks.

To scale the compute fabric further, higher-throughput switches can replace the Spine switches. Using the CX864E-N as the Spine expands the network to a maximum of 16 blocks, or 256 nodes.
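The 4-block and 16-block limits can be reproduced with a short calculation. This is a sketch under stated assumptions, not the vendor's sizing tool: each leaf is assumed to have 16 uplinks with exactly one 400G link to every spine, and a CX864E-N spine is assumed to expose 128 x 400G links by splitting each 800G port in two. The spine and link counts it prints are derived from these assumptions, not taken from Table 1.

```python
LEAVES_PER_BLOCK = 8    # CX732Q-N switches per block
NODES_PER_BLOCK = 16    # DGX H100 systems per block
UPLINKS_PER_LEAF = 16   # assumption: half of a 32 x 400G leaf faces the spines


def max_blocks(spine_400g_ports: int) -> int:
    """Largest number of blocks a two-tier fabric can hold.

    Assumption: every leaf has one link to every spine, so each leaf
    consumes one port on each spine.
    """
    return spine_400g_ports // LEAVES_PER_BLOCK


def fabric_size(blocks: int, spine_400g_ports: int) -> dict:
    """Derive node, switch, and link counts for a given number of blocks."""
    leaves = blocks * LEAVES_PER_BLOCK
    if leaves > spine_400g_ports:
        raise ValueError("not enough spine ports for this many leaves")
    return {
        "nodes": blocks * NODES_PER_BLOCK,
        "leaves": leaves,
        "spines": UPLINKS_PER_LEAF,  # one spine per leaf uplink
        "leaf_spine_links": leaves * UPLINKS_PER_LEAF,
    }


print(max_blocks(32), fabric_size(4, 32))
# 4 {'nodes': 64, 'leaves': 32, 'spines': 16, 'leaf_spine_links': 512}
print(max_blocks(128), fabric_size(16, 128))
# 16 {'nodes': 256, 'leaves': 128, 'spines': 16, 'leaf_spine_links': 2048}
```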

Table 1 shows the number of switches and cables required for the compute fabric of different scales.

Storage Fabric

Storage specifications vary from vendor to vendor. In this case, we assume each storage node provides 45GB/s read performance through 4x200G interfaces.

Here we assume each DGX H100 node requires 8GB/s read performance (see Tables 2 and 3, from NVIDIA documentation). A total of 256GB/s, which corresponds to 32 nodes at 8GB/s each, requires 6 storage nodes in this case (256 / 45, rounded up).
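The storage sizing above is a ceiling division, sketched below. The count of 32 compute nodes is inferred from 256GB/s divided by 8GB/s per node rather than stated in the text, and the function names are hypothetical. The second helper checks that the 4x200G interfaces of a storage node can carry its assumed 45GB/s at line rate, ignoring protocol overhead.

```python
import math


def storage_nodes_needed(
    compute_nodes: int,
    read_gbytes_per_compute_node: float,
    read_gbytes_per_storage_node: float,
) -> tuple:
    """Return (total read demand in GB/s, storage nodes required)."""
    total = compute_nodes * read_gbytes_per_compute_node
    return total, math.ceil(total / read_gbytes_per_storage_node)


def link_capacity_gbytes(num_links: int, link_gbps: int) -> float:
    """Aggregate line rate in GB/s (8 bits per byte, no protocol overhead)."""
    return num_links * link_gbps / 8


total, nodes = storage_nodes_needed(32, 8, 45)
print(total, nodes)                   # 256 6
print(link_capacity_gbytes(4, 200))   # 100.0
```

With 100GB/s of line rate per storage node against 45GB/s of assumed read throughput, the network interfaces are not the limiting factor in this example.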

MC-LAG is usually used to achieve redundancy. Here we recommend EVPN Multi-Homing instead, to reduce switch port requirements.

In-Band Management Network

The in-band network connects all the compute nodes and management nodes (general-purpose x86 servers), and provides connectivity for in-cluster services and for services outside the cluster.

Out-of-Band Management Network

Asterfusion also offers 1G/10G/25G enterprise switches for the OOB network. These switches also support a Leaf-Spine architecture, allowing all components inside the SuperPOD to be managed.