Hardware-Offloaded BFD on SONiC: Robust Link Failure Detection

written by Asterfusion

Introduction

As a network professional, you’re likely familiar with the “heartbeat” mechanisms used by routing protocols—OSPF sends Hello packets every 10 seconds by default, while BGP relies on keepalive messages to maintain neighbor relationships.

But if you think about it, is this “second-level” detection really sufficient for all scenarios?

BFD (Bidirectional Forwarding Detection) was created to fill this gap. It runs independently, sending lightweight probe packets for rapid, cross-protocol fault detection.

In large-scale networks with many links and sessions, traditional CPU-driven BFD can struggle. Hardware offloaded BFD on SONiC solves this by moving detection to the ASIC. Asterfusion’s Enterprise SONiC platforms adopt this design to deliver carrier-grade fault detection. How does it work, and why is it faster? Let’s take a look.

Ⅰ. What Is BFD

Before exploring hardware offloaded BFD, it’s helpful to understand BFD itself. Defined in RFC 5880, BFD is a lightweight protocol designed for fast fault detection, offering millisecond-level monitoring to quickly spot link, interface, or session failures.

BFD's operation is straightforward. When two network devices (such as routers or switches) need to monitor their connectivity, they first establish a BFD session. Once the session is active, both sides periodically send BFD control packets at a negotiated interval, typically measured in milliseconds (e.g., 10ms, 20ms). Each device monitors the packets received from its peer and uses a “detection multiplier” (e.g., 3 or 5) to set the failure threshold, known as the detection time. If no packet arrives within the interval multiplied by the detection multiplier, the connection (link or session) is considered down. The failure is then immediately reported to any dependent routing protocol (such as OSPF or BGP) or service module, triggering rapid route convergence or failover.
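The threshold described above can be written as a small calculation. The sketch below follows the asynchronous-mode rule in RFC 5880 (the peer's detect multiplier times the larger of the local Required Min RX Interval and the peer's Desired Min TX Interval). The function and parameter names are illustrative, not taken from any particular implementation.

```python
def detection_time_ms(remote_detect_mult: int,
                      local_required_min_rx_ms: int,
                      remote_desired_min_tx_ms: int) -> int:
    """Detection time as seen by the local system (asynchronous mode).

    The peer cannot send faster than we are willing to receive, so the
    agreed interval is the slower (larger) of the two advertised values.
    """
    agreed_interval_ms = max(local_required_min_rx_ms, remote_desired_min_tx_ms)
    return remote_detect_mult * agreed_interval_ms


# Both sides advertise 10ms, multiplier 3: failure declared after 30ms of silence.
print(detection_time_ms(3, 10, 10))   # 30
# Local side can only receive every 20ms, so the agreed interval becomes 20ms.
print(detection_time_ms(3, 20, 10))   # 60
```

Note that each direction is computed independently, so the two ends of a session can end up with different detection times.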

BFD has several key characteristics:

Protocol independence – It works regardless of routing protocol (OSPF, BGP, IS-IS) or link type (Ethernet, PPP). Any reachable connection—physical or logical (VPN, GRE)—can be monitored, making BFD versatile in complex networks.

Lightweight design – BFD control packets are very small: the mandatory section is 24 bytes (without authentication), minimizing bandwidth usage.

Flexible detection intervals – Administrators can adjust transmit intervals and detection multipliers to balance speed and resource use.

These features allow BFD to overcome the slow “second-level” detection of traditional protocols.
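To make the lightweight packet design concrete, here is a minimal sketch that packs the 24-byte mandatory section of a BFD control packet following the field layout in RFC 5880, section 4.1. The helper name and the example values are assumptions for illustration; authentication and the optional flag bits are left at zero.

```python
import struct

BFD_VERSION = 1
# Session state codes defined by RFC 5880
STATE_ADMIN_DOWN, STATE_DOWN, STATE_INIT, STATE_UP = 0, 1, 2, 3


def pack_bfd_control(state: int, detect_mult: int, my_disc: int, your_disc: int,
                     desired_min_tx_us: int, required_min_rx_us: int,
                     required_min_echo_rx_us: int = 0,
                     diag: int = 0, flags: int = 0) -> bytes:
    """Pack the mandatory section of a BFD control packet (no authentication)."""
    length = 24                                        # bytes, mandatory section only
    byte0 = (BFD_VERSION << 5) | (diag & 0x1F)         # Vers (3 bits) | Diag (5 bits)
    byte1 = ((state & 0x03) << 6) | (flags & 0x3F)     # Sta (2 bits) | P F C A D M
    return struct.pack("!BBBBIIIII", byte0, byte1, detect_mult, length,
                       my_disc, your_disc,
                       desired_min_tx_us, required_min_rx_us,
                       required_min_echo_rx_us)


# Intervals are carried in microseconds; 300_000 us = 300ms.
pkt = pack_bfd_control(STATE_DOWN, 3, my_disc=0x11, your_disc=0,
                       desired_min_tx_us=300_000, required_min_rx_us=300_000)
print(len(pkt))  # 24
```

On the wire this payload is carried in a UDP datagram, so the total frame is somewhat larger than 24 bytes once IP and Ethernet headers are added.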

As networks grow—like backbone networks running thousands of BFD sessions—the traditional CPU-driven model for sending, receiving, and maintaining sessions hits resource limits. Hardware offloaded BFD overcomes this bottleneck by moving detection to the ASIC.

Ⅱ. What Is Hardware Offloaded BFD on SONiC

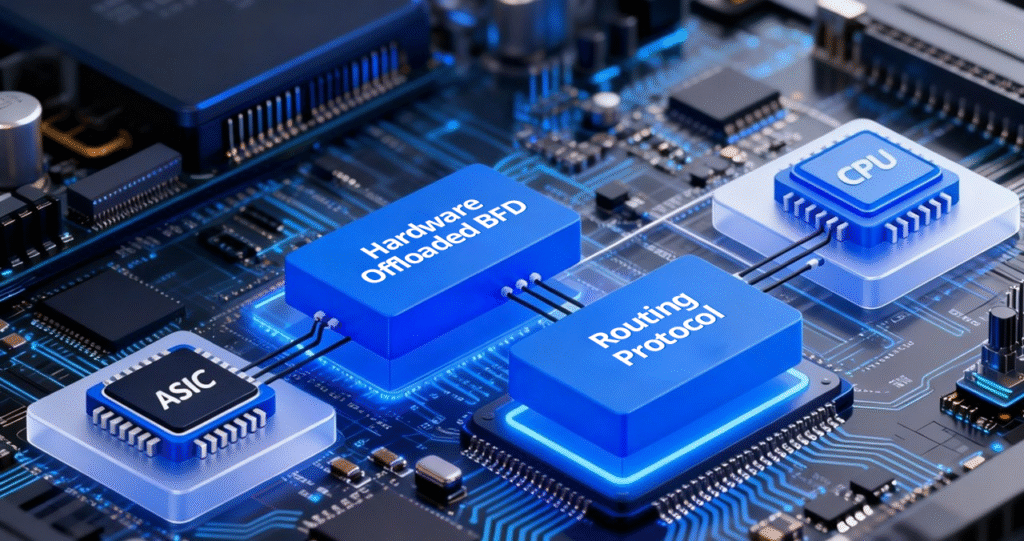

The key point is that hardware offloaded BFD moves the detection process down to the ASIC, so the hardware handles it instead of the CPU. Because the CPU no longer executes the per-packet detection work, CPU and memory load drop significantly.

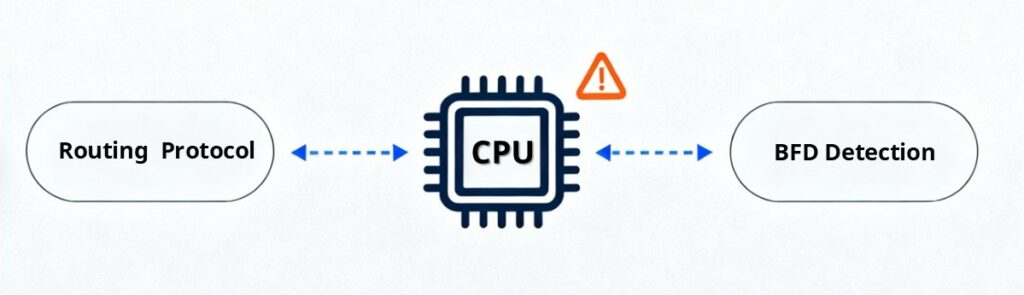

In traditional BFD, the CPU handles the full workflow: establishing sessions, sending and receiving control packets, checking for timeouts, and reporting failures to routing protocols. Each step consumes CPU resources.
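A rough back-of-the-envelope calculation shows why this becomes a problem at scale. The sketch below estimates how many control packets per second the CPU would have to transmit (and, symmetrically, receive) for a given session count and transmit interval. The session counts and intervals are hypothetical examples, and the estimate ignores the transmit jitter that real implementations apply.

```python
def control_packets_per_second(sessions: int, tx_interval_ms: float) -> float:
    """Packets sent per second across all sessions, in one direction."""
    return sessions * (1000.0 / tx_interval_ms)


# Hypothetical deployments: (session count, transmit interval in ms)
for sessions, interval_ms in [(100, 300), (1000, 100), (4000, 10)]:
    pps = control_packets_per_second(sessions, interval_ms)
    print(f"{sessions} sessions @ {interval_ms}ms: ~{pps:.0f} packets/s each way")
```

The load grows linearly with the number of sessions and inversely with the interval, so shortening the interval and adding sessions at the same time multiplies the work a software implementation must do on time.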

Hardware offloaded BFD shifts these tasks to a dedicated ASIC. The ASIC handles packet transmission and reception in real time, tracks timeouts, and determines session state. The CPU is involved only for session setup or parameter changes, while daily detection runs entirely on the hardware.

This difference in processing responsibility leads to clear advantages in resource usage, detection performance, stability, and scalability.

Below is a table comparing traditional BFD with hardware offloaded BFD:

Comparison of Traditional BFD and Hardware Offloaded BFD on SONiC

| Comparison Dimension | Software BFD | Hardware BFD |
| --- | --- | --- |
| Detection Interval | Typically ≥100ms (too low may cause false alarms) | Supports 3ms–50ms, suitable for low-latency networks |
| Jitter Tolerance | Easily affected by system scheduling and CPU load fluctuations | Barely affected by control plane load, detection is more stable |
| Maximum Session Count/Scalability | Limited by CPU and thread management, relatively small scale | Determined by hardware table entries, supports thousands of sessions for large-scale deployment |
| CPU Usage | Consumes main CPU resources; intensive detection significantly increases load | Offloaded to hardware; minimal CPU usage |
| False Alarm Risk | High; sessions may be misjudged as down when the system is busy | Low; determination is done by hardware, less affected by timing jitter |
| Debugging & Controllability | Flexible debugging and logging via software | Debug interface depends on chip SDK or drivers; more complex |
| Deployment Suitability | Suitable for small to medium networks or scenarios not sensitive to detection interval | Suitable for large-scale, low-latency scenarios such as backbone, IDC, or financial networks |

In practice, Asterfusion’s hardware-offloaded BFD solution on SONiC enables operators to scale to thousands of sessions while maintaining stable millisecond detection—something traditional CPU-based designs cannot achieve.

Ⅲ. How Does Hardware Offloaded BFD Work with Routing Protocols

Asterfusion currently supports integration of hardware offloaded BFD on SONiC with BGP, OSPF, IS-IS, and VRRP. Next, let’s explore how it works through the detection mechanism, workflow, session establishment, and fault handling.

Detection Mechanism

BFD detects link availability by periodically exchanging control packets between two devices. The BFD state machine has four states: Down, Init, Up, and AdminDown.

Key aspects of the detection mechanism:

- Timer Mechanism: Devices send BFD packets at negotiated intervals, e.g., every 300ms.

- Bidirectional Detection: Both ends simultaneously transmit and receive packets to ensure two-way connectivity.

- Detect Multiplier: If N consecutive packets are lost (e.g., 3), the peer is considered down.

- Fast Response: Link failures can be detected within milliseconds, significantly faster than traditional routing protocol Hello timers.

Once a BFD session transitions to the Down state, it immediately notifies the associated upper-layer protocol (such as BGP or OSPF), triggering fast routing convergence or link failover.

Workflow

Before we look at session establishment in detail, let’s first understand the overall workflow of hardware offloaded BFD, which mainly consists of the following steps:

BFD Session Configuration Deployment: The control plane first sets up the BFD session and programs its parameters (detection interval, transmit and receive intervals, and remote IP) into the forwarding ASIC via the SDK.

Hardware Periodic Packet Transmission: The ASIC, according to its hardware logic, periodically sends BFD control packets (typically encapsulated in UDP). These packets are transmitted directly from the data plane, bypassing the main CPU entirely.

Hardware Packet Reception and Processing: Upon receiving BFD packets from the peer, the local ASIC processes them in hardware, determines the session state (e.g., Up, Down, Init), and updates the local BFD session status.

Detection Result Reporting to the Control Plane: If a link anomaly is detected, or packets are not received within the timeout period, the forwarding ASIC reports the BFD state change to the control plane, which may trigger routing failover.

Fast Routing Convergence: Routing protocols such as OSPF, BGP, IS-IS, and VRRP immediately react to the state change event, performing rapid convergence or link switchover.
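The division of labor in the steps above can be sketched as a toy model. Everything here is hypothetical: `FakeAsic`, `BfdSessionParams`, and the callback are stand-ins invented for illustration and do not correspond to any real SDK, SAI, or SONiC interface. The point is only that the control plane programs a session once and afterwards hears from the hardware solely when the state changes.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class BfdSessionParams:
    remote_ip: str
    tx_interval_ms: int
    rx_interval_ms: int
    detect_mult: int


class FakeAsic:
    """Stand-in for the forwarding ASIC (hypothetical, not a real SDK API)."""

    def __init__(self, notify: Callable[[str, str], None]) -> None:
        self.sessions: Dict[str, BfdSessionParams] = {}
        self.state: Dict[str, str] = {}
        self._notify = notify

    def program_session(self, params: BfdSessionParams) -> None:
        # Step 1: the control plane pushes session parameters to hardware.
        self.sessions[params.remote_ip] = params
        self.state[params.remote_ip] = "Down"

    def set_state(self, remote_ip: str, new_state: str) -> None:
        # Steps 2-4: hardware tracks the session and reports only changes.
        if self.state[remote_ip] != new_state:
            self.state[remote_ip] = new_state
            self._notify(remote_ip, new_state)


events: List[str] = []


def on_bfd_event(remote_ip: str, state: str) -> None:
    # Step 5: a routing protocol would react here (e.g. tear down a neighbor).
    events.append(f"{remote_ip} -> {state}")


asic = FakeAsic(on_bfd_event)
asic.program_session(BfdSessionParams("10.0.0.2", 10, 10, 3))
asic.set_state("10.0.0.2", "Up")
asic.set_state("10.0.0.2", "Up")     # steady state: no CPU notification
asic.set_state("10.0.0.2", "Down")
print(events)
```

In this model the steady-state exchange of control packets never reaches the callback, which mirrors why CPU usage stays low while sessions are healthy.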

Session Establishment

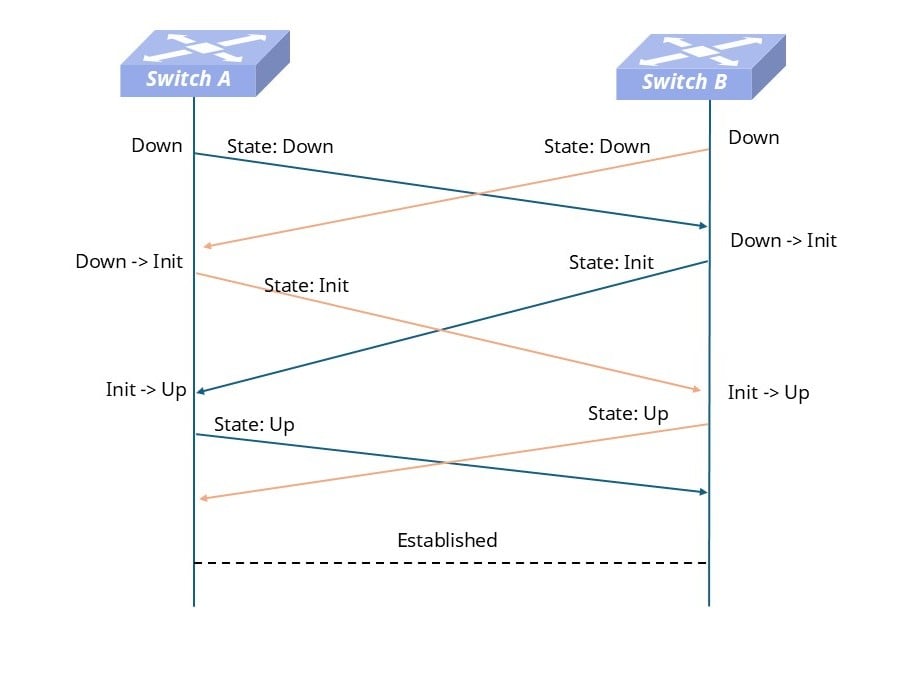

As shown in the figure, when a BFD session is to be established between SwitchA and SwitchB, it goes through the following steps:

Flowchart of BFD Session Establishment

Initialization Phase: Both devices configure BFD sessions, with the initial state set to Down. Each side periodically sends BFD control packets (UDP destination port 3784 for single-hop sessions; port 3785 is used for BFD echo packets).

State Transition (Down → Init): When one side (B) receives a State=Down packet from the peer (A), it knows that the peer is running BFD. B then transitions to the Init state and sends State=Init packets.

State Transition (Init → Up): When a side in the Init state receives a State=Init or State=Up packet from its peer, it has confirmation that the peer can hear it, so two-way reachability is established. The state machine transitions to Up, completing session establishment and starting normal detection.

State Transition (Up → Down): If no packet from the peer is received within the detection time (the negotiated interval multiplied by the Detect Multiplier), the session reverts to the Down state, and the upper-layer protocol is notified of the link failure.
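The transitions above can be captured in a few lines. The sketch below follows the reception rules in RFC 5880, section 6.8.6, reduced to the state field only (diagnostic codes, discriminators, and timers are omitted). The function names are illustrative.

```python
from enum import Enum


class State(Enum):
    ADMIN_DOWN = 0
    DOWN = 1
    INIT = 2
    UP = 3


def on_packet(local: State, received: State) -> State:
    """Next local state after receiving a control packet with the given state."""
    if local is State.ADMIN_DOWN:
        return local                      # administratively disabled: ignore
    if received is State.ADMIN_DOWN:
        return State.DOWN                 # peer was shut down by its operator
    if local is State.DOWN:
        if received is State.DOWN:
            return State.INIT             # peer is alive but has not heard us yet
        if received is State.INIT:
            return State.UP               # peer has heard us: two-way confirmed
        return local
    if local is State.INIT:
        return State.UP if received in (State.INIT, State.UP) else local
    # local is UP
    return State.DOWN if received is State.DOWN else local


def on_detection_timeout(local: State) -> State:
    """Next local state when the detection time expires without a packet."""
    return State.DOWN if local in (State.INIT, State.UP) else local


# One possible handshake ordering between A and B, both starting in Down.
a = b = State.DOWN
b = on_packet(b, a)   # B hears A's Down  -> B: Init
a = on_packet(a, b)   # A hears B's Init  -> A: Up
b = on_packet(b, a)   # B hears A's Up    -> B: Up
print(a.name, b.name)  # UP UP
```

The exact sequence depends on packet timing. If both sides receive each other's Down packet first, both pass through Init before reaching Up.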

Fault Handling

When a device receives a BFD fault notification, how is the fault handled?

The following example illustrates the BFD fault handling mechanism using the interaction between BGP and BFD.

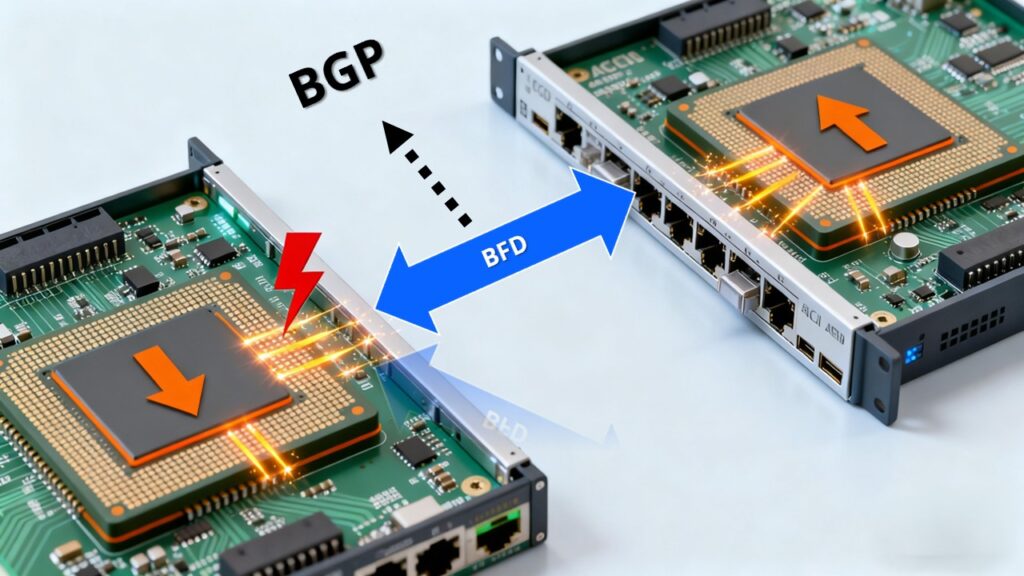

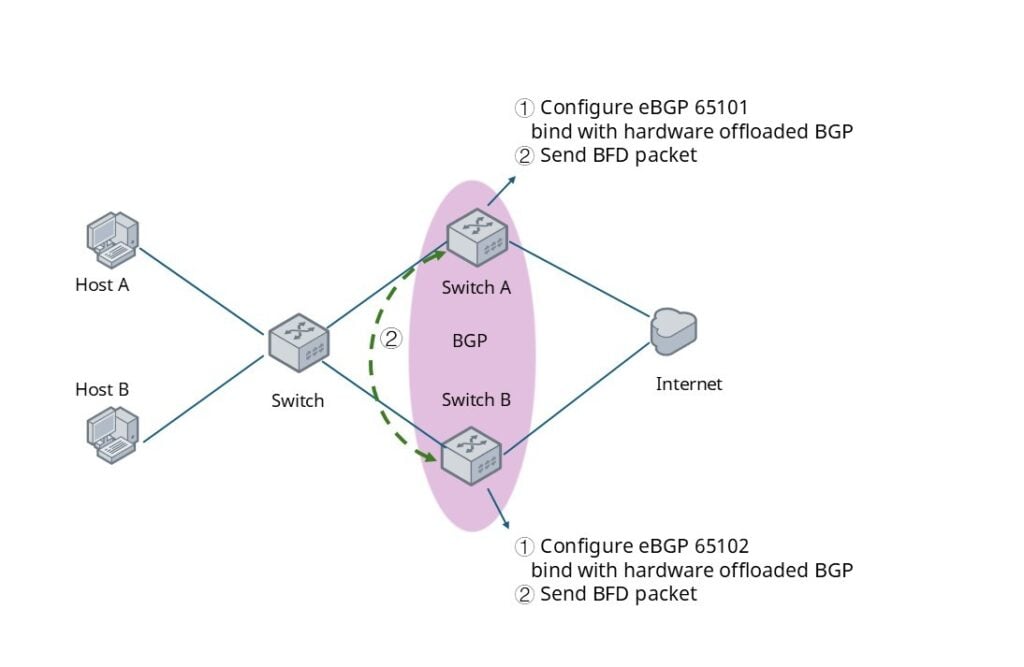

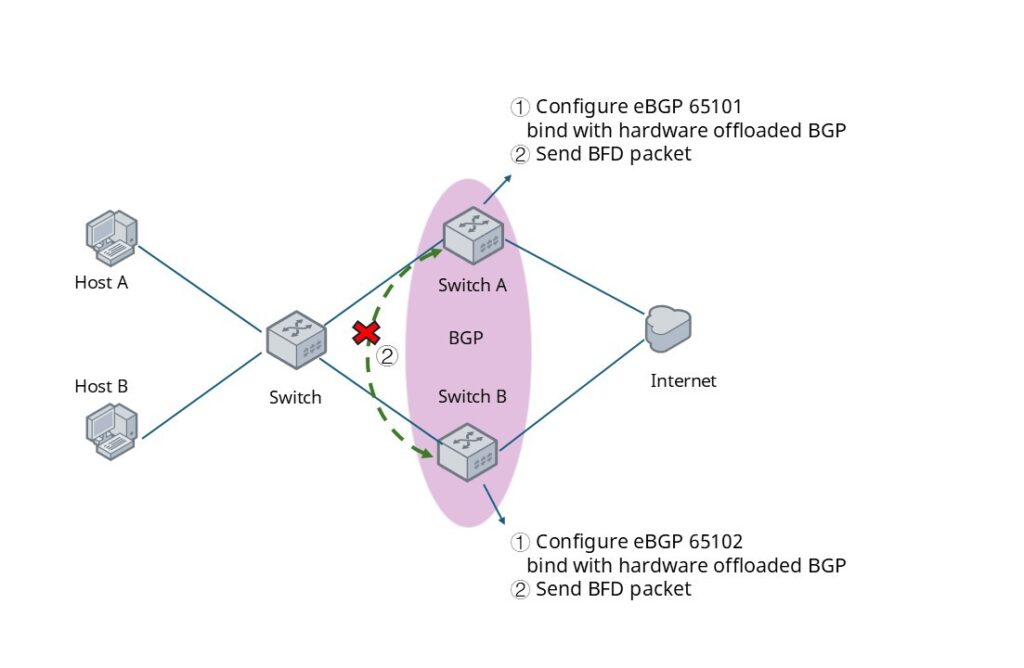

As shown in the figure, an eBGP neighbor relationship is established between Switch A and Switch B, with hardware offloaded BFD on SONiC enabled. Under normal conditions, both devices periodically exchange BFD control packets at the negotiated transmit interval (default: 300ms, with a detect multiplier of 3).

If the physical link between Switch A and Switch B fails or a device goes down, Switch B stops receiving packets from Switch A. Once three consecutive packets have been missed, that is, when the detection time expires, the BFD session transitions to Down. This, in turn, triggers the linked BGP session to go Down and initiates route convergence.
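The timing of this failure can be traced with a short simulation. The sketch below assumes the default values quoted above (300ms interval, multiplier of 3) and treats the detection time as their product. It takes the arrival times of received packets and returns the moment the detection timer would expire. The function name and the sample timestamps are made up for illustration.

```python
from typing import List, Optional


def detect_down_time(arrivals_ms: List[int], observe_until_ms: int,
                     tx_interval_ms: int = 300,
                     detect_mult: int = 3) -> Optional[int]:
    """Return when the session is declared Down, or None if it stays Up.

    Each received packet restarts the detection timer. The session goes
    Down at the first moment the timer expires with no new packet.
    """
    detection_time = tx_interval_ms * detect_mult
    deadline = arrivals_ms[0] + detection_time
    for t in arrivals_ms[1:]:
        if t > deadline:
            return deadline           # timer expired before this packet arrived
        deadline = t + detection_time
    return deadline if observe_until_ms >= deadline else None


# Packets arrive every 300ms, then the link fails right after t=900ms.
print(detect_down_time([0, 300, 600, 900], observe_until_ms=3000))     # 1800
# A single lost packet (the one due at t=600ms) does not bring the session down.
print(detect_down_time([0, 300, 900, 1200], observe_until_ms=1500))    # None
```

With these defaults the failure is declared 900ms after the last received packet. Shorter intervals, which hardware offload makes practical, shrink that window proportionally.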

Ⅳ. Typical Use Cases of Hardware Offloaded BFD on SONiC

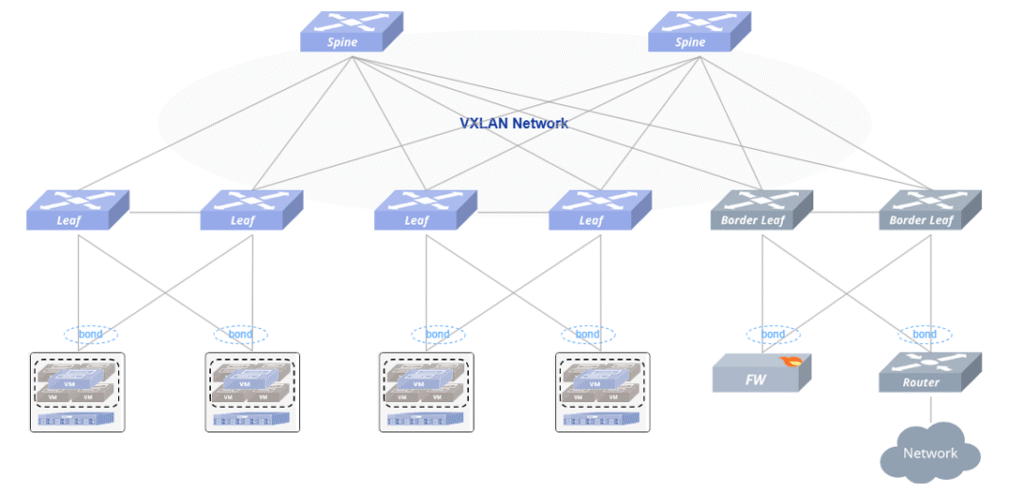

The figure illustrates a typical cloud computing network scenario. It adopts an EVPN MC-LAG dual-homing active-active VXLAN distributed gateway architecture, combining the advantages of EVPN and MC-LAG technologies. This design provides high reliability in access scenarios, enables virtualization and multi-tenant networks, supports independent upgrades and flexible scaling, and optimizes cloud service resource allocation and traffic management.

Typical Cloud Network Topology Diagram

In high-availability scenarios, fast convergence mechanisms during network topology changes or failures are critical for service continuity. Users can improve fault convergence speed through the following configuration approaches:

- Spine-to-Leaf BGP Sessions: Establish BGP sessions between Spine and Leaf switches for route exchange. BGP can be linked with hardware offloaded BFD sessions to achieve rapid fault detection and fast route convergence.

- Border Leaf to Firewall Connections: These links typically use static routes. Compared with track-based linkage (convergence usually in seconds), linking static routes with BFD sessions can significantly improve fault convergence speed.

- Border Leaf to Border Router Connections: Dynamic routing updates are usually achieved via BGP sessions. In simpler scenarios, static routes can also be used. In both cases, BFD sessions can be linked to accelerate fault detection and route convergence.

👉 Typical deployment models: Asterfusion CX732Q-N (Spine), CX308P-48Y-N-V2 (Leaf) and other SKUs.

Ⅴ. Conclusion

After reading the above, you should now understand the value of hardware offloaded BFD on Asterfusion Enterprise SONiC. In cloud computing and carrier backbone networks especially, the number of links and neighbor sessions grows rapidly with network scale. Relying only on software BFD can create resource bottlenecks and unpredictable detection behavior.

Hardware offloaded BFD on SONiC moves detection to the ASIC, handling packet processing and session state in hardware. This reduces CPU load, allows shorter detection intervals, improves stability, and scales better.

Asterfusion’s data center switches support hardware BFD linked with BGP, OSPF, IS-IS, and VRRP. In large-scale ECMP and Spine-Leaf networks, they detect link failures within milliseconds while keeping CPU resources free for upper-layer protocols and applications.

For next-generation cloud or backbone networks, pairing switches that support hardware BFD with Asterfusion Enterprise SONiC accelerates convergence and helps ensure service continuity, though actual performance depends on ASIC implementation and network scale.

For more details, visit the Asterfusion Data Center Product Page.