
Imagine an AI data center as a giant, ultra-efficient brain. To function properly, every neuron (GPU, TPU, CPU) must fire in perfect coordination. The secret behind this harmony? The network.

What is AI Networking?

AI Networking is the high-performance nervous system of AI workloads—purpose-built to handle massive data movement with ultra-low latency, high throughput, and lossless reliability. It’s not designed for web browsing or email servers, but for feeding and synchronizing thousands of hungry accelerators working in parallel to train, infer, serve, and refine large AI models like GPT-4. Whether it’s the intense data exchange during training or the high-speed responsiveness required for real-time inference, AI Networking ensures the entire AI pipeline runs efficiently and at scale.

Why Do We Need AI Networking?

AI workloads are growing at a breakneck pace, and they’re pushing the limits of traditional data center networks. Today’s largest models (GPT-4 is widely reported, though not officially confirmed, to have more than a trillion parameters) are far too large for any single GPU. Training them requires thousands of GPUs working in sync, generating hundreds of terabytes of data traffic every day. On top of that, training datasets can span tens of terabytes (like the 38.5TB C4 dataset), while real-time inference has to handle high volumes of requests without delay. To make it all work, AI systems rely on complex parallel strategies such as data parallelism, tensor parallelism, pipeline parallelism, and even 3D parallelism, all of which place enormous demands on the network. That’s why modern AI networking isn’t just helpful; it’s essential.

Curious about data parallelism, tensor parallelism, pipeline parallelism, or even 3D parallelism? Check out this article: Unveiling AI Data Center Network Traffic.

Limitations of Traditional Networks

Traditional data center networks are built for general-purpose tasks like web hosting and databases, with characteristics like:

- North-South Traffic: User requests flow in from the outside and responses flow back out.

- Tolerant Latency: A few milliseconds of delay are acceptable.

- Simple Architecture: A two-tier Clos structure (leaf-spine) handles most workloads.

AI networking, however, is a different beast:

- East-West Traffic: Intense “chatter” between GPUs (e.g., AllReduce, AllGather) dominates, with bursty and dense data flows.

- Ultra-Low Latency: Microsecond-level delays are critical; any lag wastes GPU resources.

- Zero Tolerance for Loss: A single dropped packet can stall thousands of GPUs, disrupting tasks.
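
To make the AllReduce “chatter” a bit more concrete, here is a minimal sketch of a ring all-reduce simulated in plain Python. It is an illustration only: real clusters use collective communication libraries over the network, and the function name `ring_allreduce` and the sample gradients are made up for this example. Each simulated worker exchanges one chunk with its neighbour per step, which is why every GPU ends up sending and receiving at the same time.

```python
def ring_allreduce(grads):
    """Sum equal-length gradient vectors across workers using a simulated ring.

    grads[i] is worker i's local gradient. The vector length must be a
    multiple of the worker count so it splits into one chunk per worker.
    """
    n = len(grads)
    size = len(grads[0])
    assert size % n == 0, "vector length must be a multiple of worker count"
    c = size // n
    # Each worker keeps its own copy of the vector, split into n chunks.
    data = [[list(g[k * c:(k + 1) * c]) for k in range(n)] for g in grads]

    # Phase 1: reduce-scatter. In step s, worker i sends chunk (i - s) % n to
    # its right neighbour, which adds it to its own copy of that chunk.
    for s in range(n - 1):
        sends = [(i, (i - s) % n, list(data[i][(i - s) % n])) for i in range(n)]
        for i, k, chunk in sends:
            dst = (i + 1) % n
            data[dst][k] = [a + b for a, b in zip(data[dst][k], chunk)]

    # Phase 2: all-gather. Worker i now holds the fully summed chunk
    # (i + 1) % n and forwards finished chunks around the ring.
    for s in range(n - 1):
        sends = [(i, (i + 1 - s) % n, list(data[i][(i + 1 - s) % n]))
                 for i in range(n)]
        for i, k, chunk in sends:
            data[(i + 1) % n][k] = chunk

    # Flatten each worker's chunks back into one vector.
    return [[x for chunk in worker for x in chunk] for worker in data]


# Three hypothetical workers, each with a six-element gradient.
local_grads = [
    [1, 2, 3, 4, 5, 6],
    [10, 20, 30, 40, 50, 60],
    [100, 200, 300, 400, 500, 600],
]
synced = ring_allreduce(local_grads)
```

After the call, every worker holds the same summed vector. The traffic is all worker-to-worker (east-west), and a delay on any single link holds up every step of the ring.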

The Critical Role of AI Networking

AI networking is no longer just a “connection tool” — it has become a core part of AI systems, often referred to as the “second brain.” Its performance directly affects training speed, GPU utilization, and the overall cost and energy efficiency of a data center. A high-performance network can significantly accelerate model training, prevent GPUs from idling while waiting for data, and optimize resource usage. In today’s AI race, the strength of your network can be a decisive factor — enabling faster model iteration and quicker time-to-market, ultimately giving you a competitive edge.

| Feature | Traditional Data Center Networking | AI Networking |
| --- | --- | --- |
| Primary Use | Web, databases, storage | AI training, inference, data processing |
| Traffic Pattern | North-South (user-server) | East-West (GPU-GPU, GPU-storage) |
| Latency Needs | Milliseconds, relatively relaxed | Microseconds, extremely strict |
| Hardware | CPU servers + some GPUs | Thousands of GPUs/TPUs |
| Reliability | Tolerates minor packet loss | Zero packet loss, lossless network |

In a nutshell: traditional networking is like city roads for daily traffic, while AI networking is like an F1 racetrack built for speed, precision, and zero errors.

Understanding AI Networks via Traffic Models

Next, I’ll explain AI data center traffic in a simple and easy-to-understand way—so even networking beginners can get it. If you’re looking for the more technical and serious version, check this out: Unveiling AI Data Center Network Traffic

To truly grasp why AI networks are so different, let’s step into the AI kitchen and see how it operates.

A data center is like a bustling “AI kitchen” cooking up “language feasts” (like ChatGPT). This kitchen has three main tasks: training (teaching AI to cook), inference (serving dishes to customers), and storage (managing ingredients and recipes). Each task relies on a “delivery guy” (the network) to move stuff quickly, accurately, and without losing anything! Below, I’ll explain these three tasks in plain, professional language and how the network helps out.

🍳 AI Training: Teaching the AI to Cook

What’s training?

Training is like teaching a clueless robot chef (AI model) to whip up a feast, like making ChatGPT chat. At first, the chef has a blank “recipe book” (model parameters). You give them a ton of “ingredients” (data) and show them how to cook, step by step.

What happens?

- Gather ingredients: Grab a huge pile of data, including articles, images, and videos. Text alone can be enormous: the C4 dataset is 38.5TB, enough space for thousands of HD movies.

- Practice cooking: Have the chef read something like “It’s nice today” and guess the next sentence (forward propagation). If they mess up, correct them (calculate loss) and tweak the “seasoning” (backpropagation, updating parameters).

- Keep practicing: Run multiple rounds (epochs), switching up ingredients each time until the chef can cook a great meal.

- Save the recipe: Regularly store the “recipe” (checkpoints, about 560GB) to avoid forgetting how to cook.

Real-life analogy: It’s like teaching a kid to recite poems. Give them a bunch of poems (data), teach them line by line, correct mistakes, and have them memorize it. Training is the process of turning AI from clueless to chatty—it’s time-consuming and intense!
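
The four steps above can be sketched as a tiny training loop. This is a toy under stated assumptions, not how a large model is actually trained: the “model” is a single number `w` fitted to made-up samples of y = 3x, and the function name `train`, the learning rate, and the JSON checkpoint format are all hypothetical choices for illustration.

```python
import json
import os
import tempfile


def train(data, epochs=50, lr=0.01, checkpoint_every=10, ckpt_dir=None):
    """Fit y = w * x with plain gradient descent, saving periodic checkpoints."""
    ckpt_dir = ckpt_dir or tempfile.mkdtemp()
    w = 0.0  # the blank "recipe book": a single parameter
    last_ckpt = None
    for epoch in range(1, epochs + 1):      # keep practicing (epochs)
        grad = 0.0
        loss = 0.0
        for x, y in data:
            pred = w * x                    # forward propagation
            err = pred - y
            loss += err * err               # squared-error loss
            grad += 2 * err * x             # backpropagation: d(loss)/dw
        w -= lr * grad / len(data)          # parameter update
        if epoch % checkpoint_every == 0:   # save the recipe (checkpoint)
            last_ckpt = os.path.join(ckpt_dir, f"epoch_{epoch}.json")
            with open(last_ckpt, "w") as f:
                json.dump({"epoch": epoch, "w": w, "loss": loss / len(data)}, f)
    return w, last_ckpt


# Made-up "ingredients": samples of y = 3x.
samples = [(x, 3.0 * x) for x in (1.0, 2.0, 3.0, 4.0)]
weight, ckpt = train(samples)
```

In a real cluster the same loop runs on many GPUs at once, so the gradient step becomes a network operation and the checkpoint write becomes a large transfer to storage.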

Network needs: Super busy group-chat delivery

During training, hundreds or thousands of GPU chefs “join a group chat” (AllReduce/AllGather) to say, “I’ve got the sauce right!” (syncing gradients and results). They also move 308TB of ingredients (8 GPUs each reading the 38.5TB C4 dataset) and save 560GB recipes. The network needs to be like a “super high-speed train”:

- Super fast: Deliver 100GB of data 1,000 times a second, with 20 Asterfusion 51.2T switches running for 20 days.

- No delays: Microsecond-level latency; any lag halts the chefs.

- No lost packages: Zero packet loss (using RoCE/ECN/PFC tech); one lost package messes up the whole kitchen.

- Full coverage: Fat-Tree topology keeps the “group chat” flowing smoothly.

- Smart navigation: INT-based smart routing avoids “traffic jams.”
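
As a rough sanity check, the training figures quoted above can be lined up with a few lines of arithmetic. The inputs are the numbers from this section. The interpretation is an assumption: the 308TB total is read as 8 GPUs times the 38.5TB dataset, decimal units are used (1TB = 1,000GB), and protocol overhead is ignored.

```python
DATASET_TB = 38.5            # C4 size quoted in this article
GPUS = 8                     # GPUs in the example
total_read_tb = DATASET_TB * GPUS            # data moved if each GPU reads the dataset

BURST_GB = 100               # size of one delivery
BURSTS_PER_SECOND = 1000     # deliveries per second
# GB/s -> Gb/s (x8) -> Tb/s (/1000)
required_tbps = BURST_GB * BURSTS_PER_SECOND * 8 / 1000

SWITCHES = 20
SWITCH_TBPS = 51.2           # capacity of one switch
aggregate_tbps = SWITCHES * SWITCH_TBPS      # combined switching capacity

headroom_tbps = aggregate_tbps - required_tbps
```

Under these assumptions the total read volume works out to 308TB, the delivery rate to 800Tbps, and the 20 switches to 1,024Tbps of combined capacity, which leaves some headroom above the required rate.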

🍽️ AI Inference: Serving Dishes to the Users

What’s inference?

Inference is when the AI, now a trained chef, serves dishes to customers (users). For example, you ask ChatGPT, “Will it rain tomorrow?” and it quickly “cooks” an answer, with the recipe (model) already memorized.

What happens?

- Take the order: Read the customer’s request (input, like “Write a poem”) and jot down “order notes” (KV cache).

- Cook and serve: Generate the answer word by word (autoregressive generation), serving it up fast.

- Handle multiple orders: Manage 100 customers ordering at once (high concurrency), like a delivery guy staying calm under pressure.

Real-life analogy:

It’s like a restaurant where the chef knows how to make braised pork. When a customer orders, they whip it up and serve it fast. Inference is putting AI’s “cooking skills” to work—quick, accurate, and sharp!
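
Here is a toy sketch of the order-taking and word-by-word serving described above. Everything in it is hypothetical: `encode` stands in for the per-token work a real model does, and `make_toy_model` just replays a canned reply instead of running a neural network. The point is only the shape of the loop: the prompt is processed once, and each new word adds a single entry to the “order notes” (KV cache) instead of re-reading the whole conversation.

```python
def encode(token):
    """Hypothetical per-token computation that produces a cache entry."""
    return (len(token), sum(map(ord, token)) % 97)


def make_toy_model(reply, prompt_len):
    """Return a stand-in 'model' that replays a canned reply, then stops."""
    def next_token(kv_cache):
        i = len(kv_cache) - prompt_len   # how many tokens were generated so far
        return reply[i] if i < len(reply) else "<eos>"
    return next_token


def generate(prompt, max_new_tokens, next_token):
    """Autoregressive generation that reuses cached per-token state."""
    kv_cache = [encode(t) for t in prompt]   # take the order: process prompt once
    encode_calls = len(prompt)
    output = []
    for _ in range(max_new_tokens):
        tok = next_token(kv_cache)           # cook the next word
        if tok == "<eos>":
            break
        output.append(tok)
        kv_cache.append(encode(tok))         # only the new token is processed
        encode_calls += 1
    return output, encode_calls


prompt = ["write", "a", "poem"]
model = make_toy_model(["roses", "are", "red"], prompt_len=len(prompt))
words, calls = generate(prompt, max_new_tokens=10, next_token=model)
```

Because the cache grows with every word and every customer has their own, moving it between GPUs quickly is what drives the network requirements for inference.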

Network needs: Lightning-fast delivery

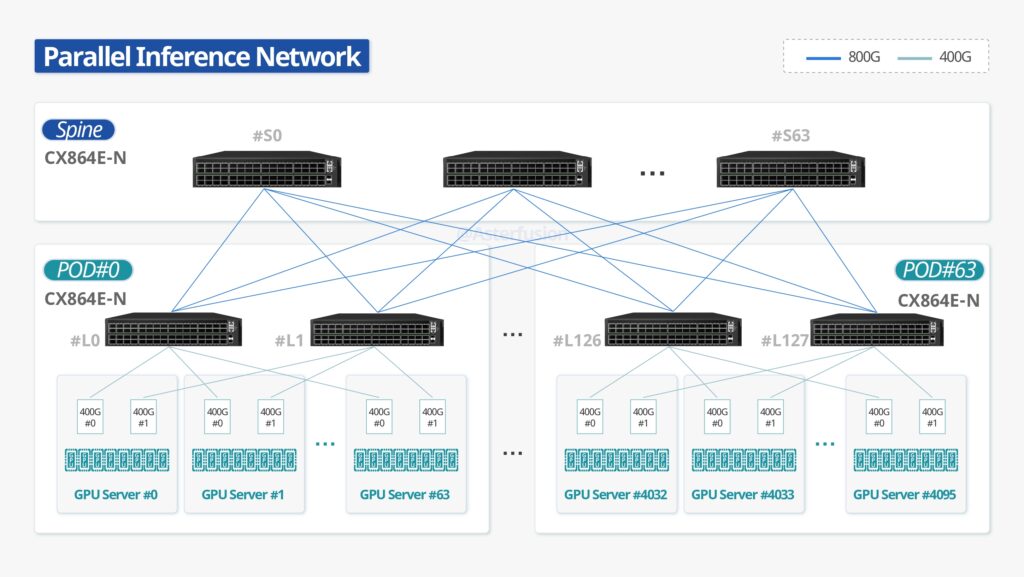

During inference, GPUs “direct message” (point-to-point) to pass notes and results. For 100 customers, that’s 21.9TB of traffic, with each customer’s notes (1.17GB) needing delivery in 10 milliseconds (44.4Tbps throughput). The network needs to be like “lightning-fast delivery”:

- Super fast: Deliver one word in 1 millisecond (800Gbps links, RoCEv2 protocol), with one Asterfusion 51.2T switch serving 115 people.

- Steady: Low jitter, like delivering food without spilling soup.

- Priority delivery: Smart QoS acts like a “VIP lane” for urgent orders.

- Smooth roads: Clos topology handles multiple orders without clogging.

- No lost orders: Zero packet loss—losing one makes customers mad.
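
The “115 people per switch” figure can be reproduced from the throughput numbers in this section. This is a back-of-the-envelope sketch that assumes the 44.4Tbps is shared evenly across the 100 customers and that the switch can be filled to its full 51.2Tbps.

```python
TOTAL_TBPS = 44.4        # throughput quoted for 100 concurrent customers
USERS = 100
SWITCH_TBPS = 51.2       # capacity of one switch

per_user_tbps = TOTAL_TBPS / USERS                     # share per customer
per_user_gbps = per_user_tbps * 1000
users_per_switch = int(SWITCH_TBPS // per_user_tbps)   # whole customers that fit
```

With these inputs each customer needs about 444Gbps, which fits within a single 800Gbps link, and one switch covers 115 customers.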

Want to learn more about Asterfusion’s AI inference deployment? Check out this case study:

Case Study | Paratera × Asterfusion: Building a Future-Proof AI Inference Network

🧊 AI Storage: Managing the Warehouse

What’s storage?

Storage is the AI kitchen’s “big warehouse,” handling “ingredients” (data) and “recipes” (model parameters). Both training and inference need to grab or store stuff here, and the warehouse must stay organized.

What happens?

- Store ingredients: Keep massive datasets (C4, 38.5TB) for training and save user questions/answers during inference.

- Store recipes: Save model parameters (e.g., Llama3-70B with 70 billion parameters, checkpoints at 560GB) for training backups and inference loading.

- Move stuff around: Training mostly grabs ingredients (read-heavy), while inference saves results (write-heavy).

Real-life analogy:

It’s like your fridge at home, storing meat and veggies (data). You grab ingredients to cook and store leftovers. Storage keeps AI’s “ingredients” and “recipes” in order—no mess allowed!

Network needs: Steady heavy-duty delivery

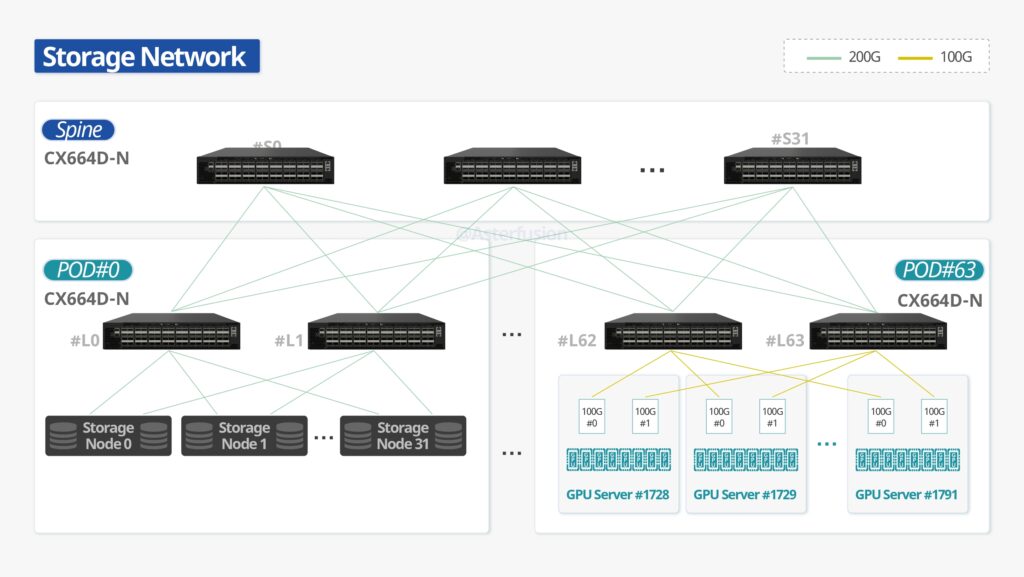

Storage moves 308TB of ingredients (8-GPU tensor parallelism) and saves 560GB recipes, with low frequency but zero tolerance for loss. The network needs to be like “cold-chain shipping”:

- Big capacity: 25.6Tbps (using a subset of the ports on an Asterfusion 51.2T switch) to move TB-scale ingredients.

- Fast access: NVMe-oF protocol for direct delivery.

- No lost goods: PFC prevents packet loss—losing data makes AI “forget.”

- Freight network: Dragonfly topology suits fetching and storing.

- Always on: High-availability clusters keep the warehouse running 24/7.
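
To get a feel for these storage numbers, here is a small calculation of ideal transfer times at the 25.6Tbps quoted above. It assumes decimal units, a fully used link, and no protocol or disk overhead, so real transfers will take longer. The helper name `transfer_seconds` is made up for this sketch.

```python
def transfer_seconds(size_tb, link_tbps):
    """Ideal transfer time: terabytes -> terabits, divided by the line rate."""
    return size_tb * 8 / link_tbps


STORAGE_TBPS = 25.6

dataset_s = transfer_seconds(308, STORAGE_TBPS)       # the 308TB of ingredients
checkpoint_s = transfer_seconds(0.56, STORAGE_TBPS)   # one 560GB recipe

# Checkpoint size per parameter for the 70-billion-parameter example.
bytes_per_param = 560e9 / 70e9
```

Under these assumptions the 308TB move takes roughly 96 seconds and a 560GB checkpoint well under a second, and the checkpoint works out to 8 bytes per parameter.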

AI Training vs. Inference vs. Storage

| Task | AI Training | AI Inference | AI Storage |
| --- | --- | --- | --- |
| Purpose | Teach AI models by feeding data & adjusting parameters | Use trained models to respond to user input | Store training data and model parameters |
| Analogy | Teaching a chef to cook | Chef serving food to customers | Warehouse storing ingredients and recipes |
| Data Volume | 308TB+ per session | 21.9TB for 100 users | Up to hundreds of TBs |
| Network Pattern | Group communication (AllReduce/AllGather) | Point-to-point message passing | Heavy read/write access |
| Network Requirements | High throughput, ultra-low latency, zero packet loss | Fast response, low jitter, prioritized traffic | High availability, lossless transfer, fast access |

Seven Key Requirements for AI Networking

The AI era is redefining what we need from traditional data center networks. From training trillion-parameter models to serving real-time inference at scale, here’s what it takes:

1. Ultra-High Throughput

- Handle TB/PB-scale data transfers for tasks like parameter synchronization and gradient aggregation.

- Require 400Gbps/800Gbps links and non-blocking backbone networks.

- Example: Training GPT-4 may generate hundreds of TB of data daily.

2. Ultra-Low Latency

- Minimize transfer delays to reduce GPU idle time and shorten job completion times (JCT).

- Demand microsecond-level latency and low jitter for stable performance.

- Example: AllReduce operations need microsecond-level delays to keep GPUs busy.
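
To see why per-hop latency matters so much at scale, here is a sketch using the common latency-plus-bandwidth cost model for a ring all-reduce: the collective takes 2 × (N − 1) steps, and each step pays one hop of latency plus the time to send one chunk. The cluster size, message size, and latency values are hypothetical inputs chosen for illustration; the 800Gbps link speed is the one mentioned in this article.

```python
def ring_allreduce_seconds(n_gpus, message_bytes, link_gbps, hop_latency_s):
    """Estimated ring all-reduce time under a simple latency + bandwidth model."""
    steps = 2 * (n_gpus - 1)                    # reduce-scatter + all-gather
    chunk_bytes = message_bytes / n_gpus        # each step moves one chunk
    bandwidth_bytes_per_s = link_gbps * 1e9 / 8
    return steps * (hop_latency_s + chunk_bytes / bandwidth_bytes_per_s)


# Hypothetical job: 1,024 GPUs syncing 1GB of gradients over 800Gbps links.
fast = ring_allreduce_seconds(1024, 1e9, 800, hop_latency_s=1e-6)   # 1 microsecond
slow = ring_allreduce_seconds(1024, 1e9, 800, hop_latency_s=1e-3)   # 1 millisecond
slowdown = slow / fast
```

In this model the microsecond-latency network finishes in about 0.02 seconds, while the millisecond-latency one takes about 2 seconds for the same data, because the latency is paid on every one of the 2,046 steps.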

3. Lossless Networking

- Prevent retransmissions that disrupt collective communications.

- Use technologies like ECN, PFC, HQoS, and RoCE to eliminate packet loss.

- Example: A single dropped packet can halt thousands of GPUs, causing significant losses.
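
As a simplified sketch of how ECN and PFC work together on a switch queue: ECN marks packets with a probability that rises as the queue fills, so senders slow down early, and PFC pauses the upstream port as a last resort before the buffer overflows. The threshold values and function names below are hypothetical, and real switches implement this in hardware with vendor-specific tuning.

```python
def ecn_mark_probability(queue_kb, kmin_kb, kmax_kb, pmax):
    """Probability of ECN-marking a packet given the current queue depth.

    Below kmin nothing is marked, above kmax everything is marked, and in
    between the probability rises linearly from 0 to pmax.
    """
    if queue_kb <= kmin_kb:
        return 0.0
    if queue_kb >= kmax_kb:
        return 1.0
    return pmax * (queue_kb - kmin_kb) / (kmax_kb - kmin_kb)


def pfc_paused(queue_kb, xoff_kb, xon_kb, paused):
    """Return the new pause state, using XOFF/XON thresholds with hysteresis."""
    if not paused and queue_kb >= xoff_kb:
        return True       # queue too deep: ask upstream to stop sending
    if paused and queue_kb <= xon_kb:
        return False      # queue drained: let traffic resume
    return paused


# Hypothetical thresholds for one priority queue.
KMIN, KMAX, PMAX = 100, 400, 0.2
XOFF, XON = 800, 600

light = ecn_mark_probability(50, KMIN, KMAX, PMAX)
busy = ecn_mark_probability(250, KMIN, KMAX, PMAX)
full = ecn_mark_probability(500, KMIN, KMAX, PMAX)
```

The design idea is that ECN should do most of the work: if the marking thresholds sit well below the PFC pause threshold, senders back off before a pause is ever needed.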

4. Network Monitoring & Telemetry

- Real-time visibility is critical. In-band Network Telemetry (INT) provides hop-by-hop network insights without extra probe traffic.

- Tools like Prometheus + Grafana are widely used for monitoring GPU utilization, bottlenecks, and cluster health.

- Grafana’s dashboards make AI network health both visible and actionable.

5. Smarter Routing: In-band Network Telemetry (INT)-Based Routing

- Leverage real-time telemetry to dynamically adjust routing paths based on current traffic conditions and congestion levels.

- Enable ultra-efficient, low-latency communication tailored for AI workloads.

- Example: INT collects real-time network “traffic” info and reroutes packets through the fastest, congestion-free paths.
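
The idea can be sketched in a few lines: each hop adds a small report to the telemetry, and the sender picks the path whose reports look best. This is a minimal illustration with assumed field names (`queue_depth_kb`, `hop_latency_us`) and a made-up scoring rule, not Asterfusion’s actual routing algorithm.

```python
from dataclasses import dataclass


@dataclass
class HopReport:
    """Hypothetical per-hop telemetry record collected along a path."""
    switch_id: str
    queue_depth_kb: float
    hop_latency_us: float


def path_cost(hops):
    """Score a path by total latency, breaking ties on the deepest queue seen."""
    total_latency = sum(h.hop_latency_us for h in hops)
    worst_queue = max(h.queue_depth_kb for h in hops)
    return (total_latency, worst_queue)


def pick_path(telemetry):
    """Return the name of the path with the lowest cost."""
    return min(telemetry, key=lambda name: path_cost(telemetry[name]))


# Two made-up paths between the same pair of leaf switches.
telemetry = {
    "via-spine-1": [
        HopReport("leaf-1", 40, 1.2),
        HopReport("spine-1", 900, 8.5),   # congested spine
        HopReport("leaf-4", 35, 1.1),
    ],
    "via-spine-2": [
        HopReport("leaf-1", 40, 1.2),
        HopReport("spine-2", 60, 1.4),
        HopReport("leaf-4", 35, 1.1),
    ],
}
best = pick_path(telemetry)
```

Here the second path wins because its spine hop reports a shallow queue and low latency, so traffic steers around the “traffic jam” on the first spine.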

6. Linear Scalability

- Scale from tens to tens of thousands of GPUs without performance degradation.

- Use topologies like Fat-Tree or Dragonfly to support massive clusters.

- Example: A 10,000-GPU cluster needs a network like an “infinite highway.”
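
For a sense of how far a Fat-Tree scales, here is a sizing sketch using the standard formulas for a non-blocking fabric built from identical k-port switches. The 64-port figure is an assumption derived from numbers in this article (a 51.2T switch divided into 800Gbps ports); actual deployments often use different port speeds or oversubscription.

```python
def fat_tree_capacity(k):
    """Hosts and switches in a classic three-tier k-ary fat-tree (k even)."""
    if k % 2:
        raise ValueError("k must be even")
    hosts = k ** 3 // 4          # k pods x (k/2 edge switches) x (k/2 hosts each)
    edge = agg = k * k // 2      # k pods, k/2 switches per tier in each pod
    core = (k // 2) ** 2
    return {"hosts": hosts, "switches": edge + agg + core}


def leaf_spine_capacity(k):
    """Hosts in a non-blocking two-tier leaf-spine built from k-port switches."""
    return k * k // 2            # k leaves, each with k/2 host-facing ports


PORTS = 64                       # assumed: 51.2T / 800G per port
two_tier_hosts = leaf_spine_capacity(PORTS)
three_tier = fat_tree_capacity(PORTS)
```

With 64-port switches, two tiers top out at 2,048 endpoints, while three tiers reach 65,536 endpoints using 5,120 switches. A 10,000-GPU cluster therefore needs the third tier if the fabric is to stay non-blocking.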

7. Interoperability and Openness

- Support multiple protocols (Ethernet, Ultra Ethernet, RoCE, TCP/IP) and vendor equipment.

- Enable open, heterogeneous AI platforms.

- Example: Like a universal adapter, it works with any device.

Network Architecture for AI Data Centers: InfiniBand and Ethernet with RoCE

When it comes to RoCE and InfiniBand technology research, Asterfusion is truly an expert. We’ve published numerous in-depth articles and even tested our own AI switches alongside InfiniBand to draw meaningful conclusions. If you’re looking for the most comprehensive technical comparison between RoCE and IB available online, don’t miss our two-part series — it’s been shared over 700 times:

| Layer | RoCEv2 Features | RoCEv2 Score | InfiniBand Features | InfiniBand Score |
| --- | --- | --- | --- | --- |
| Physical Layer | Fiber/Copper, QSFP/OSFP, PAM4, 64/66b | ★★★★★ | Fiber/Copper, QSFP/OSFP, NRZ, 64/66b | ★★★★☆ |
| Link Layer | Ethernet, PFC, ETS | ★★★★☆ | IB Link Layer, credit-based flow control, SL + VL | ★★★★★ |
| Network Layer | IPv4/IPv6/SRv6, BGP/OSPF | ★★★★★ | IB Network Layer, Subnet Manager | ★★★★☆ |
| Transport Layer | IB Transport Layer | ★★★★★ | IB Transport Layer | ★★★★★ |
| Congestion Control | PFC, ECN, DCQCN | ★★★★☆ | Credit-based flow control, ECN, vendor-specific algorithm | ★★★★☆ |
| QoS | ETS, DSCP | ★★★★★ | SL + VL, Traffic Class | ★★★★☆ |
| ECMP | Hash-based load balancing, round-robin, QP-aware | ★★★★★ | Hash-based load balancing, round-robin | ★★★★★ |

- RoCE Or InfiniBand? The Most Comprehensive Technical Comparison

- RoCE Or InfiniBand? The Most Comprehensive Technical Comparison (II)

And if you’re interested in real-world results, this test report is one of the most compelling case studies on how RoCE performs in AI inference networks—definitely worth a read:

👉 RoCE Beats InfiniBand: Asterfusion 800G Switch Test Report