Asterfusion Ultra-Low Latency Switches: Ceph Cluster Deployment & OpenStack Integration

Written by Asterfusion


Ceph is a very popular and widely used software-defined storage (SDS) solution that provides object, block, and file storage at the same time. This article shows how to build a network with Asterfusion CX-N series ultra-low latency switches to carry a Ceph storage cluster, and how to integrate Ceph with OpenStack as its storage backend.

Before cloud technology became widespread, the common storage solutions in the IT industry were direct-attached storage (DAS), storage area networks (SAN), and network-attached storage (NAS). Each of these three traditional solutions has shortcomings in price, performance, scalability, deployment, or operation and maintenance within its application scenarios, so there is no one-size-fits-all storage solution. In production environments, we usually have to make trade-offs based on data size, performance requirements, budget, and application scenario. Ceph emerged as a compromise that addresses these problems.

What is Ceph?

Ceph is a very popular open source distributed storage system known for its high scalability, high performance, and high reliability. In the storage field, Ceph has been adopted across industries such as telecom operators, finance, education, electric power, large enterprises, and the Internet. Compared with traditional storage arrays, Ceph offers many advantages, including virtually "unlimited" capacity expansion, performance that scales linearly with capacity, low construction cost, and strong manageability. However, large-scale distributed storage clusters still face two prominent problems: high I/O latency and weak data consistency.

Ceph Storage Cluster Deployment: Asterfusion CX-N Low Latency Switches as an Example

In this article, we use Asterfusion CX-N series ultra-low latency switches, specifically the CX308P-48Y-N model, to build the network for a 3-node storage cluster. This 1U switch provides 48 × 25G/10G SFP28 ports and 8 × 100G/40G QSFP28 ports, with a switching capacity of up to 2.0Tbps.
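As a quick sanity check on these port figures, the aggregate front-panel bandwidth at maximum port speed can be computed directly. This is a simple arithmetic sketch that counts each port once, in one direction; under that assumption it agrees with the 2.0Tbps figure quoted above.

```python
from typing import Dict, Tuple

# Port mix of the CX308P-48Y-N as described above: (port count, Gb/s per port at max speed).
PORT_GROUPS: Dict[str, Tuple[int, int]] = {
    "SFP28": (48, 25),
    "QSFP28": (8, 100),
}

def aggregate_gbps(groups: Dict[str, Tuple[int, int]]) -> int:
    """Sum count * speed over all port groups, in Gb/s (one direction)."""
    return sum(count * speed for count, speed in groups.values())

total = aggregate_gbps(PORT_GROUPS)
print(f"{total} Gb/s = {total / 1000:.1f} Tb/s")  # prints: 2000 Gb/s = 2.0 Tb/s
```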

With the ultra-low latency of Asterfusion CX-N series switches, a distributed storage cluster can achieve significantly better latency and IOPS.

About Asterfusion CX-N Ultra-Low Latency Switches

The CX-N series ultra-low latency switches are developed in-house by Asterfusion for data center networks. They provide high-performance network services for cloud data center scenarios such as computing clusters, storage clusters, big data analytics, high-frequency trading, and cloud OS integration.

01 Storage Cluster Component Introduction

This deployment uses the stable Ceph release Nautilus 14.2.9, whose components provide the following functions:

- MON (Monitor): The control service. It maintains the cluster maps and provides authentication services. Required.

- OSD (Object Storage Daemon): Stores data and handles replication, recovery, backfilling, and rebalancing. Required.

- MGR (Manager): The cluster monitoring service. It tracks metrics for the whole cluster and exposes external interfaces. Required.

- MDS (Metadata Server): Stores and maintains metadata for CephFS; deployed only when CephFS is used. Optional.

- RGW (RADOS Gateway): The object storage gateway, providing RESTful access interfaces compatible with S3 and Swift. Optional.

A minimal Ceph cluster must contain at least one MON, one MGR, and two OSDs; otherwise, the cluster reports an unhealthy state. Because the monitors elect a leader and need a majority to form a quorum, an odd number of MONs is preferred, and three MONs are officially recommended for high availability in production. Two OSDs satisfy the two-replica mechanism that keeps the data safe.
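The majority rule behind the odd-MON recommendation, and the cost of keeping two copies of every object, can be worked through in a few lines. This is an illustrative sketch of the arithmetic only (simple majority quorum, plain replication), not Ceph code; the capacity helper ignores Ceph's nearfull/full safety margins.

```python
from typing import Tuple

def mon_quorum(n_mons: int) -> Tuple[int, int]:
    """Return (MONs needed for a majority quorum, MON failures the cluster survives)."""
    if n_mons < 1:
        raise ValueError("a Ceph cluster needs at least one MON")
    majority = n_mons // 2 + 1
    return majority, n_mons - majority

def usable_capacity(raw_tb: float, replicas: int) -> float:
    """Usable capacity of a replicated pool: every object is stored `replicas` times."""
    return raw_tb / replicas

for n in range(1, 6):
    majority, tolerated = mon_quorum(n)
    print(f"{n} MON(s): quorum needs {majority}, tolerates {tolerated} failure(s)")
```

Running it shows that 3 and 4 MONs both tolerate only one failure, which is why the extra even-numbered MON buys nothing. Likewise, two hypothetical 4 TB OSDs with two replicas yield `usable_capacity(8.0, 2) == 4.0` TB.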

02 Environment Description and Deployment Preparation

(1) Server operating system and cluster version:

- Ceph: Nautilus 14.2.9 (stable release)

- Operating system: Red Hat Enterprise Linux Server 7.6 (Maipo)

(2) Node configuration and network architecture

The table above shows the configuration of the three nodes in the cluster. As officially recommended, using two separate networks, a business network (front end) and a storage network (back end), can significantly improve the performance of the Ceph cluster. In Ceph's configuration these are called the public network and the cluster network.

Therefore, each node must be equipped with at least two physical NICs.
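The two-network layout maps onto two real Ceph options, `public_network` and `cluster_network`, in the `[global]` section of `ceph.conf`. The sketch below renders only that fragment and checks that each node's addresses fall inside the right subnet. The subnets and per-node IPs are hypothetical, since the article does not publish its addressing plan; a complete `ceph.conf` also needs settings such as `fsid` and the monitor addresses.

```python
import configparser
import io
import ipaddress

# Hypothetical subnets: replace with the real business and storage networks.
PUBLIC_NET = ipaddress.ip_network("192.168.10.0/24")   # business network (front end)
CLUSTER_NET = ipaddress.ip_network("192.168.20.0/24")  # storage network (back end)

# Hypothetical per-node addresses: (front-end IP, back-end IP).
NODES = {
    "node-01": ("192.168.10.11", "192.168.20.11"),
    "node-02": ("192.168.10.12", "192.168.20.12"),
    "node-03": ("192.168.10.13", "192.168.20.13"),
}

def check_nodes(nodes):
    """Raise ValueError if any node address is outside its expected subnet."""
    for name, (front, back) in nodes.items():
        if ipaddress.ip_address(front) not in PUBLIC_NET:
            raise ValueError(f"{name}: {front} is not in {PUBLIC_NET}")
        if ipaddress.ip_address(back) not in CLUSTER_NET:
            raise ValueError(f"{name}: {back} is not in {CLUSTER_NET}")

def render_global_section() -> str:
    """Render a [global] fragment holding Ceph's two network options."""
    cfg = configparser.ConfigParser()
    cfg["global"] = {
        "public_network": str(PUBLIC_NET),
        "cluster_network": str(CLUSTER_NET),
    }
    buf = io.StringIO()
    cfg.write(buf)
    return buf.getvalue()

check_nodes(NODES)
print(render_global_section())
```

Client and MON traffic then uses the front-end NIC, while OSD replication and recovery traffic moves to the back-end NIC.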

(3) Network environment preparation before deployment

Following the topology in the figure above, use 25G optical modules to connect each server to the CX-N ultra-low latency switches that carry the business network and the storage network.

This validation setup requires two Layer 2 networks. No additional configuration is needed after the switches are powered on: once the servers are connected, simply confirm that each port is up and that the link speed has negotiated correctly.
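The "port is up and speed negotiated correctly" check can also be done from the server side. On Linux, each NIC exposes `operstate` and `speed` (in Mb/s) under `/sys/class/net/<iface>/`. The sketch below reads those files and reports anything that is not up at 25G. The interface names `ens1f0` and `ens1f1` are hypothetical; use the names of the business and storage NICs on your nodes.

```python
from pathlib import Path
from typing import List, Optional, Tuple

def link_status(iface: str, sysfs_root: str = "/sys/class/net") -> Tuple[str, Optional[int]]:
    """Read operstate and negotiated speed (Mb/s) of one NIC from sysfs."""
    base = Path(sysfs_root) / iface
    try:
        state = (base / "operstate").read_text().strip()
    except FileNotFoundError:
        return "missing", None
    try:
        speed: Optional[int] = int((base / "speed").read_text().strip())
    except (OSError, ValueError):
        speed = None  # many drivers refuse to report speed while the link is down
    if speed is not None and speed < 0:
        speed = None  # some drivers report -1 for an unknown speed
    return state, speed

def check_links(ifaces: List[str], expected_mbps: int = 25000,
                sysfs_root: str = "/sys/class/net") -> List[str]:
    """Return human-readable problems; an empty list means every link is up at the expected speed."""
    problems = []
    for iface in ifaces:
        state, speed = link_status(iface, sysfs_root)
        if state != "up":
            problems.append(f"{iface}: operstate is {state!r}")
        elif speed != expected_mbps:
            problems.append(f"{iface}: negotiated {speed} Mb/s, expected {expected_mbps}")
    return problems

if __name__ == "__main__":
    # Hypothetical interface names for the business and storage NICs.
    issues = check_links(["ens1f0", "ens1f1"])
    print("\n".join(issues) if issues else "all links up at 25G")
```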

(4) System environment preparation before deployment

Perform the following steps on every node; node-01 is used as the example throughout. The steps include disabling SELinux, disabling the firewall, configuring IP addresses, configuring host names, and configuring YUM repositories that point to local (domestic) mirrors.
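Three of these steps can be sketched as follows: rewriting `/etc/selinux/config`, building `/etc/hosts` entries so the nodes resolve each other by name, and listing the standard RHEL 7 commands for SELinux, firewalld, and the host name. The commands are only printed, not executed. The IP addresses are hypothetical, and the YUM mirror and NIC addressing steps are left out because the article does not give their details.

```python
import re
import shlex
from typing import Dict, List

# Hypothetical addressing plan; the article does not publish its IPs.
NODES: Dict[str, str] = {
    "node-01": "192.168.10.11",
    "node-02": "192.168.10.12",
    "node-03": "192.168.10.13",
}

def disable_selinux(config_text: str) -> str:
    """Return /etc/selinux/config content with SELINUX=disabled (takes effect after a reboot)."""
    new_text, count = re.subn(r"(?m)^SELINUX=.*$", "SELINUX=disabled", config_text)
    if count == 0:  # no SELINUX= line present: append one
        new_text = config_text.rstrip("\n") + "\nSELINUX=disabled\n"
    return new_text

def hosts_entries(nodes: Dict[str, str]) -> str:
    """Lines to append to /etc/hosts on every node."""
    return "".join(f"{ip}\t{name}\n" for name, ip in nodes.items())

def prep_commands(hostname: str) -> List[List[str]]:
    """Commands to run on one node (printed here, not executed)."""
    return [
        ["setenforce", "0"],                    # SELinux permissive until the reboot
        ["systemctl", "stop", "firewalld"],     # stop the firewall now
        ["systemctl", "disable", "firewalld"],  # and keep it off after reboots
        ["hostnamectl", "set-hostname", hostname],
    ]

print(disable_selinux("SELINUX=enforcing\nSELINUXTYPE=targeted\n"), end="")
print(hosts_entries(NODES), end="")
for cmd in prep_commands("node-01"):
    print(shlex.join(cmd))
```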

03 Installation Steps

First, install the Ceph packages and their dependencies on all nodes in the cluster. The detailed installation then proceeds through single-node deployment, OSD node expansion, MON node expansion, MGR service configuration, and MDS service configuration.
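The article does not name its deployment tool. Assuming `ceph-deploy` 2.x, the usual tool for Nautilus on RHEL 7, the five stages above could be ordered as the dry-run plan below, which only prints the commands. The host names match the article's nodes, but the data disk `/dev/sdb` on each node is a hypothetical example.

```python
import shlex
from typing import Dict, List

# Assumption: ceph-deploy 2.x run from an admin node; disk paths are hypothetical.
MONS = ["node-01", "node-02", "node-03"]
OSD_DISKS: Dict[str, str] = {"node-01": "/dev/sdb", "node-02": "/dev/sdb", "node-03": "/dev/sdb"}

def deployment_plan(mons: List[str], osd_disks: Dict[str, str], mds_node: str) -> List[List[str]]:
    """Order the ceph-deploy calls to follow the installation stages described above."""
    first = mons[0]
    hosts = list(osd_disks)
    plan = [
        # Stage 1: single-node deployment on the first MON host
        ["ceph-deploy", "new", first],
        ["ceph-deploy", "install", "--release", "nautilus", *hosts],
        ["ceph-deploy", "mon", "create-initial"],
        ["ceph-deploy", "admin", *hosts],
    ]
    # Stage 2: expand OSDs, one per data disk
    plan += [["ceph-deploy", "osd", "create", "--data", disk, host]
             for host, disk in osd_disks.items()]
    # Stage 3: expand MONs to reach an odd quorum of three
    plan += [["ceph-deploy", "mon", "add", host] for host in mons[1:]]
    # Stage 4: MGR daemons (one active, the others standby)
    plan.append(["ceph-deploy", "mgr", "create", *mons])
    # Stage 5: MDS, only needed if CephFS will be used
    plan.append(["ceph-deploy", "mds", "create", mds_node])
    return plan

for cmd in deployment_plan(MONS, OSD_DISKS, mds_node="node-01"):
    print(shlex.join(cmd))
```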

04 Commonly Used Monitoring Solutions

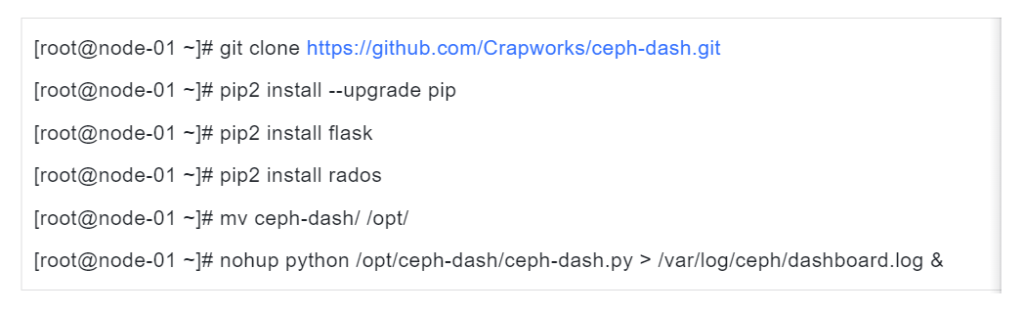

Install the Ceph-Dash monitoring solution on the configured Ceph storage cluster.

(1) Ceph-Dash

Ceph-Dash is an open source project built on the Python Flask framework, with a clean, simple interface and a pleasant visual style.

It presents relatively little information, since it only visualizes the output of the ceph status command, but its simple framework and interface structure make it easy to learn and to extend. Deploy it on one MON node or on all MON nodes:

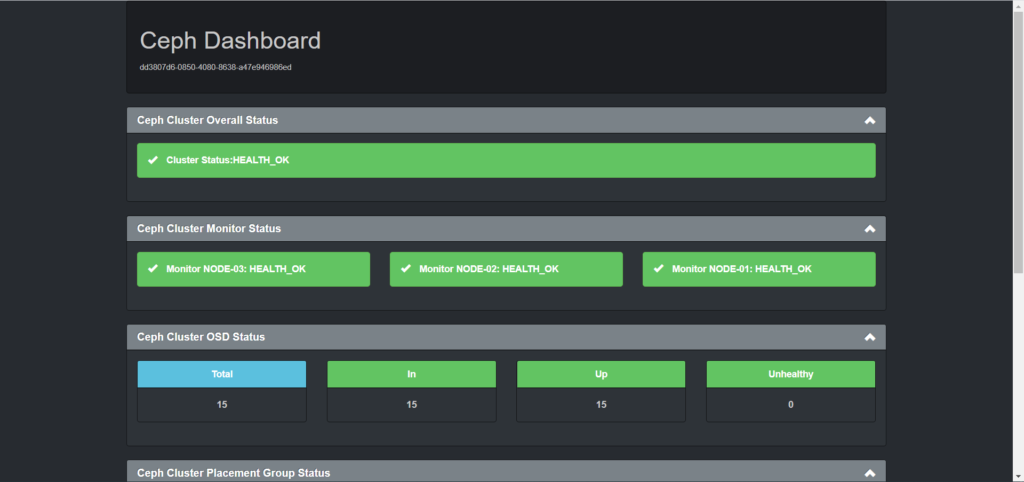

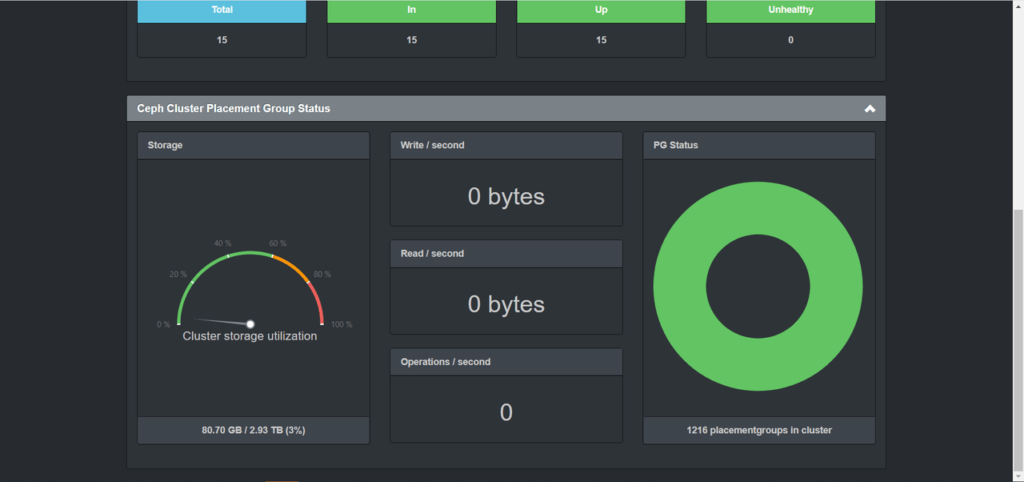
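Because Ceph-Dash essentially visualizes `ceph status`, the same headline information can be pulled from the JSON form of that command. The sketch below is not Ceph-Dash's code; it calls `ceph status --format json` on a node with an admin keyring and extracts the health status, the active health check codes, and the MON quorum. The `SAMPLE` dictionary is a trimmed, hypothetical status used for illustration.

```python
import json
import subprocess

def fetch_status() -> dict:
    """Run 'ceph status --format json' (needs a Ceph client and keyring) and parse it."""
    out = subprocess.run(["ceph", "status", "--format", "json"],
                         check=True, capture_output=True, text=True).stdout
    return json.loads(out)

def summarize(status: dict) -> dict:
    """Pick out overall health, active health check codes, and the MON quorum."""
    health = status.get("health", {})
    return {
        "health": health.get("status", "UNKNOWN"),
        "checks": sorted(health.get("checks", {})),
        "quorum": status.get("quorum_names", []),
    }

# Hypothetical, heavily trimmed status: one MON is down, so two remain in quorum.
SAMPLE = {
    "health": {"status": "HEALTH_WARN", "checks": {"MON_DOWN": {"severity": "HEALTH_WARN"}}},
    "quorum_names": ["node-01", "node-02"],
}
print(summarize(SAMPLE))
```

On a live cluster, `summarize(fetch_status())` returns the same shape of result.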

After successful startup, the monitoring interface is as follows:

(2) MGR Dashboard

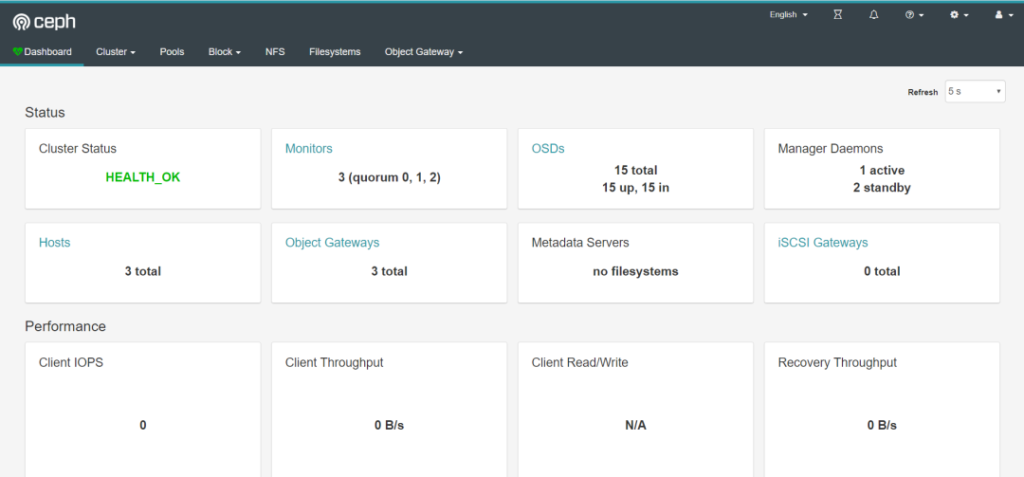

Newer Ceph releases integrate a Dashboard by default as a module of the Manager (MGR) daemon. You only need to enable the module, configure the address and port it listens on, and log in with the username and password shown in the command-line terminal.

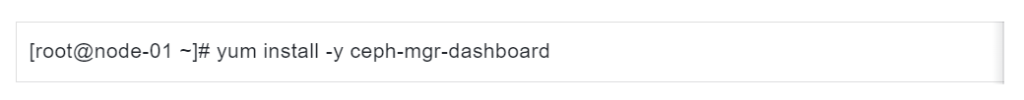

Install the corresponding software package on all MGR nodes in the cluster:

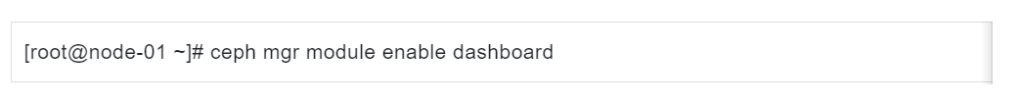

On any MON node in the cluster, enable the Dashboard module:

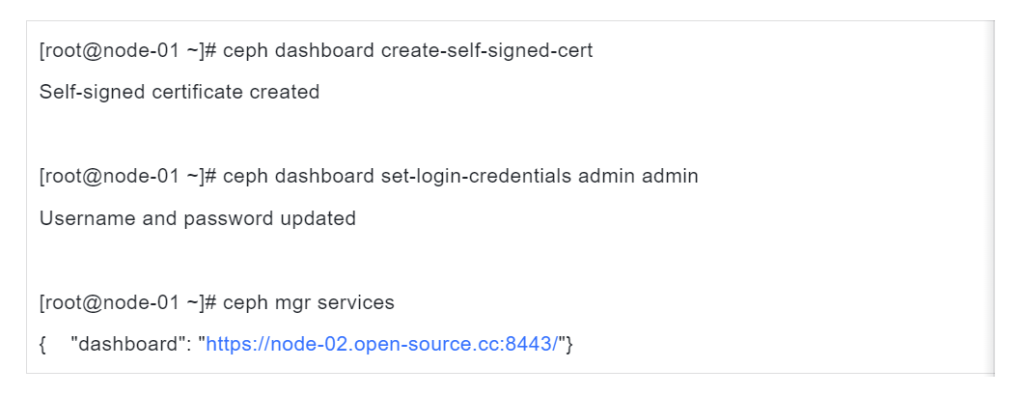

Configure a self-signed certificate, then set up the username and password used to log in to the web interface.
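The package, enable, certificate, and account steps above correspond to the commands listed in this dry-run sketch, which prints them rather than running them. The address, port, and credentials are hypothetical. The `ac-user-create` form with an inline password follows early Nautilus releases such as 14.2.9; newer releases read the password from a file with `-i`, so check the documentation for your exact version.

```python
import shlex
from typing import List

def dashboard_commands(mgr_addr: str, user: str, password: str,
                       ssl_port: int = 8443) -> List[List[str]]:
    """Commands for enabling the MGR Dashboard (printed, not executed)."""
    return [
        # On every MGR node: the dashboard ships as a separate package
        ["yum", "install", "-y", "ceph-mgr-dashboard"],
        # On a MON node with the admin keyring:
        ["ceph", "mgr", "module", "enable", "dashboard"],
        ["ceph", "dashboard", "create-self-signed-cert"],
        ["ceph", "config", "set", "mgr", "mgr/dashboard/server_addr", mgr_addr],
        ["ceph", "config", "set", "mgr", "mgr/dashboard/ssl_server_port", str(ssl_port)],
        # Early-Nautilus syntax (password inline); newer releases use -i <file>
        ["ceph", "dashboard", "ac-user-create", user, password, "administrator"],
        # Prints the URL the active MGR serves the dashboard on
        ["ceph", "mgr", "services"],
    ]

# Hypothetical address and credentials.
for cmd in dashboard_commands("192.168.10.11", "admin", "ChangeMe123"):
    print(shlex.join(cmd))
```

If the address or port is changed after the module is already running, disabling and re-enabling the module makes the active MGR pick up the new settings.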

Log in using the username and password as prompted. The interface is as follows:

05 Connecting Ceph Storage to the OpenStack Open Source Cloud Platform

OpenStack is an open source cloud computing management platform, originally developed jointly by NASA and Rackspace and released under the Apache License. According to statistics, more than 75% of enterprises use OpenStack to manage large amounts of hardware resources in their data centers. In this solution, Ceph serves as the back-end storage for OpenStack, which offers the following advantages over traditional centralized storage:

- Compute nodes share storage, so instances run reliably and can be migrated quickly between nodes.

- A single unified platform provides multiple storage types, reducing deployment complexity and workload, and it can be expanded flexibly at runtime.

- The copy-on-write (COW) feature lets the cloud platform create instances in seconds.
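Why copy-on-write makes instance creation fast can be shown with a toy model: a clone keeps only a reference to its parent and stores just the blocks it later overwrites, so creating it copies no data. This is a simplified illustration of the idea, not how Ceph RBD layering is implemented (real RBD clones are made from a protected snapshot of the parent image).

```python
class CowImage:
    """Toy copy-on-write image: a clone shares its parent's blocks until it writes its own."""

    def __init__(self, blocks=None, parent=None):
        self.own = dict(blocks or {})  # block index -> data written to this image
        self.parent = parent

    def clone(self) -> "CowImage":
        # Constant time: no blocks are copied, only a parent reference is kept
        return CowImage(parent=self)

    def read(self, idx):
        if idx in self.own:
            return self.own[idx]
        return self.parent.read(idx) if self.parent else None

    def write(self, idx, data):
        self.own[idx] = data  # only the written block is stored in this image

base = CowImage({i: f"os-block-{i}" for i in range(1000)})  # hypothetical 1000-block base image
vm = base.clone()
vm.write(7, "vm-data")
print(len(vm.own), vm.read(7), vm.read(8), base.read(7))
# prints: 1 vm-data os-block-8 os-block-7
```

The clone holds one block of its own and reads everything else from the base image, which stays unchanged.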

(1) Experimental environment and integration steps

Register at https://switchicea.com/ or email bd@cloudswit.ch with the keyword "Ceph" to obtain the complete white paper "Ceph Storage Docking with OpenStack Open Source Cloud Platform".

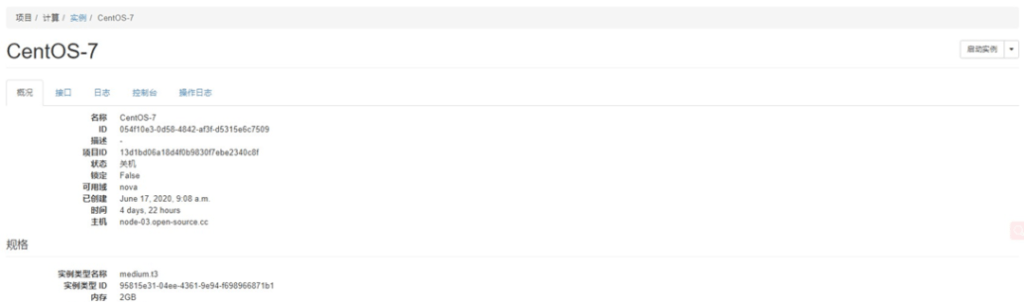

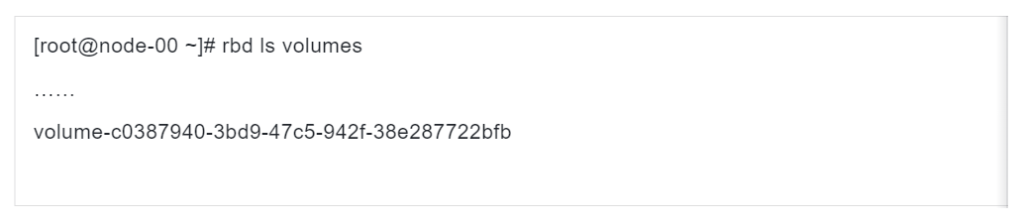
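The full integration steps are in the white paper. For orientation, this sketch renders the kind of configuration fragments Glance, Cinder, and Nova typically need to use RBD. The option names follow the Ceph "Block Devices and OpenStack" guide. The pool names (`images`, `volumes`, `vms`), cephx users (`glance`, `cinder`), and the libvirt secret UUID are assumptions taken from that guide's conventions or invented placeholders, and the pools and users are assumed to exist already.

```python
import configparser
import io

# Hypothetical libvirt secret UUID holding the client.cinder key on compute nodes.
SECRET_UUID = "00000000-0000-0000-0000-000000000000"

# Assumed pool/user names, following the Ceph "Block Devices and OpenStack" guide.
FRAGMENTS = {
    "glance-api.conf": {
        "DEFAULT": {"show_image_direct_url": "True"},  # lets clones reference the image
        "glance_store": {
            "stores": "rbd",
            "default_store": "rbd",
            "rbd_store_pool": "images",
            "rbd_store_user": "glance",
            "rbd_store_ceph_conf": "/etc/ceph/ceph.conf",
        },
    },
    "cinder.conf": {
        "DEFAULT": {"enabled_backends": "ceph"},
        "ceph": {
            "volume_driver": "cinder.volume.drivers.rbd.RBDDriver",
            "volume_backend_name": "ceph",
            "rbd_pool": "volumes",
            "rbd_ceph_conf": "/etc/ceph/ceph.conf",
            "rbd_user": "cinder",
            "rbd_secret_uuid": SECRET_UUID,
        },
    },
    "nova.conf": {
        "libvirt": {
            "images_type": "rbd",
            "images_rbd_pool": "vms",
            "images_rbd_ceph_conf": "/etc/ceph/ceph.conf",
            "rbd_user": "cinder",
            "rbd_secret_uuid": SECRET_UUID,
        },
    },
}

def render(fragment: dict) -> str:
    """Render one service's fragment in INI form."""
    cfg = configparser.ConfigParser()
    cfg.read_dict(fragment)
    buf = io.StringIO()
    cfg.write(buf)
    return buf.getvalue()

for filename, fragment in FRAGMENTS.items():
    print(f"# --- {filename} ---")
    print(render(fragment))
```

These are fragments to merge into each service's existing configuration, not complete files.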

(2) Validating the Results of Connecting Ceph to OpenStack

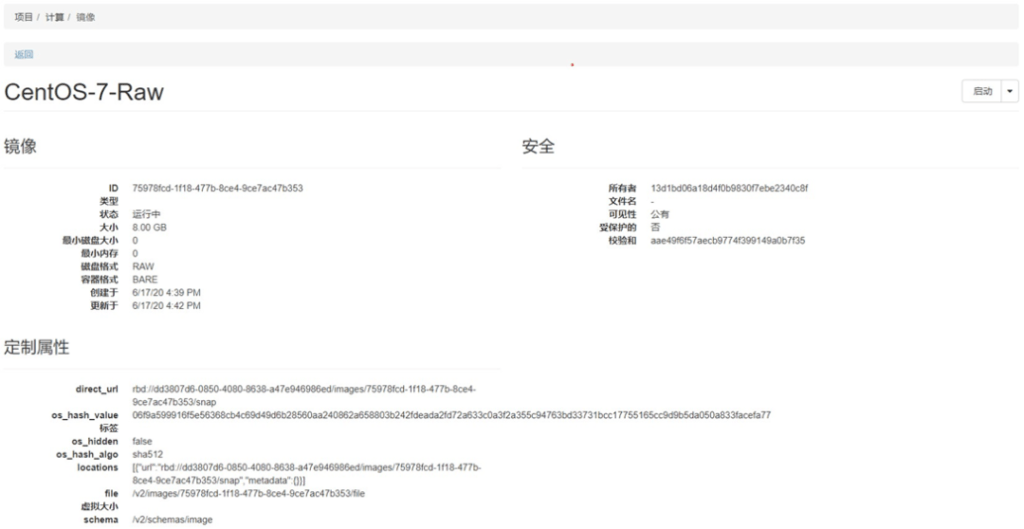

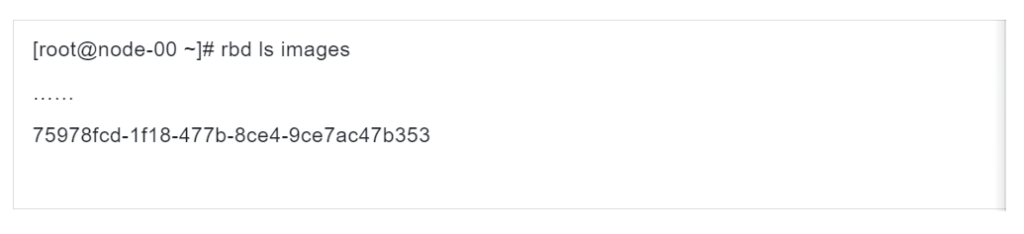

- Glance:

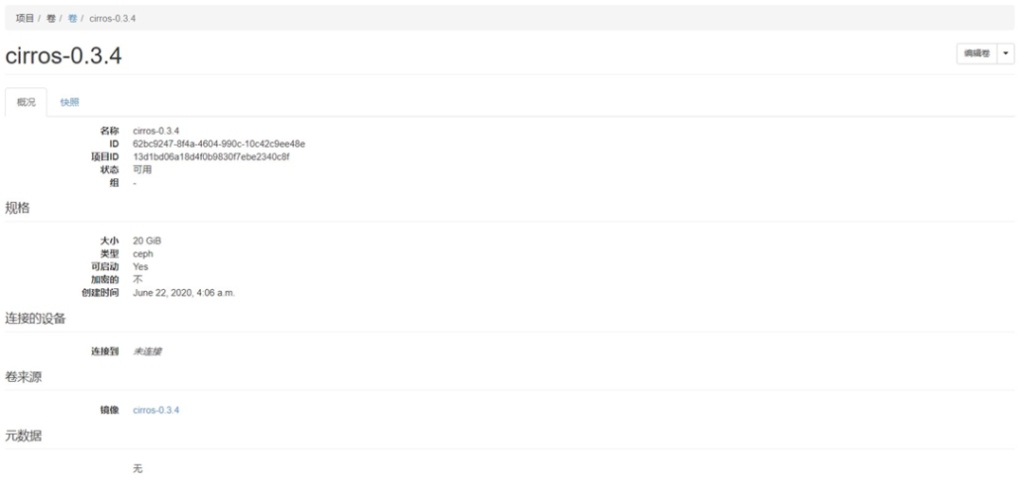

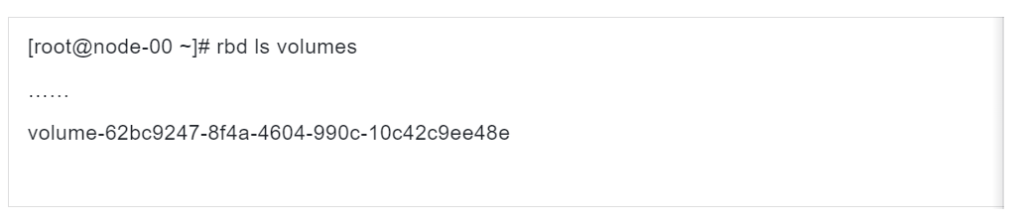

- Cinder:

- Nova:
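One way to cross-check the Glance, Cinder, and Nova results is to confirm that the corresponding RBD images exist in Ceph. Glance stores an image under its image ID, the Cinder RBD driver names volumes `volume-<id>`, and Nova names an instance's ephemeral root disk `<instance-id>_disk`. The sketch compares those expected names with `rbd ls <pool>` output. The pool names follow the same assumed conventions as the configuration sketch, and the IDs are hypothetical.

```python
import subprocess
from typing import Dict, Iterable, List, Set

def expected_rbd_names(image_ids: Iterable[str], volume_ids: Iterable[str],
                       instance_ids: Iterable[str]) -> Dict[str, Set[str]]:
    """RBD image names each service should have created, keyed by (assumed) pool name."""
    return {
        "images": set(image_ids),                        # Glance: named by image ID
        "volumes": {f"volume-{v}" for v in volume_ids},  # Cinder RBD driver
        "vms": {f"{u}_disk" for u in instance_ids},      # Nova ephemeral root disk
    }

def rbd_ls(pool: str) -> str:
    """Raw output of 'rbd ls <pool>' (needs a Ceph client and keyring)."""
    return subprocess.run(["rbd", "ls", pool], check=True,
                          capture_output=True, text=True).stdout

def missing_images(expected: Dict[str, Set[str]], listings: Dict[str, str]) -> Dict[str, List[str]]:
    """Compare expected names with 'rbd ls' output; return what is missing, per pool."""
    missing = {}
    for pool, names in expected.items():
        present = set(listings.get(pool, "").split())
        absent = names - present
        if absent:
            missing[pool] = sorted(absent)
    return missing

# Hypothetical IDs, as copied from 'openstack image list', 'openstack volume list'
# and 'openstack server list'.
expected = expected_rbd_names(["a1"], ["b2"], ["c3"])
listings = {"images": "a1\n", "volumes": "volume-b2\n", "vms": ""}
print(missing_images(expected, listings))  # prints: {'vms': ['c3_disk']}
```

On a live cluster, build `listings` with `{pool: rbd_ls(pool) for pool in expected}`. Instances booted from a Cinder volume have no `_disk` image in the `vms` pool, so include only image-backed instances in `instance_ids`.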


For more about Asterfusion Teralynx-based ultra-low latency switches, please visit: