Asterfusion’s Distributed Storage Network Solution Boosts Public Cloud for the World’s Leading ISP

written by Asterfusion


As digitization accelerates, the cloud service market has grown steadily in recent years. Workloads such as big data, NoSQL databases, AIGC, online gaming, and 3D rendering all demand higher IOPS and lower latency from the storage network.

China Telecom, a leading global ISP, serves more than 2 million public cloud customers. Asterfusion low-latency Ethernet switches (CX664D-N) will soon be deployed at scale to provide it with a resilient and reliable network.

A Single 200G Whitebox Switch Model Builds a Large-Scale Storage Network

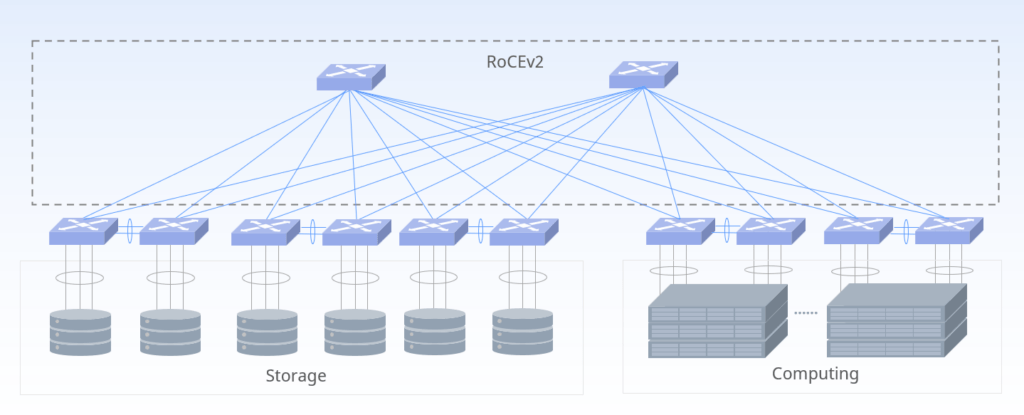

Based on a standard Clos architecture, Asterfusion’s distributed storage network solution shortens the communication paths between servers, reducing latency and improving application and service performance. The simple leaf/spine design is also easy to operate and maintain (O&M).
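To make the path-length point concrete, here is a minimal Python sketch, assuming an idealized two-tier leaf/spine Clos in which every leaf connects to every spine. The topology sizes and switch names are hypothetical. It counts how many switches a packet crosses between servers on two leaves and how many equal-cost paths exist between them.

```python
from collections import deque
from itertools import product


def build_leaf_spine(num_leaves: int, num_spines: int) -> dict[str, set[str]]:
    """Adjacency map of a two-tier Clos: every leaf links to every spine."""
    graph: dict[str, set[str]] = {}
    for leaf, spine in product(range(num_leaves), range(num_spines)):
        leaf_name, spine_name = f"leaf{leaf}", f"spine{spine}"
        graph.setdefault(leaf_name, set()).add(spine_name)
        graph.setdefault(spine_name, set()).add(leaf_name)
    return graph


def switch_hops(graph: dict[str, set[str]], src_leaf: str, dst_leaf: str) -> int:
    """Switches crossed between a server on src_leaf and one on dst_leaf."""
    # Breadth-first search gives the shortest link count; switches = links + 1.
    dist = {src_leaf: 0}
    queue = deque([src_leaf])
    while queue:
        node = queue.popleft()
        if node == dst_leaf:
            return dist[node] + 1
        for neighbor in graph[node]:
            if neighbor not in dist:
                dist[neighbor] = dist[node] + 1
                queue.append(neighbor)
    raise ValueError(f"{dst_leaf} is unreachable from {src_leaf}")


def equal_cost_paths(graph: dict[str, set[str]], src_leaf: str, dst_leaf: str) -> int:
    """Shortest leaf-spine-leaf paths between two different leaves (one per shared spine)."""
    return len(graph[src_leaf] & graph[dst_leaf])


fabric = build_leaf_spine(num_leaves=8, num_spines=4)  # hypothetical fabric size
print(switch_hops(fabric, "leaf0", "leaf7"), equal_cost_paths(fabric, "leaf0", "leaf7"))  # 3 4
```

For any two different leaves the answer is always three switches (leaf, spine, leaf), and the number of equal-cost paths equals the number of spines, which is what lets ECMP spread traffic across the fabric.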

The Asterfusion CX664P-N is a data center switch designed for leaf/spine architectures, with Enterprise SONiC pre-installed. It provides high-density 200G/100G-compatible ports for future network expansion, as well as two 10G ports for extended access.

The CX664P-N delivers low latency and up to 12.8 Tbps of line-rate L2/L3 switching capacity on a Marvell Teralynx ASIC. It supports RoCEv2 and EVPN Multi-homing, making it an affordable and efficient open network switch for data center fabrics.
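As a rough sizing illustration, the sketch below assumes the 12.8 Tbps figure corresponds to 64 ports of 200G (12.8 Tbps / 200 Gbps), ignores the two 10G ports and any port breakout, and assumes each leaf sends exactly one uplink to every spine. These are simplifying assumptions for illustration, not a published Asterfusion design.

```python
def ports_from_capacity(capacity_tbps: float, port_gbps: int) -> int:
    """Number of ports implied by an aggregate capacity (assumes one port speed)."""
    return round(capacity_tbps * 1000 / port_gbps)


def two_tier_fabric(ports_per_switch: int, oversubscription: float = 1.0) -> dict[str, int]:
    """Size a two-tier leaf/spine fabric built from one switch model.

    oversubscription is the leaf's downlink:uplink ratio (1.0 = non-blocking).
    Assumes each leaf sends exactly one uplink to every spine.
    """
    uplinks = int(ports_per_switch / (1 + oversubscription))
    downlinks = ports_per_switch - uplinks
    spines = uplinks                # one spine per leaf uplink
    leaves = ports_per_switch       # each spine port terminates one leaf
    return {"spines": spines, "leaves": leaves, "server_ports": leaves * downlinks}


ports = ports_from_capacity(12.8, 200)  # 64 under the 64 x 200G assumption
print(ports, two_tier_fabric(ports), two_tier_fabric(ports, oversubscription=3.0))
```

Under these assumptions, a non-blocking (1:1) fabric built from a single 64-port model tops out at 32 spines, 64 leaves, and 2,048 server-facing 200G ports; accepting 3:1 oversubscription at the leaves raises that to 3,072.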

What is RDMA, and Why Is Packet Loss Rate Essential?

Remote Direct Memory Access (RDMA) lets one host read or write another host’s memory directly, without involving the remote CPU or the operating system kernel in the data path. This makes it especially suitable for SANs and other large-scale computing cluster networks, such as AI/ML and HPC. It is estimated that using RDMA instead of TCP/IP increases the I/O speed of SSD storage by about 50 times.

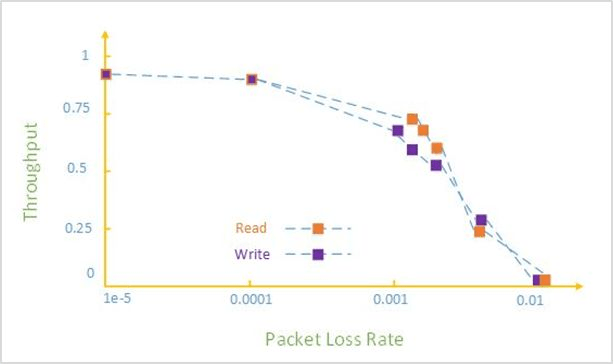

However, packet loss has a huge impact on data transfer performance. As the figure shows, a packet loss rate of just 0.1% causes RDMA throughput to drop sharply, and at 2% loss throughput falls to zero.
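Why does such a small loss rate hurt so much? One common explanation is that RoCE NICs have historically recovered from loss with go-back-N retransmission, so a single drop forces everything sent after it to be resent. The sketch below uses the textbook go-back-N efficiency formula, assuming the window (packets in flight) equals the bandwidth-delay product; the link speed, RTT, and MTU are hypothetical, and timeouts are ignored, so this is a toy model rather than a reproduction of the figure.

```python
import math


def window_packets(link_gbps: float, rtt_us: float, mtu_bytes: int) -> int:
    """Packets in flight needed to fill the pipe (bandwidth-delay product)."""
    bits_in_flight = link_gbps * 1e9 * rtt_us * 1e-6
    return max(1, math.ceil(bits_in_flight / (8 * mtu_bytes)))


def gbn_efficiency(loss_rate: float, window: int) -> float:
    """Fraction of transmissions that deliver new data under go-back-N.

    Each loss wastes the lost packet plus the (window - 1) packets already
    sent behind it, so efficiency = (1 - p) / (1 + (W - 1) * p).
    Timeouts and congestion control are ignored.
    """
    if not 0.0 <= loss_rate < 1.0:
        raise ValueError("loss_rate must be in [0, 1)")
    if window < 1:
        raise ValueError("window must be at least 1")
    return (1 - loss_rate) / (1 + (window - 1) * loss_rate)


w = window_packets(link_gbps=200, rtt_us=10, mtu_bytes=1024)  # hypothetical link
for p in (0.0, 0.001, 0.02):
    print(f"loss {p:.1%}: efficiency {gbn_efficiency(p, w):.2f}")
```

With these hypothetical numbers the window is 245 packets, and efficiency falls to roughly 80% at 0.1% loss and about 17% at 2% loss. Real RoCE throughput degrades further because retransmission timeouts and rate reduction add to the wasted transmissions modeled here.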

Because InfiniBand networks are very expensive, vendors have looked for less costly ways to carry RDMA traffic. RoCEv2 (RDMA over Converged Ethernet version 2) replaces the InfiniBand network layer with IPv4/IPv6 and UDP headers carried over Ethernet. IP and UDP make the network more scalable, allow traffic to be routed at Layer 3, and enable load balancing, but RoCEv2 still requires a lossless network for optimal performance.
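To show what carrying RDMA over IP and UDP means on the wire, here is a minimal sketch that totals the per-packet RoCEv2 header overhead and packs the UDP header. The sizes are the standard ones (Ethernet without a VLAN tag, IPv4 without options, the 12-byte InfiniBand Base Transport Header, a 4-byte ICRC); extended transport headers such as RETH are deliberately left out, and the source port in the example is hypothetical.

```python
import struct

ROCEV2_UDP_PORT = 4791  # IANA-registered UDP destination port for RoCEv2

HEADER_BYTES = {
    "eth": 14,    # Ethernet header, no VLAN tag
    "ipv4": 20,   # IPv4 header without options
    "ipv6": 40,   # fixed IPv6 header
    "udp": 8,
    "bth": 12,    # InfiniBand Base Transport Header
    "icrc": 4,    # invariant CRC trailer
    "fcs": 4,     # Ethernet frame check sequence
}


def rocev2_overhead(ip_version: int = 4) -> int:
    """Bytes of header and trailer that RoCEv2 adds around each payload."""
    if ip_version not in (4, 6):
        raise ValueError("ip_version must be 4 or 6")
    ip = "ipv4" if ip_version == 4 else "ipv6"
    return sum(HEADER_BYTES[k] for k in ("eth", ip, "udp", "bth", "icrc", "fcs"))


def payload_efficiency(payload: int, ip_version: int = 4) -> float:
    """Share of frame bytes that carry application data."""
    return payload / (payload + rocev2_overhead(ip_version))


def rocev2_udp_header(src_port: int, payload_len: int) -> bytes:
    """Pack a UDP header addressed to the RoCEv2 port.

    UDP length covers the UDP header, BTH, payload and ICRC.
    The checksum is left as 0 (not computed) in this sketch.
    """
    udp_len = HEADER_BYTES["udp"] + HEADER_BYTES["bth"] + payload_len + HEADER_BYTES["icrc"]
    return struct.pack("!HHHH", src_port, ROCEV2_UDP_PORT, udp_len, 0)


print(rocev2_overhead(4), rocev2_overhead(6), f"{payload_efficiency(1024):.3f}")
header = rocev2_udp_header(src_port=49152, payload_len=1024)  # hypothetical flow
```

Only the destination port is fixed; varying the UDP source port per flow gives the switches’ ECMP hashing the entropy it needs, which is how the IP/UDP encapsulation enables load balancing.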

What is NVMe-oF in Storage Networks?

NVMe (Non-Volatile Memory Express) is a protocol designed specifically for accessing storage devices, such as solid-state drives (SSDs), over PCIe. NVMe offers significantly faster data transfer speeds and lower latency compared to traditional storage protocols like SATA or SAS.

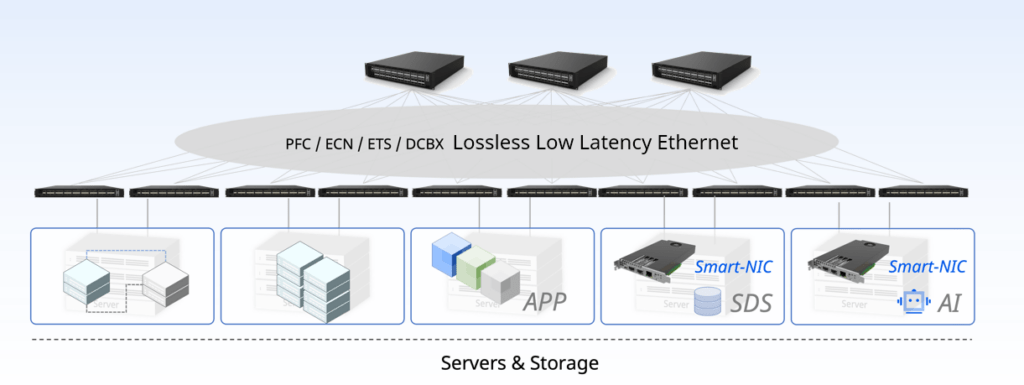

NVMe over Fabrics (NVMe-oF) extends NVMe beyond the local PCIe bus to a network fabric, and RoCEv2 is one of the transports it can run over. Combining NVMe with RoCEv2 gives storage networks a highly performant and scalable solution: hosts access remote NVMe storage devices directly, bypassing traditional storage protocols and much of the network stack. As a result, latency drops significantly and throughput improves, enabling faster and more efficient data transfers.
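On a Linux host, attaching to an NVMe-oF target over RoCEv2 is typically done with the nvme-cli tool using its `rdma` transport. The sketch below only builds that command line; the target address and NQN are hypothetical placeholders, and actually running it assumes nvme-cli, root privileges, and an RDMA-capable NIC.

```python
import shlex


def nvme_rdma_connect_cmd(traddr: str, nqn: str, trsvcid: int = 4420) -> list[str]:
    """Build an nvme-cli 'connect' command for an NVMe-oF target reached over RDMA."""
    if not 0 < trsvcid < 65536:
        raise ValueError("trsvcid must be a valid port number")
    return [
        "nvme", "connect",
        "--transport=rdma",        # RoCEv2 is exposed to the host as an RDMA transport
        f"--traddr={traddr}",      # IP address of the storage target
        f"--trsvcid={trsvcid}",    # 4420 is the IANA-assigned NVMe-oF port
        f"--nqn={nqn}",            # NVMe Qualified Name of the target subsystem
    ]


# Hypothetical target address and subsystem NQN
cmd = nvme_rdma_connect_cmd("192.0.2.10", "nqn.2014-08.org.example:storage-pool-1")
print(shlex.join(cmd))
```

On a real host you would pass `cmd` to `subprocess.run(cmd, check=True)`; keeping the arguments as a list avoids shell-quoting problems.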

Build a Lossless Data Center Fabric with “Easy RoCE Configuration”

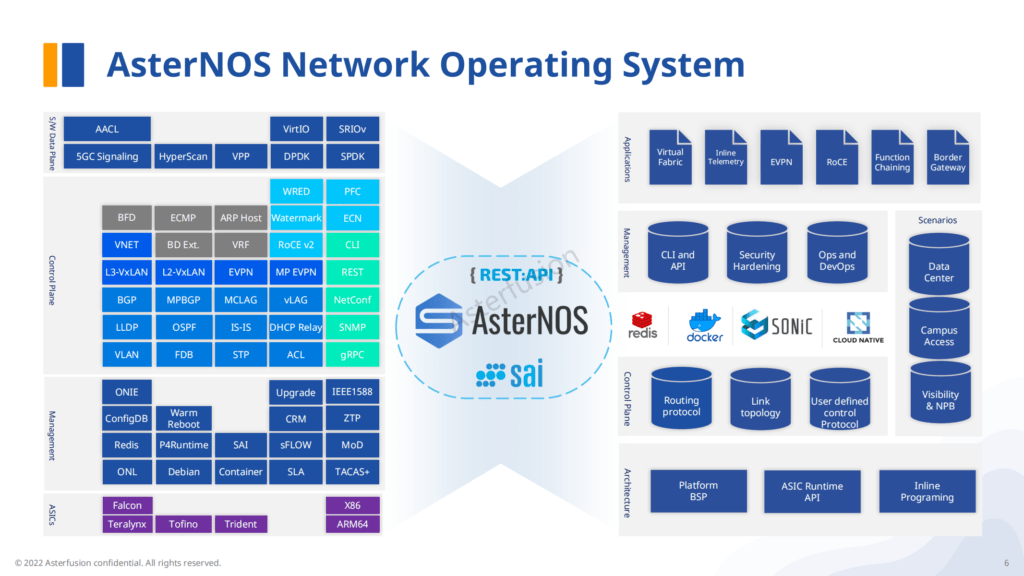

Running AsterNOS, Asterfusion’s SONiC distribution, Asterfusion CX-N series switches support Data Center Bridging (DCB) features such as PFC and PFC Watchdog, along with ECN, to reduce packet loss and congestion. With forwarding latency as low as 400ns per port, they ensure efficient data transmission. (Check out the test results comparing a distributed storage network built on Asterfusion’s low-latency switches with one built on InfiniBand switches.)
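The two mechanisms work at different timescales: ECN marks packets early so senders slow down, while PFC pauses a single priority when a queue is about to overflow. The sketch below models the RED-style marking curve commonly paired with DCQCN, plus the duration of a PFC pause (IEEE 802.1Qbb measures pause time in quanta of 512 bit times). The thresholds are hypothetical, not AsterNOS defaults.

```python
PFC_QUANTUM_BITS = 512  # one PFC pause quantum = 512 bit times (IEEE 802.1Qbb)


def ecn_mark_probability(queue_kb: float, kmin_kb: float, kmax_kb: float, pmax: float) -> float:
    """RED-style ECN marking probability for a given egress queue depth."""
    if queue_kb <= kmin_kb:
        return 0.0                      # short queue: never mark
    if queue_kb >= kmax_kb:
        return 1.0                      # long queue: mark every packet
    # Linear ramp from 0 at kmin to pmax at kmax
    return pmax * (queue_kb - kmin_kb) / (kmax_kb - kmin_kb)


def pfc_pause_us(quanta: int, link_gbps: float) -> float:
    """How long a PFC pause frame with `quanta` stops one priority, in microseconds."""
    if not 0 <= quanta <= 0xFFFF:
        raise ValueError("PFC pause time is a 16-bit field")
    return quanta * PFC_QUANTUM_BITS / (link_gbps * 1e9) * 1e6


# Hypothetical thresholds, not AsterNOS defaults
for depth in (50, 250, 500):
    print(depth, ecn_mark_probability(depth, kmin_kb=100, kmax_kb=400, pmax=0.2))
print(f"max pause at 200G: {pfc_pause_us(0xFFFF, 200):.1f} us")
```

Because a maximal pause lasts only about 168 µs at 200G, PFC is meant as a last-resort brake; tuning ECN so marking starts well before PFC triggers keeps pauses rare.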

Normally, O&M engineers must modify PFC and ECN settings separately to enable or disable a lossless network. To reduce this O&M complexity, AsterNOS provides an encapsulated command set, “Easy RoCE Configuration”, that wraps these settings together.
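The idea behind bundling the commands can be modeled in a few lines. The sketch below is purely illustrative and does not reproduce AsterNOS commands or data structures: the profile fields, priority value, thresholds, and port names are all hypothetical. It shows why a single entry point helps, since PFC and ECN are always switched on or off together and cannot drift out of sync.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class LosslessProfile:
    """Hypothetical bundle of the settings a lossless RoCE class needs."""
    priority: int = 3            # hypothetical lossless priority / traffic class
    ecn_kmin_kb: int = 100       # hypothetical ECN thresholds
    ecn_kmax_kb: int = 400
    ecn_pmax: float = 0.2


@dataclass
class PortConfig:
    pfc_priorities: set[int] = field(default_factory=set)
    ecn: Optional[dict] = None


def set_lossless(ports: dict[str, PortConfig], profile: LosslessProfile, enable: bool = True) -> None:
    """Enable or disable PFC and ECN together on every port, so they cannot drift apart."""
    for cfg in ports.values():
        if enable:
            cfg.pfc_priorities.add(profile.priority)
            cfg.ecn = {
                "priority": profile.priority,
                "kmin_kb": profile.ecn_kmin_kb,
                "kmax_kb": profile.ecn_kmax_kb,
                "pmax": profile.ecn_pmax,
            }
        else:
            cfg.pfc_priorities.discard(profile.priority)
            cfg.ecn = None


ports = {name: PortConfig() for name in ("Ethernet0", "Ethernet8")}  # hypothetical port names
set_lossless(ports, LosslessProfile())
print(ports["Ethernet0"])
set_lossless(ports, LosslessProfile(), enable=False)
print(ports["Ethernet0"])
```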

Beyond distributed storage networks, Asterfusion’s low-latency data center switches have also been deployed at scale in AIGC, HPC, and other scenarios.

For more information, visit cloudswit.ch or email bd@cloudswit.ch.