On-site Test Result: Asterfusion RoCEv2-enabled SONiC Switches Vs. InfiniBand

Written by Asterfusion


In early AI and ML deployments, NVIDIA's InfiniBand networking solution was the preferred choice because of its very low network latency. Over time, however, networking vendors and hyperscalers have begun actively exploring Ethernet alternatives to meet the demands of rapidly expanding AI workloads. Today, using RoCEv2 instead of InfiniBand to carry RDMA traffic has become a common approach in the industry. This article compares the two using on-site test results.

What is InfiniBand?

InfiniBand is a network communication protocol that moves data and messages by creating a dedicated, protected channel directly between nodes through a switch. RDMA and send/receive offloads are managed and performed by InfiniBand adapters. One end of the adapter connects to the CPU via PCIe, and the other end connects to the InfiniBand subnet via an InfiniBand network port. Compared with other network communication protocols, this provides higher bandwidth, lower latency, and better scalability.

Challenges of InfiniBand Network Deployments

- Vendor lock-in: Only one vendor has mature IB products and solutions, and they are expensive.

- Compatibility: InfiniBand uses its own protocol rather than TCP/IP, so it requires a specialized network with separate cabling and switches.

- Availability: IB switches generally have long delivery times.

- Service: O&M depends on the manufacturer, which makes faults difficult to locate and problems slow to resolve.

- Expansion and upgrading: Depends on the pace of the vendor's product releases.

What is RoCE and RoCEv2?

RDMA (Remote Direct Memory Access) is a technology that significantly increases data throughput and reduces latency. It was initially deployed on InfiniBand, but has since been successfully brought to Ethernet infrastructure.

Currently, the prevailing approach to high-performance networking is to build an RDMA-capable network based on the RoCEv2 (RDMA over Converged Ethernet version 2) protocol. It relies on two key technologies, Priority Flow Control (PFC) and Explicit Congestion Notification (ECN), to keep the Ethernet fabric lossless and to signal congestion. Its advantages include cost-effectiveness, good scalability, and freedom from vendor lock-in.
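
To make the ECN part more concrete, here is a minimal Python sketch of the RED-style marking ramp that switches commonly use to decide when to set the ECN mark on a packet. It is an illustration only, not AsterNOS configuration or code: the function name and the threshold values `KMIN`, `KMAX`, and `PMAX` are assumptions chosen for the example. PFC, which pauses a traffic priority when a buffer threshold is crossed, is a separate mechanism and is not modeled here.

```python
def ecn_mark_probability(queue_bytes: int, kmin: int, kmax: int, pmax: float) -> float:
    """Probability of ECN-marking one packet, given the egress queue depth.

    RED-style ramp: never mark at or below kmin, ramp linearly up to pmax
    at kmax, and always mark once the queue is deeper than kmax.
    """
    if not 0 <= kmin < kmax:
        raise ValueError("need 0 <= kmin < kmax")
    if not 0.0 <= pmax <= 1.0:
        raise ValueError("pmax must be in [0, 1]")
    if queue_bytes <= kmin:
        return 0.0
    if queue_bytes > kmax:
        return 1.0
    return pmax * (queue_bytes - kmin) / (kmax - kmin)


# Hypothetical thresholds, chosen only for illustration.
KMIN, KMAX, PMAX = 100_000, 400_000, 0.2

for depth in (50_000, 250_000, 400_000, 500_000):
    print(depth, ecn_mark_probability(depth, KMIN, KMAX, PMAX))
```

A lower `KMIN` makes senders slow down earlier, which tends to favor latency, while a higher one tends to favor throughput. Suitable values depend on the switch buffer and the workload.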

For more about RoCE, please read: https://cloudswit.ch/blogs/roce-rdma-over-converged-ethernet-for-high-efficiency-network-performance/#what-is-ro-ce%EF%BC%9F

Next, we'll look at how Asterfusion RoCEv2 low-latency SONiC switches perform against InfiniBand switches and other Ethernet switches in AIGC, distributed storage, and HPC networks.

RoCEv2 Switches Test Result in AIGC Network

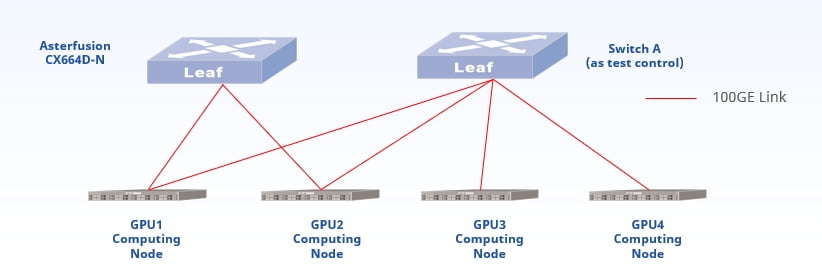

We built an AIGC network test environment using the Asterfusion CX664D-N ultra-low-latency switch and a RoCEv2-capable switch from another brand (Switch A) to compare NCCL performance.

NCCL is short for NVIDIA Collective Communications Library. NVIDIA's open-source nccl-tests tool can be used to check that collective communication works correctly and to stress test collective communication bandwidth.

https://github.com/NVIDIA/nccl-tests

https://docs.nvidia.com/deeplearning/nccl/user-guide/docs/overview.html
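
When reading nccl-tests output, it helps to know that the tool reports two bandwidth figures: algorithm bandwidth (data size divided by elapsed time) and bus bandwidth, which scales the algorithm bandwidth by a factor that depends on the collective and the number of ranks. The Python sketch below reproduces that conversion as described in the nccl-tests performance notes. The function name and the example numbers are assumptions for illustration, and the article does not state which of the two figures its tables report.

```python
def bus_bandwidth(alg_bw: float, n_ranks: int, collective: str = "all_reduce") -> float:
    """Convert nccl-tests algorithm bandwidth to bus bandwidth (same unit).

    alg_bw is data size divided by elapsed time; the correction factor
    depends on the collective and on the number of participating ranks.
    """
    if n_ranks < 2:
        raise ValueError("a collective needs at least 2 ranks")
    n = n_ranks
    factors = {
        "all_reduce": 2 * (n - 1) / n,
        "all_gather": (n - 1) / n,
        "reduce_scatter": (n - 1) / n,
        "broadcast": 1.0,
        "reduce": 1.0,
    }
    if collective not in factors:
        raise ValueError(f"unsupported collective: {collective}")
    return alg_bw * factors[collective]


# Hypothetical example: 16 ranks (2 servers x 8 GPUs) and 6.0 GB/s algorithm bandwidth.
print(bus_bandwidth(6.0, 16, "all_reduce"))
```

Bus bandwidth is the figure to compare against the link speed of the NICs and switch ports, because it does not change with the number of ranks for a given collective.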

| Item | Model | Specs | Quantity | Remark |
| --- | --- | --- | --- | --- |
| Switch | Asterfusion CX664D-N (64-port 200G); Switch A (48-port 200G, as a test control) | \ | 1 each | |
| Server | X86_64 | CPU: Intel Xeon Silver 4214; Memory: 256G | 2 | Need 200G NIC and corresponding driver |
| Optical Module | 100G | QSFP28 | 4 | \ |
| Fiber | Multi-mode | \ | 2 | \ |
| NIC | \ | MT28800 Family CX-5 Lx | 2 | Switch to Ethernet Mode |
| OS | Ubuntu 20.04 | \ | \ | Kernel version > 5.10 |
| Mellanox Driver | MLNX_OFED-5.0 | \ | \ | NIC driver adapted to host kernel |
| Switch OS | AsterNOS SONiC 201911.R0314P02 | \ | \ | \ |
| NCCL | V2.13 | \ | \ | \ |
| OpenMPI | V4.0.3 | \ | \ | \ |

NCCL-Test (Bandwidth & Latency)

Layer 2 network, RoCEv2 enabled on the CX664D-N switch, GPU server NICs optimized for RDMA.

| Bandwidth (GB/s) | GPU0 | GPU1 | GPU2 | GPU3 | GPU4 | GPU5 | GPU6 | GPU7 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Switch A GPU1-2 | 5.39 | 6.61 | 11.31 | 7.22 | 2.01 | 2.45 | 1.77 | 2.28 |
| Switch A GPU3-4 | 5.97 | 7.17 | 11.21 | 7.76 | 2.4 | 2.81 | 2.05 | 2.66 |
| Asterfusion GPU1-2 | 6.02 | 7.22 | 11.24 | 7.8 | 2.39 | 2.85 | 1.87 | 2.66 |

| Latency (μs) | GPU0 | GPU1 | GPU2 | GPU3 | GPU4 | GPU5 | GPU6 | GPU7 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Switch A GPU1-2 | 22.04 | 21.81 | 21.84 | 22.31 | 22.93 | 22.42 | 22.9 | 22.75 |
| Switch A GPU3-4 | 21.63 | 21.65 | 21.98 | 21.71 | 22.46 | 22.51 | 23.1 | 23.05 |
| Asterfusion GPU1-2 | 22.57 | 21.79 | 21.78 | 21.62 | 22.79 | 22.63 | 23.01 | 23.16 |

| | Mellanox CX-5 (Direct connection) | CX664D-N | Switch A |
| --- | --- | --- | --- |
| Latency (ns) | 1400ns | 480ns | 580ns |
| Bandwidth (Gb/s) | 99.4 | 98.14 | 98.14 |

More about Asterfusion AIGC Networking Solutions: Cost Effective AIGC Network Solution by Asterfusion ROCEv2 Ready Switch

Asterfusion RoCEv2 AIGC network Solution

For AI/ML computing clusters that need speed and reliability, Asterfusion offers a high-performance, low-TCO network built on 800G/400G/200G Ethernet, RoCEv2 technology, and intelligent load balancing. It is a one-stop solution for clusters of any size.

For more about the Asterfusion AI network solution: https://cloudswit.ch/solution/rocev2-ai-solution-with-dgx-superpod/

RoCEv2 Switches Test Result in HPC Network

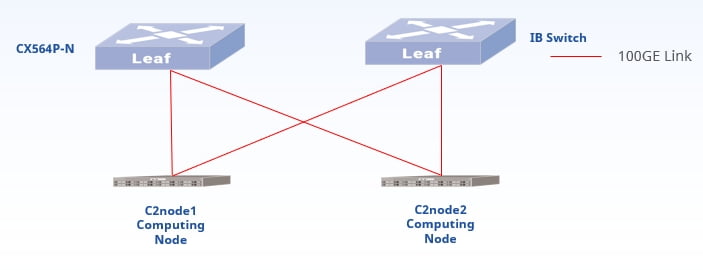

We built an HPC test network using an Asterfusion CX564P-N ultra-low-latency switch and a Mellanox InfiniBand switch to compare their performance.

| Item | Model | Specs | Quantity | Remark |
| --- | --- | --- | --- | --- |
| Switch | Asterfusion CX564P-N (64-port 100G) | \ | 1 | |
| Server | X86_64 | CPU: Intel Xeon Gold 6348; Memory: 512G | 2 | \ |
| Optical Module | 100G | QSFP28 | 6 | \ |
| Fiber | Multi-mode | \ | 3 | \ |
| NIC | MCX455A-ECA_AX | Mellanox CX-4 Lx | 6 | Switch to Ethernet Mode |
| OS | CentOS 7.9.2009 | \ | \ | Kernel version > 3.10.0-957.el7 |
| Mellanox NIC Driver | MLNX_OFED-5.7 | \ | \ | \ |
| Switch OS | AsterNOS (SONiC201911.R0314P02) | \ | \ | \ |
| Kernel | 3.10.0-1160.el7.x86_64 | \ | \ | \ |
| WRF | V4.0 | \ | \ | \ |

Test Result

Layer 2 network, RoCEv2 enabled on the CX564P-N switch, HPC nodes (Mellanox NICs) optimized for RDMA.

E2E forwarding performance test

| E2E Latency | Switch Model | T1 | T2 | T3 | AVG |
| --- | --- | --- | --- | --- | --- |
| Asterfusion | CX564P-N | 1580ns | 1570ns | 1570ns | 1573.3ns |
| Mellanox | IB Switch | 1210ns | 1220ns | 1220ns | 1213.3ns |

HPC application performance test (WRF)

| WRF/s | T1 | T2 | T3 | AVG |
| --- | --- | --- | --- | --- |
| Single Device 24 core | 1063.22 | 1066.62 | 1066.39 | 1065.41 |
| Dual Device 24 core NIC direct connection | 1106.95 | 1110.73 | 1107.31 | 1108.33 |
| Dual Device 24 core RoCE | 1117.21 | 1114.32 | 1114.34 | 1115.29 |
| Dual Device 24 core IB | 1108.35 | 1110.44 | 1110.55 | 1109.78 |
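
To put the HPC numbers side by side, the short Python sketch below computes the gap between the RoCEv2 and InfiniBand setups from the AVG columns reported above. The helper name is hypothetical and the figures are copied from the tables as published. By this arithmetic the end-to-end latency averages differ by about 360 ns (roughly 30%), while the WRF averages differ by only about 0.5%.

```python
def percent_difference(value: float, baseline: float) -> float:
    """Relative difference of value against baseline, in percent."""
    return (value - baseline) / baseline * 100.0


# AVG columns as reported in the tables above.
e2e_avg_ns = {"Asterfusion RoCEv2": 1573.3, "Mellanox IB": 1213.3}
wrf_avg = {"RoCE": 1115.29, "IB": 1109.78}

latency_gap_ns = e2e_avg_ns["Asterfusion RoCEv2"] - e2e_avg_ns["Mellanox IB"]
latency_gap_pct = percent_difference(e2e_avg_ns["Asterfusion RoCEv2"], e2e_avg_ns["Mellanox IB"])
wrf_gap_pct = percent_difference(wrf_avg["RoCE"], wrf_avg["IB"])

print(f"E2E latency gap: {latency_gap_ns:.1f} ns ({latency_gap_pct:.2f}%)")
print(f"WRF gap: {wrf_gap_pct:.2f}%")
```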

RoCEv2 Switches Test Result in Distributed Storage Network

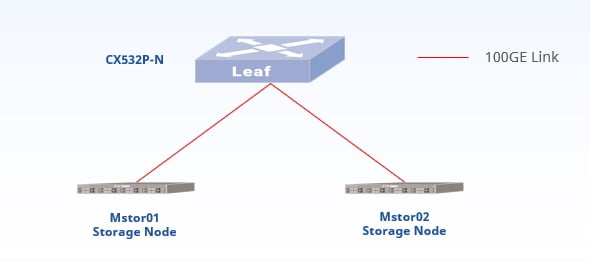

We built a storage network using Asterfusion CX532P-N ultra-low-latency switches and performed stress tests. The tests use the FIO tool to run 4K/1M read/write tests and record storage latency, bandwidth, IOPS, and other data.
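
The FIO test matrix described above (4K and 1M blocks, random and sequential, read and write) can be sketched as a small Python helper that builds one `fio` command line per workload. This is an illustration under stated assumptions, not the exact job definition used in the test: the target path, file size, queue depth, job count, and runtime are hypothetical values, since the article does not list them.

```python
from itertools import product


def build_fio_commands(filename, runtime_s=60, iodepth=32, numjobs=4, size="10G"):
    """Build fio command lines for the 4K/1M x rand/seq x read/write matrix.

    All default parameter values are assumptions for illustration only.
    """
    block_sizes = ("4k", "1M")
    patterns = ("randread", "randwrite", "read", "write")  # read/write are sequential
    commands = []
    for bs, rw in product(block_sizes, patterns):
        commands.append([
            "fio",
            f"--name={bs}-{rw}",
            f"--filename={filename}",
            f"--rw={rw}",
            f"--bs={bs}",
            f"--size={size}",
            "--ioengine=libaio",
            "--direct=1",            # bypass the page cache
            f"--iodepth={iodepth}",
            f"--numjobs={numjobs}",
            f"--runtime={runtime_s}",
            "--time_based",
            "--group_reporting",
            "--output-format=json",
        ])
    return commands


# Hypothetical target file on the storage mount under test.
for cmd in build_fio_commands("/mnt/test/fio.dat"):
    print(" ".join(cmd))
```

Each printed line can be run by hand or passed to `subprocess.run`. The JSON output contains the bandwidth, IOPS, and latency figures of the kind recorded in the result tables.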

| Item | Model | Specs | Quantity | Remark |
| --- | --- | --- | --- | --- |
| Switch | Asterfusion CX532P-N | \ | 1 | 32-port 100G |
| Server | X86_64 | CPU: AMD EPYC 7402P*2; Memory: 256G | 2 | Need 100G NIC and corresponding driver |
| Optical Module | 100G | QSFP28 | 4 | \ |
| Fiber | Multi-mode | \ | 2 | \ |
| NIC | MCX455A-ECA_AX | Mellanox CX-4 Lx | 2 | Switch to Ethernet Mode |
| OS | CentOS 7.9.2009 | \ | \ | Kernel version > 3.10.0-957.el7 |
| Mellanox Driver | MLNX_OFED-5.7 | \ | \ | \ |
| Switch OS | SONiC201911.R0314P02 | \ | \ | \ |
| FIO | V3.19 | \ | \ | \ |
| Kernel | 3.10.0-1160.el7.x86_64 | \ | \ | \ |

Test Result

| | 1M RandRead | 1M RandWrite | 1M SeqRead | 1M SeqWrite |
| --- | --- | --- | --- | --- |
| Bandwidth | 20.4GB/s | 6475MB/s | 14.6GB/s | 6455MB/s |
| IOPS | 20.9K | 6465 | 20.2K | 6470 |
| Latency | 3060.02us | 9881.59us | 4249.79us | 9912.59us |

| | 4K RandRead | 4K RandWrite | 4K SeqRead | 4K SeqWrite |
| --- | --- | --- | --- | --- |
| Bandwidth | 3110MB/s | 365MB/s | 656MB/s | 376MB/s |
| IOPS | 775K | 101K | 173K | 105K |
| Latency | 79.59us | 683.87us | 380.54us | 664.06us |

More about Asterfusion Distributed Storage Network Solutions (NetApp MetroCluster IP compatible): https://cloudswit.ch/blogs/boost-netapp-metrocluster-ip-storage-with-asterfusion-data-center-switch/

Conclusion: Asterfusion RoCEv2 low latency SONiC switches are fully capable of replacing IB switches

Asterfusion low latency network solutions offer the following advantages:

- Low cost: Less than half the cost of an IB switch.

- Availability: Asterfusion has hundreds of low-latency switches available, with a lead time of 2-4 weeks.

- After-sales service: A professional, patient, and reliable team provides 24-hour remote online technical support.

- Expansion and upgrading: Based on AsterNOS (Asterfusion's enterprise-ready SONiC), which supports flexible feature expansion and online upgrades.

We have summarized more test data from customers in different industries. If you are interested, please visit our website to download it: https://help.cloudswit.ch/portal/en/home