Recently, an Asterfusion client in the financial industry wanted to simulate and respond to risks in real time in order to strengthen its enterprise financial risk management. In finance and in other industries alike, meeting customer needs in a digital world requires processing large amounts of data faster than standard computers can. As a result, enterprises are increasingly turning to high-performance computing (HPC) solutions. This article compares the Asterfusion low latency switch with an InfiniBand switch in an HPC scenario.

What is HPC (High-Performance Computing)

High-performance computing (HPC) is the ability to process data and perform complex calculations at extremely high speeds. For example, a laptop or desktop computer with a 3 GHz processor can perform about 3 billion calculations per second. That is much faster than any human being can achieve, but it still pales in comparison to HPC solutions that can perform trillions of calculations per second.

The general architecture of HPC consists of three parts: computing, storage, and network. HPC improves computing speed through parallel technology: tens, hundreds, or even thousands of computers work together on the same job in parallel. These computers must communicate with one another and process tasks cooperatively, which requires a high-speed network with strict requirements on latency and bandwidth.
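The split-then-combine pattern behind "working in parallel" can be sketched on a single machine. The example below is only an illustration under stated assumptions: the function names are hypothetical, and Python threads stand in for the separate computers of a real cluster, so it shows how work is partitioned and merged rather than any actual speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def split(n_items, n_workers):
    """Return (start, stop) ranges that cover range(n_items) as evenly as possible."""
    base, extra = divmod(n_items, n_workers)
    ranges, start = [], 0
    for worker in range(n_workers):
        # The first `extra` workers each take one additional item.
        stop = start + base + (1 if worker < extra else 0)
        ranges.append((start, stop))
        start = stop
    return ranges


def partial_sum_of_squares(bounds):
    """Work done by one worker on its own slice of the problem."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))


def parallel_sum_of_squares(n_items, n_workers=4):
    """Distribute the slices, then combine the partial results."""
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        return sum(pool.map(partial_sum_of_squares, split(n_items, n_workers)))


if __name__ == "__main__":
    print(split(10, 3))
    print(parallel_sum_of_squares(1000))
```

In a real HPC cluster the final combine step crosses the network, which is why latency and bandwidth between nodes matter so much.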

RDMA, with its high bandwidth, low latency, and low resource utilization, is often the best choice for HPC networks. It is mainly deployed over two network architectures: InfiniBand and Ethernet.

Asterfusion’s CX-N Low Latency Switch Versus InfiniBand Switch

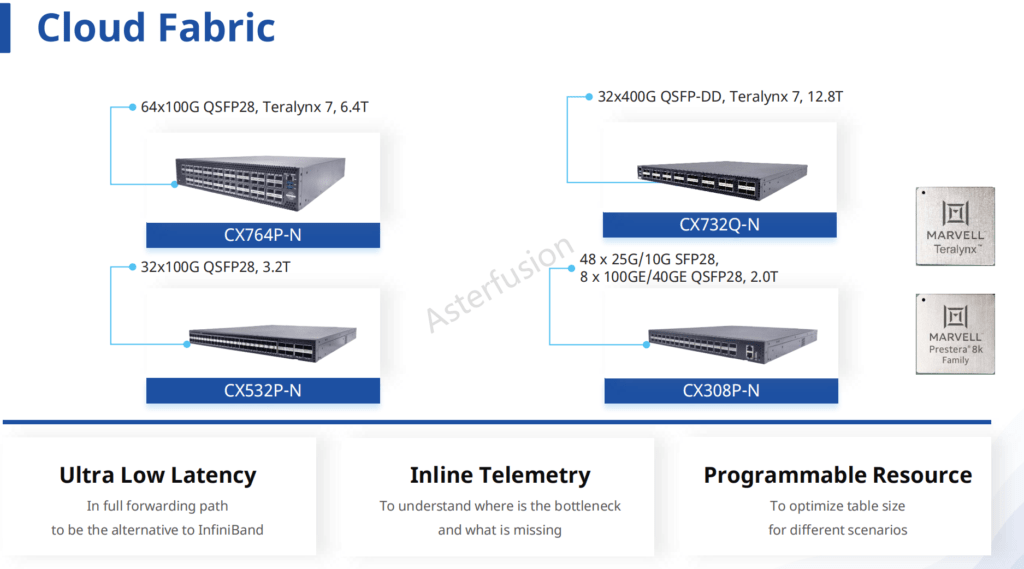

The Asterfusion CX-N ultra-low latency switch (CX-N for short) adopts the standard Ethernet protocol and open software and hardware technology. It supports lossless Ethernet and congestion-avoidance technology for lossless networks, which meets the demanding bandwidth and latency requirements of HPC applications.

To verify this claim, we selected Mellanox’s InfiniBand switch and compared operating speed under the same HPC applications. We ran E2E forwarding tests, MPI benchmark tests, and HPC application tests on networks built with Asterfusion CX-N switches and with Mellanox MSB7800 switches (IB switches for short).

Lower-Cost Alternative to InfiniBand Switch, Asterfusion Low Latency Switch

The results show that the latency performance of the Asterfusion CX-N is on the same level as that of the competitor, and its running speed is only about 3% lower than the competing product, which is enough to satisfy the majority of HPC application scenarios. It is also worth highlighting that Asterfusion pays close attention to product cost, so Asterfusion’s HPC solution has a significant advantage in cost performance. (For a CX-N cloud switch quotation, contact bd@cloudswit.ch.)
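The "about 3% lower" figure is a relative difference between two run times for the same job. As a minimal sketch of that arithmetic, the function below computes the percentage; the timings in the usage lines are made-up placeholders chosen only to illustrate the calculation, not measured results from this test.

```python
def relative_slowdown_percent(candidate_seconds, baseline_seconds):
    """Percentage by which the candidate run is slower than the baseline run.

    A positive value means the candidate took longer; a negative value
    means it finished sooner.
    """
    if baseline_seconds <= 0:
        raise ValueError("baseline_seconds must be positive")
    return (candidate_seconds - baseline_seconds) / baseline_seconds * 100.0


if __name__ == "__main__":
    # Hypothetical placeholder timings, NOT results from the test above.
    ib_seconds = 100.0
    cxn_seconds = 103.0
    print(f"{relative_slowdown_percent(cxn_seconds, ib_seconds):.1f}% slower")
```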

The Whole Process of the HPC Scenario Test

1. Target and Physical Network Topology

- E2E Forwarding Test

Test the E2E (end-to-end) forwarding latency and bandwidth of the two switches under the same topology. Packets are sent with the Mellanox IB packet sending tool, and the test sweeps message sizes from 2 to 8388608 bytes.

- MPI Benchmark

MPI benchmarks are often used to evaluate high-performance computing performance. This test uses OSU Micro-Benchmarks to evaluate the performance of the CX-N cloud switches and the IB switches.

- HPC Application Testing

This test scenario runs the same task in each HPC application and compares the operating speed of the CX-N and IB switches (that is, which one takes less time).
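For the E2E forwarding test, the 2 to 8388608 byte range corresponds to 2^1 through 2^23. The sketch below lists the message sizes in such a sweep, assuming the size doubles at every step; the article does not state the step, so treat the doubling as an assumption.

```python
def message_sizes(min_bytes=2, max_bytes=8388608):
    """Return the message sizes of a sweep that doubles from min_bytes to max_bytes.

    The doubling step is an assumption; only the two end points come
    from the test description.
    """
    sizes = []
    size = min_bytes
    while size <= max_bytes:
        sizes.append(size)
        size *= 2
    return sizes


if __name__ == "__main__":
    sizes = message_sizes()
    print(len(sizes), "sizes from", sizes[0], "to", sizes[-1], "bytes")
```

Small sizes mostly expose per-packet forwarding latency, while the large sizes at the end of the sweep are where bandwidth limits show up.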

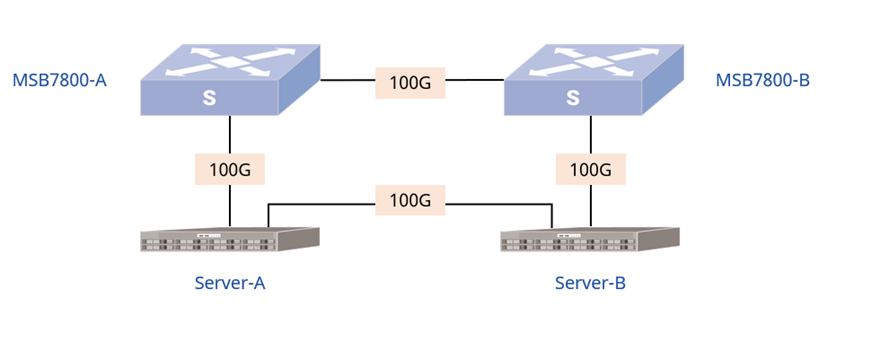

1.1 IB Switch Physical Topology

The physical topology of the IB switch setup is shown in Figure 1:

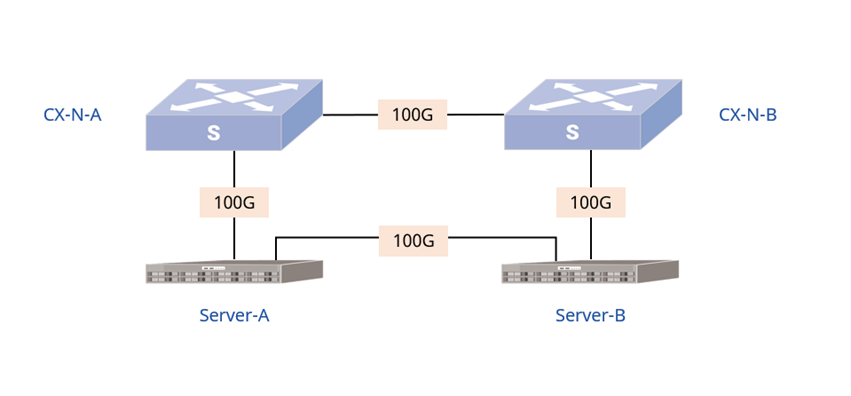

1.2 CX-N Physical Topology

The physical topology of the CX-N setup is shown in Figure 2:

1.3 Management Network Port IP Planning

The IP addresses of the devices, interfaces, and management network ports involved in the deployment are shown in Table 1 below:

| Equipment Model | Interface | IP Address | Comment |
| --- | --- | --- | --- |
| CX-N-A | Management port | 192.168.5.108 | |
| CX-N-B | Management port | 192.168.5.109 | |
| MSB7800-A | Management port | 192.168.4.200 | |
| MSB7800-B | Management port | 192.168.4.201 | |
| Server-A | Management port | 192.168.4.144 | |
| Server-B | Management port | 192.168.4.145 | |
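An address plan like Table 1 is easy to sanity-check before deployment. The sketch below groups the management addresses by subnet and looks for duplicates using Python's standard `ipaddress` module. The /24 prefix length is an assumption, since the table lists addresses without a netmask.

```python
import ipaddress
from collections import defaultdict

# Management addresses copied from Table 1.
MGMT_IPS = {
    "CX-N-A": "192.168.5.108",
    "CX-N-B": "192.168.5.109",
    "MSB7800-A": "192.168.4.200",
    "MSB7800-B": "192.168.4.201",
    "Server-A": "192.168.4.144",
    "Server-B": "192.168.4.145",
}


def group_by_subnet(ips, prefix_len=24):
    """Group device names by subnet. prefix_len=24 is assumed, not from the table."""
    groups = defaultdict(list)
    for name, addr in ips.items():
        network = ipaddress.ip_network(f"{addr}/{prefix_len}", strict=False)
        groups[str(network)].append(name)
    return dict(groups)


def duplicate_addresses(ips):
    """Return any address that is assigned to more than one device."""
    seen, dupes = set(), set()
    for addr in ips.values():
        if addr in seen:
            dupes.add(addr)
        seen.add(addr)
    return sorted(dupes)


if __name__ == "__main__":
    for subnet, names in group_by_subnet(MGMT_IPS).items():
        print(subnet, names)
    print("duplicates:", duplicate_addresses(MGMT_IPS))
```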

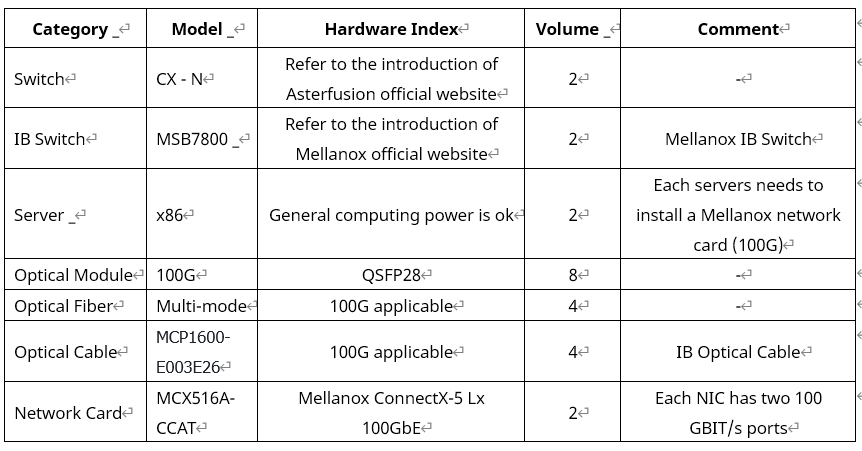

2. The hardware and software involved in the deployment environment are shown in the following tables:

| Software | Version | Comment |
| --- | --- | --- |
| Host Machine Operating System | CentOS Linux 7.8.2003 | – |
| Host Machine kernel | 3.10.0-1127.18.2.el7 | – |
| Mellanox Network Driver | 5.0-1.0.0.0 | The NIC driver version must match the host kernel version |
| WRF | davegill/wrf-coop:fourteenthtry | https://github.com/wrf-model/WRF.git |
| LAMMPS | LAMMPS (3 Mar 2020) | https://github.com/lammps/lammps/ |
| OSU MPI Benchmarks | 5.6.3 | http://mvapich.cse.ohio-state.edu |

3. Test Environment Deployment

This section describes how to install, on the two servers, the basic environment needed for the three HPC test scenarios.

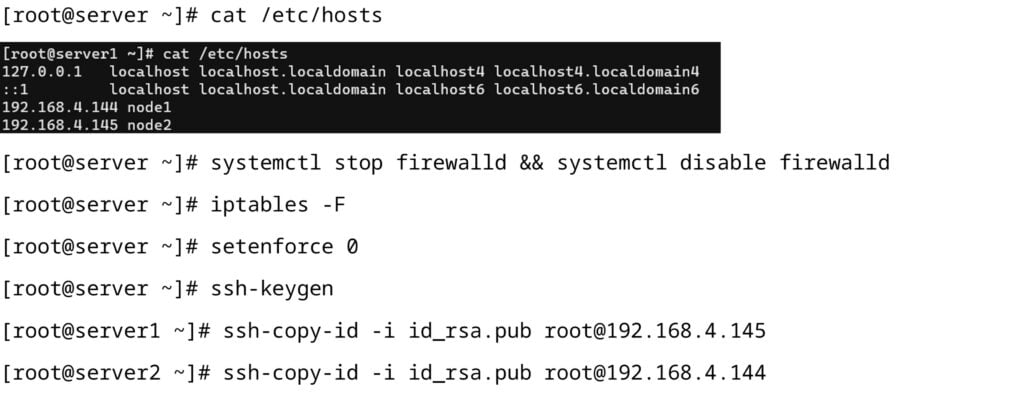

Note: A command starting with “[root@server ~]#” must be executed on both servers.

3.1 E2E Forwarding Test Environment Deployment

Install the MLNX_OFED driver for the Mellanox network card on both servers. After the driver is installed, check the status of the network card and the driver to make sure the card works normally.

- NIC MLNX_OFED driver installation:

- Check the NIC and NIC driver status:
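As a hedged sketch of the status check, the helper below runs a diagnostic tool only if it is found on the PATH and returns its output. It assumes the `ofed_info` and `ibv_devinfo` utilities that normally ship with MLNX_OFED; the function name is hypothetical, and these are not necessarily the commands used in the original test.

```python
import shutil
import subprocess


def run_if_available(tool, *args):
    """Run `tool` with `args` and return its stdout, or None if it is not installed."""
    path = shutil.which(tool)
    if path is None:
        return None
    result = subprocess.run(
        [path, *args], capture_output=True, text=True, check=False
    )
    return result.stdout


if __name__ == "__main__":
    # Assumed tools: `ofed_info -s` reports the installed OFED version,
    # `ibv_devinfo` lists RDMA devices and their port state.
    for tool, args in (("ofed_info", ("-s",)), ("ibv_devinfo", ())):
        output = run_if_available(tool, *args)
        if output is None:
            print(f"{tool}: not found on PATH, is the driver installed?")
        else:
            print(f"--- {tool} ---")
            print(output)
```

Run it on both servers; a missing tool or a port that is not active is worth resolving before starting any latency measurement.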

3.2 MPI Benchmark Environment Deployment

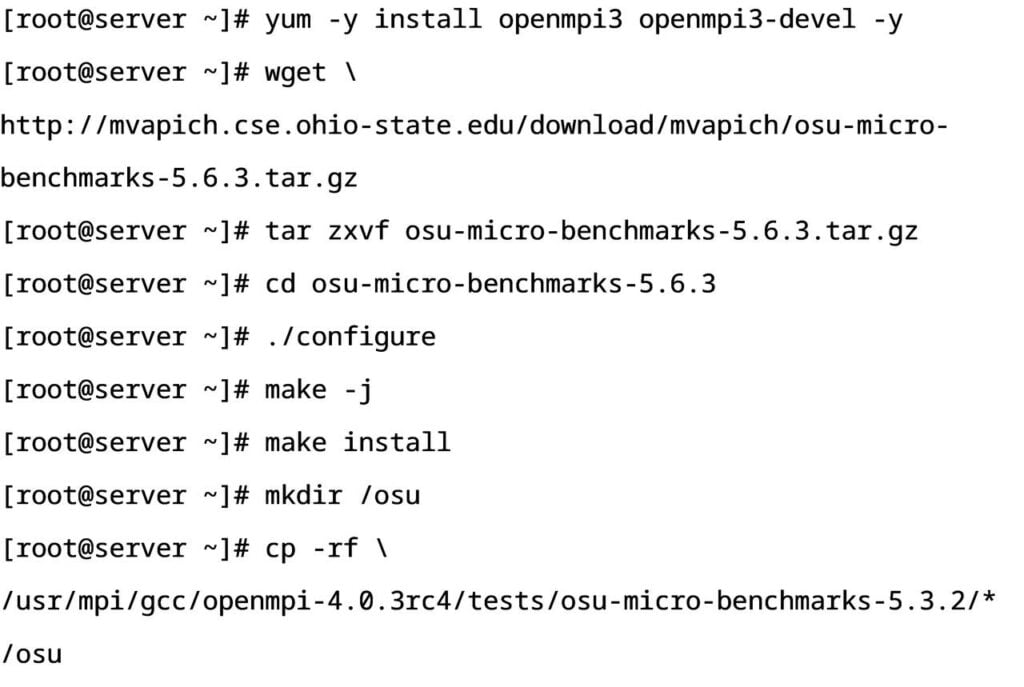

Install the basic HPC cluster environment on both servers, then install OSU MPI Benchmarks, a tool for evaluating MPI communication efficiency. Testing is divided into two modes: point-to-point communication and network communication. Bandwidth and latency are measured by running MPI in each of these modes.

HPC Cluster High-performance Basic Environment:

OSU MPI Benchmarks Tool Installation:
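Once the benchmarks are built, a point-to-point test is typically launched with one MPI rank on each server. The sketch below only assembles such a command line. It assumes an Open MPI style `mpirun` (`-np`, `--host`) and the `osu_latency` and `osu_bw` programs from OSU Micro-Benchmarks; the host names and the benchmark directory are hypothetical placeholders.

```python
import shlex


def osu_command(benchmark, hosts, bench_dir="./osu-micro-benchmarks/mpi/pt2pt"):
    """Build an mpirun argument list that starts one rank per host.

    bench_dir is a hypothetical path; point it at wherever the
    benchmarks were actually built or installed.
    """
    return [
        "mpirun",
        "-np", str(len(hosts)),
        "--host", ",".join(hosts),
        f"{bench_dir}/{benchmark}",
    ]


if __name__ == "__main__":
    hosts = ["server-a", "server-b"]  # hypothetical host names
    for benchmark in ("osu_latency", "osu_bw"):
        argv = osu_command(benchmark, hosts)
        # Print a copy-pasteable shell line instead of executing it.
        print(" ".join(shlex.quote(arg) for arg in argv))
```

Running the same command against the CX-N network and the IB network keeps the comparison like for like.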

3.3 HPC Application Test Environment Deployment

Install the HPC test applications on both servers. This solution deploys WRF, an open source weather simulation package, and LAMMPS, a parallel atomic and molecular simulator, for data testing.

WRF Installation and Deployment:

WRF stands for Weather Research and Forecasting Model. It is software for weather research and forecasting.

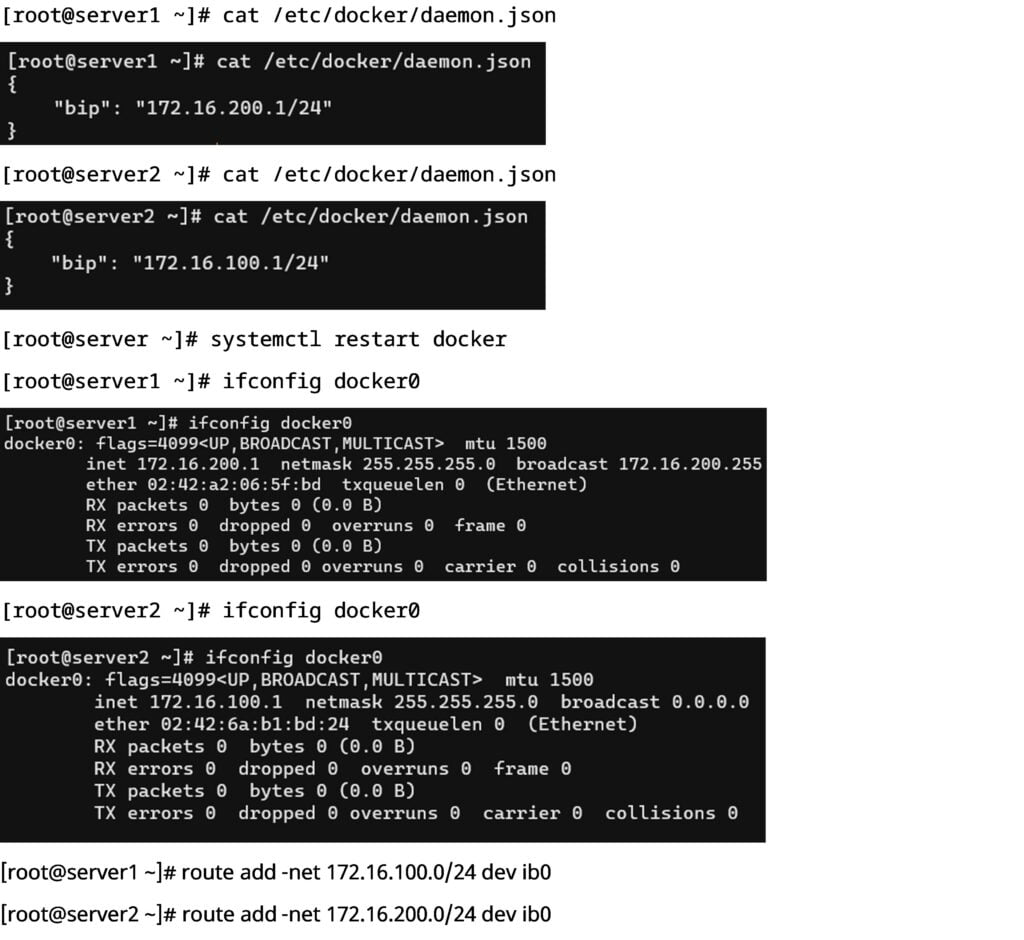

- Modify Docker Network Configuration

In this solution, the two WRF servers are deployed in Docker containers. The Docker configuration files need to be modified to bind the virtual network bridge to the Mellanox network adapter, so that containers on different hosts can communicate through direct routing.
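One common way to get cross-host container traffic with direct routing is to give each host's Docker bridge its own subnet and add a static route to the peer's subnet via the peer's NIC address. The sketch below generates that configuration. It is an illustration under assumptions, not the original procedure: all addresses and host names are hypothetical, and it relies only on the `bip` key of Docker's `daemon.json` and on standard `ip route add` syntax.

```python
import ipaddress
import json

# Hypothetical plan: nic_ip is the address on the Mellanox adapter,
# bridge is the docker0 address and prefix on that host.
HOSTS = {
    "server-a": {"nic_ip": "10.0.0.1", "bridge": "172.17.1.1/24"},
    "server-b": {"nic_ip": "10.0.0.2", "bridge": "172.17.2.1/24"},
}


def daemon_json(host):
    """Contents for /etc/docker/daemon.json on `host` (sets the bridge IP)."""
    return json.dumps({"bip": HOSTS[host]["bridge"]}, indent=2)


def route_commands(host):
    """Static routes `host` needs in order to reach every peer's container subnet."""
    commands = []
    for peer, cfg in HOSTS.items():
        if peer == host:
            continue
        subnet = ipaddress.ip_interface(cfg["bridge"]).network
        commands.append(f"ip route add {subnet} via {cfg['nic_ip']}")
    return commands


if __name__ == "__main__":
    for host in HOSTS:
        print(f"# {host}")
        print(daemon_json(host))
        for command in route_commands(host):
            print(command)
```

With a setup like this, IP forwarding must also be enabled on both hosts and the firewall must allow forwarded traffic between the two container subnets.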

- WRF Application Deployment

LAMMPS Installation and Deployment:

LAMMPS stands for Large-scale Atomic/Molecular Massively Parallel Simulator. It is mainly used for calculations and simulations related to molecular dynamics.

- Install GCC-7.3

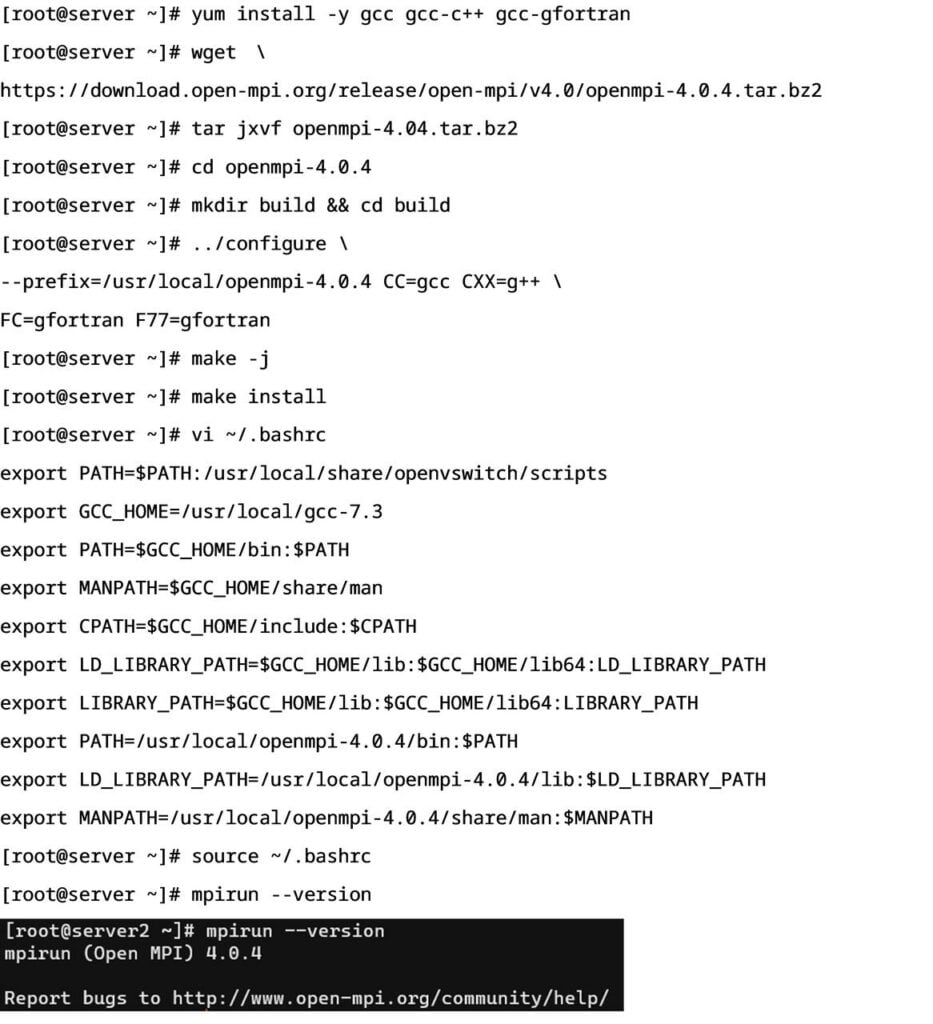

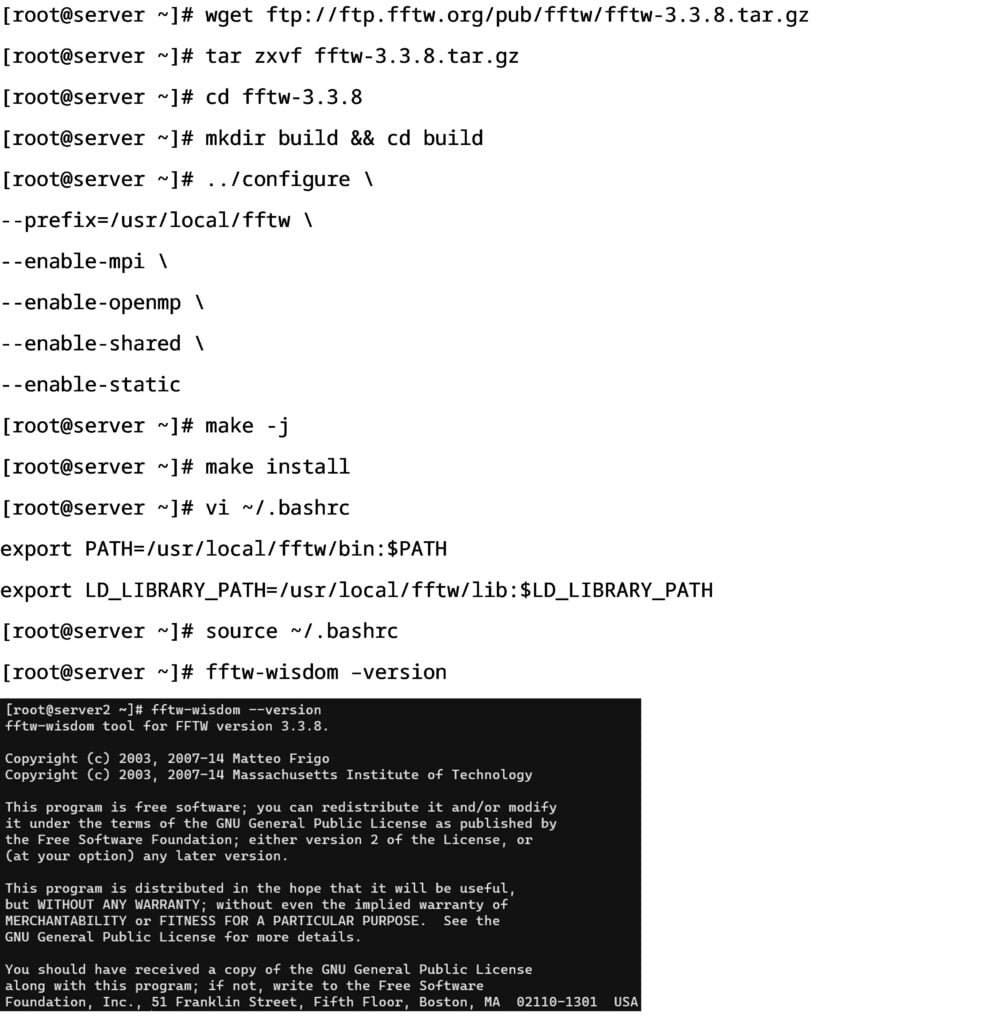

- Install OpenMPI

- Install FFTW

- Install LAMMPS

Please contact bd@cloudswit.ch for the full whitepaper

Lower-Cost Alternative to InfiniBand Switch, Asterfusion Low Latency Switch

As cloud computing technology matures, HPC is expanding from large-scale scientific computing into a wide range of commercial computing scenarios.

Asterfusion CX-N low latency cloud switches can satisfy the majority of HPC application scenarios while offering a significant advantage in cost performance.

Related Products

- 64-Port 200G QSFP56 Low Latency Data Center Switch, Enterprise SONiC Ready, Marvell Teralynx: CX664D-N
- 32-Port 400G QSFP-DD Low Latency AI/ML/Data Center Switch, Enterprise SONiC Ready, CX732Q-N
- 48-Port 25G Data Center Leaf (TOR) Switch with 8x100G Uplinks, SONiC Enterprise Ready, Marvell Falcon: CX308P-48Y-N
- 32-Port QSFP28 Low Latency Data Center Switch, Enterprise SONiC Ready, Marvell Teralynx: CX532P-N