Asterfusion Low Latency Cloud Switch: A Cost-Effective Alternative to InfiniBand

Written by Asterfusion

With the growing use of technologies such as the Internet of Things (IoT), artificial intelligence (AI), and machine learning (ML), it is reasonable to expect that within the next two or three decades we will enter a smart society built on the Internet of Everything (IoE). The computing power of the data center has become the new productivity. To meet customer needs in a digital world, a data center must be able to process large amounts of data far faster than standard computers can. As a result, enterprises increasingly favor high-performance computing (HPC) solutions.

What is HPC (High-Performance Computing)

High-performance computing is the ability to process data and perform complex calculations at extremely high speeds. For example, a laptop or desktop computer with a 3 GHz processor can perform about 3 billion calculations per second. That is much faster than any human can achieve, but it pales in comparison to HPC solutions that can perform trillions of calculations per second. An HPC architecture consists mainly of computing, storage, and network. HPC raises computing speed through parallelism: tens, hundreds, or even thousands of computers work on a task together. These computers must communicate with one another and process tasks cooperatively, which requires a high-speed network with strict requirements on latency and bandwidth.
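To see why latency and bandwidth matter so much for parallel work, a rough cost model can help. The sketch below is a toy model under stated assumptions, not a description of any real HPC scheduler: it assumes the compute work divides evenly across workers and that each step ends with a fixed number of message exchanges. The function and parameter names (`step_time`, `speedup`, `messages`) are hypothetical and chosen only for illustration.

```python
def step_time(compute_s, workers, msg_bytes, latency_s, bandwidth_bps, messages=1):
    """Estimate the time of one parallel step (toy model).

    compute_s     -- time the work would take on a single machine, in seconds
    workers       -- number of machines sharing the work (assumed evenly split)
    msg_bytes     -- size of each message exchanged at the end of the step
    latency_s     -- one-way network latency per message, in seconds
    bandwidth_bps -- link bandwidth in bits per second
    messages      -- number of sequential message exchanges per step
    """
    compute = compute_s / workers
    communication = messages * (latency_s + msg_bytes * 8 / bandwidth_bps)
    return compute + communication


def speedup(compute_s, workers, **network):
    """Speedup over a single machine under the same toy model."""
    return compute_s / step_time(compute_s, workers, **network)
```

With zero communication cost the speedup equals the number of workers. As latency grows or bandwidth shrinks, the communication term starts to dominate and adding more workers helps less, which is the reason the network matters in this kind of cluster.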

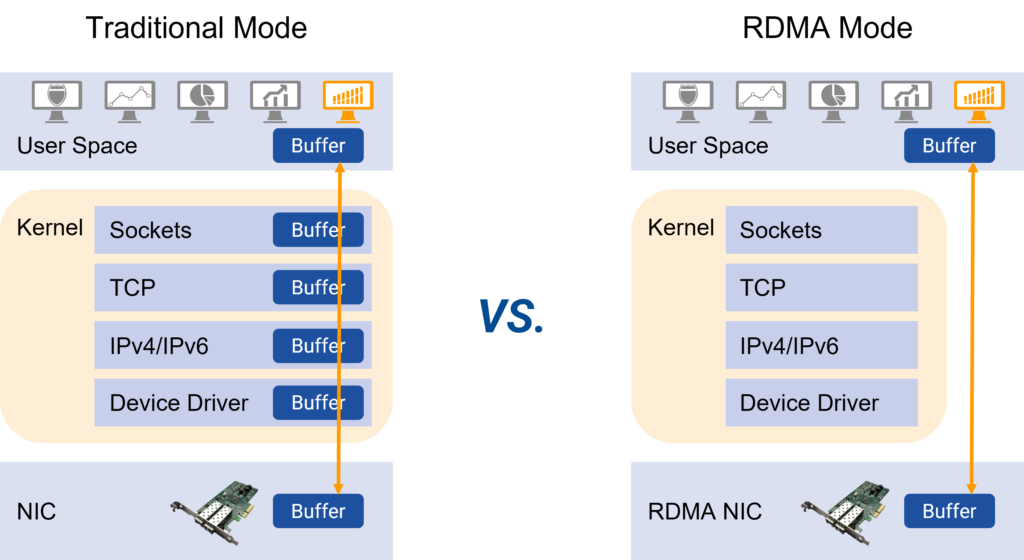

High bandwidth and low latency have become the most important factors in HPC scenarios. As a result, RDMA is generally used in place of TCP to reduce latency and server CPU usage.

What is RDMA (Remote Direct Memory Access)

RDMA (Remote Direct Memory Access) was developed to remove the delay that server-side data processing adds to network transmission. It lets one host or server access the memory of another directly, without involving the CPU. This frees the CPU to do what it is supposed to do, such as running applications and processing large amounts of data. The result is higher bandwidth and lower latency, jitter, and CPU consumption.

For more about RDMA and RoCE: https://switchicea.com/blogs/roce-rdma-over-converged-ethernet-for-high-efficiency-network-performance/

- InfiniBand is a network protocol designed specifically for RDMA. It guarantees a lossless network at the hardware level and delivers extremely high throughput and low latency. However, InfiniBand switches are dedicated products from specific manufacturers and use proprietary protocols, while most existing networks are IP Ethernet networks, so InfiniBand cannot meet interoperability requirements. The closed architecture also brings vendor lock-in, which is especially risky for business systems that will need large-scale expansion in the future.

- iWARP allows RDMA over TCP. It requires a special network card that supports iWARP, so that RDMA can run over standard Ethernet switches. However, because of the limitations of TCP, it loses the performance advantage of RDMA.

Compared with the two above, RoCE (RDMA over Converged Ethernet) lets applications perform remote memory access over Ethernet. It supports RDMA on standard Ethernet switches, requires only a network card that supports RoCE, and places no special requirements on the network hardware.

However, RDMA is very sensitive to packet loss, so the question becomes: how do you build a lossless Ethernet that can carry RDMA applications?

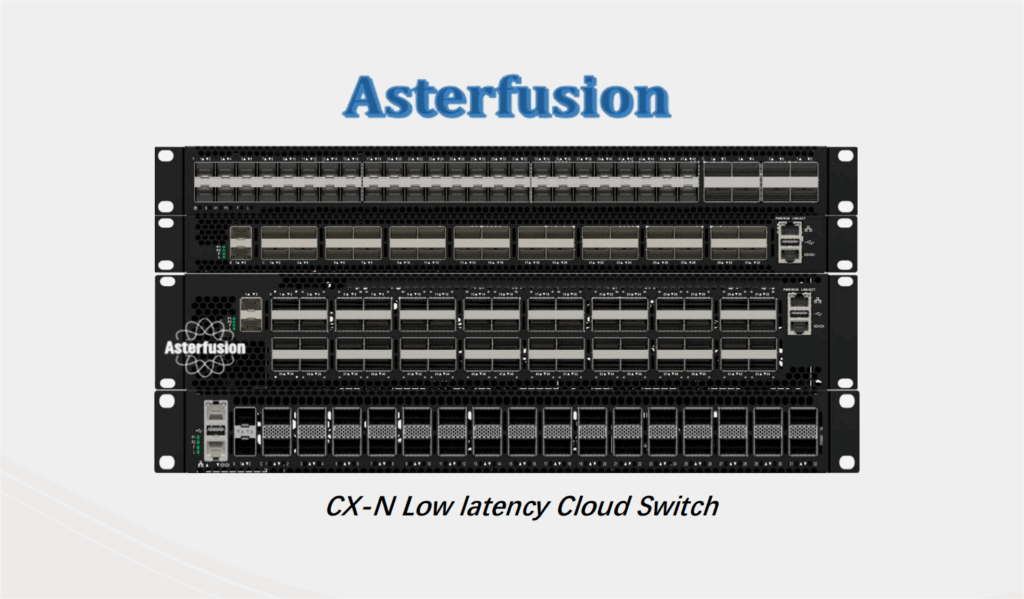

Asterfusion CX-N low latency switches in HPC Scenario

Based on its understanding of high-performance computing network requirements and RDMA technology, Asterfusion launched the CX-N series of ultra-low latency cloud switches. The series combines fully open, high-performance network hardware with a transparent, open network system (AsterNOS) to build a low-latency, zero-packet-loss, high-performance Ethernet network for HPC scenarios that is not bound to any vendor.

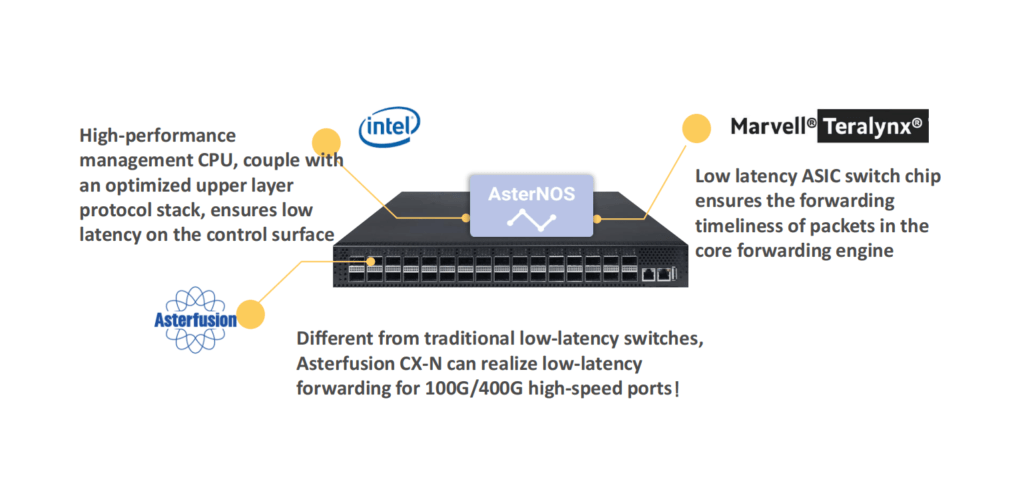

01. Ultra-low-latency switching ASIC, reducing network forwarding delay

- Extremely cost-effective: CX-N switches have a minimum port-to-port forwarding delay of 400ns, and the forwarding delay is the same at every port speed (10G~400G);

- Supports RoCEv2 to reduce the delay of the transmission protocol;

- Supports DCB, ECN, DCTCP, etc. to deliver low-latency, zero-packet-loss, non-blocking Ethernet;

- The AFC SDN Controller provides unified management. It can be integrated seamlessly into an OpenStack-based cloud OS or deployed standalone, turning clusters of switches into a single virtual fabric.

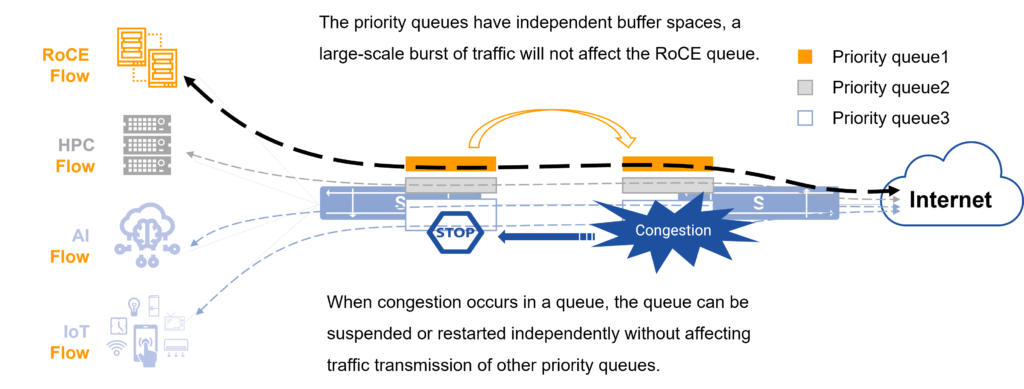

02. Provide lossless networking using PFC high-priority queues

As an enhancement of the Ethernet pause mechanism, PFC allows eight virtual channels to be created on an Ethernet link. Each virtual channel is assigned a priority level and allocated dedicated resources (such as buffers and queues), and any one channel can be paused and restarted individually without affecting traffic on the others. This approach lets the network provide a lossless service for a single virtual link while coexisting with other traffic types on the same interface.
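The per-priority pause behaviour described above can be sketched with a small software model. This is only an illustration, not how a switch ASIC or AsterNOS implements PFC: the class name `PfcLink`, its methods, and the strict-priority scheduling order are all assumptions made for the example.

```python
from collections import deque

NUM_PRIORITIES = 8  # PFC works on eight priorities per Ethernet link


class PfcLink:
    """Toy model of one link with eight independently pausable queues."""

    def __init__(self):
        self.queues = [deque() for _ in range(NUM_PRIORITIES)]
        self.paused = [False] * NUM_PRIORITIES

    def enqueue(self, priority, frame):
        # Frames are buffered even while the priority is paused (no drop).
        self.queues[priority].append(frame)

    def pause(self, priority):
        # Models receiving a pause request for a single priority.
        self.paused[priority] = True

    def resume(self, priority):
        self.paused[priority] = False

    def transmit(self):
        """Send one frame from the highest non-paused, non-empty priority.

        Strict-priority order is an assumption of this sketch.
        Returns (priority, frame), or None if nothing can be sent.
        """
        for priority in range(NUM_PRIORITIES - 1, -1, -1):
            if not self.paused[priority] and self.queues[priority]:
                return priority, self.queues[priority].popleft()
        return None
```

In this model, pausing priority 3 holds its frames in the buffer while priority 0 traffic keeps flowing; once priority 3 is resumed, its frames are sent without any having been dropped.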

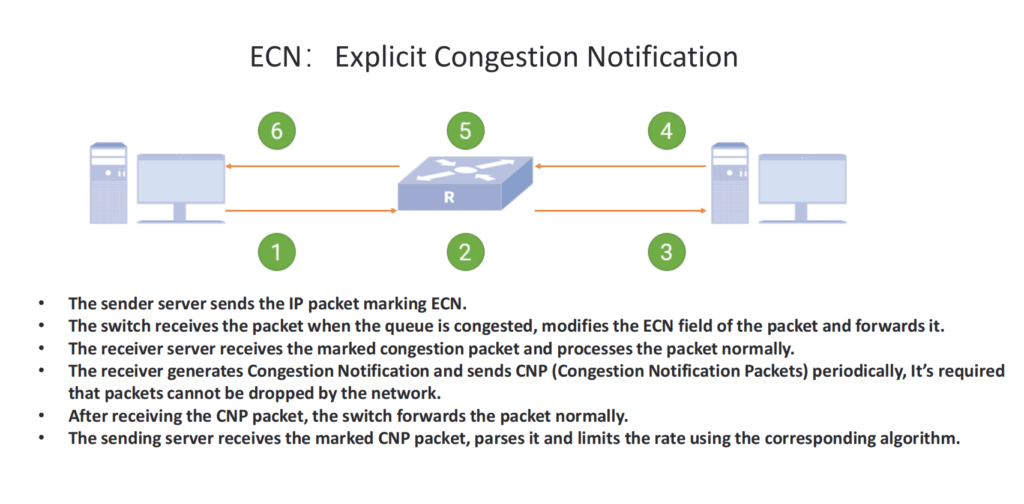

03. Use ECN to eliminate network congestion

ECN (Explicit Congestion Notification) is an important means of building a lossless Ethernet and provides end-to-end flow control. With ECN enabled, once a network device detects congestion it marks the ECN field in the IP header of the packet. When ECN-marked packets arrive at their destination, a congestion notification is fed back to the sender. The sender then responds by limiting the rate of the flow that caused the congestion, which reduces network latency and jitter in high-performance computing clusters.
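As a minimal sketch of the marking step, the code below works on the ECN field, which occupies the two least significant bits of the IPv4 TOS byte (or IPv6 Traffic Class); the codepoint values follow RFC 3168. The function names and the rate-cut factor in `next_rate` are hypothetical: real senders use more elaborate congestion-control algorithms, and the source does not say which one the CX-N deployment relies on.

```python
# ECN codepoints (RFC 3168): the two low-order bits of the TOS / Traffic Class byte
NOT_ECT = 0b00  # sender is not ECN-capable
ECT_1 = 0b01    # ECN-capable transport, codepoint 1
ECT_0 = 0b10    # ECN-capable transport, codepoint 0
CE = 0b11       # congestion experienced
ECN_MASK = 0b11


def mark_congestion(tos):
    """Return the TOS byte a congested device would forward.

    ECN-capable packets get the CE codepoint; packets that are not
    ECN-capable are returned unchanged (a real device would have to
    fall back to dropping them). The DSCP bits are left untouched.
    """
    if (tos & ECN_MASK) == NOT_ECT:
        return tos
    return tos | CE


def is_congestion_marked(tos):
    """True if the packet carries the CE codepoint."""
    return (tos & ECN_MASK) == CE


def next_rate(rate_gbps, marked, cut=0.5):
    """Hypothetical sender reaction: scale the rate down when notified."""
    return rate_gbps * cut if marked else rate_gbps
```

For example, a packet sent with ECT(0) leaves a congested hop with its ECN bits set to CE, the receiver detects this with `is_congestion_marked`, and the sender lowers its rate once the notification is fed back.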

04. Cooperate with AFC SDN controller to ensure network is foolproof

Asterfusion follows the SDN design concept and fully embraces the strategy of a fully open network and a high-performance cloud data center. It launched the AFC SDN cloud network controller, which makes network management visual. AFC displays data such as device status, link status, and alarm information in the form of graphs classified by time, resource, and performance type. In addition, it supports statistics across multiple kinds of data to give customers a comprehensive and intuitive understanding of overall network conditions.

Asterfusion CX-N Ultra Low Latency Switch vs. InfiniBand Switch

01 Lab Test

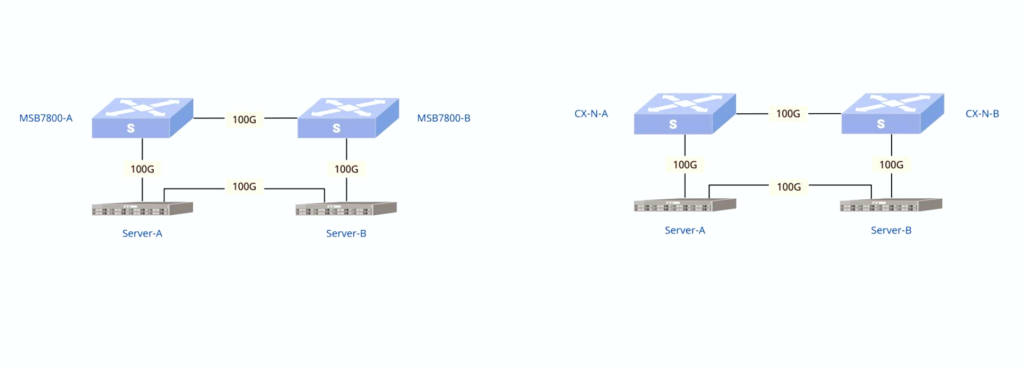

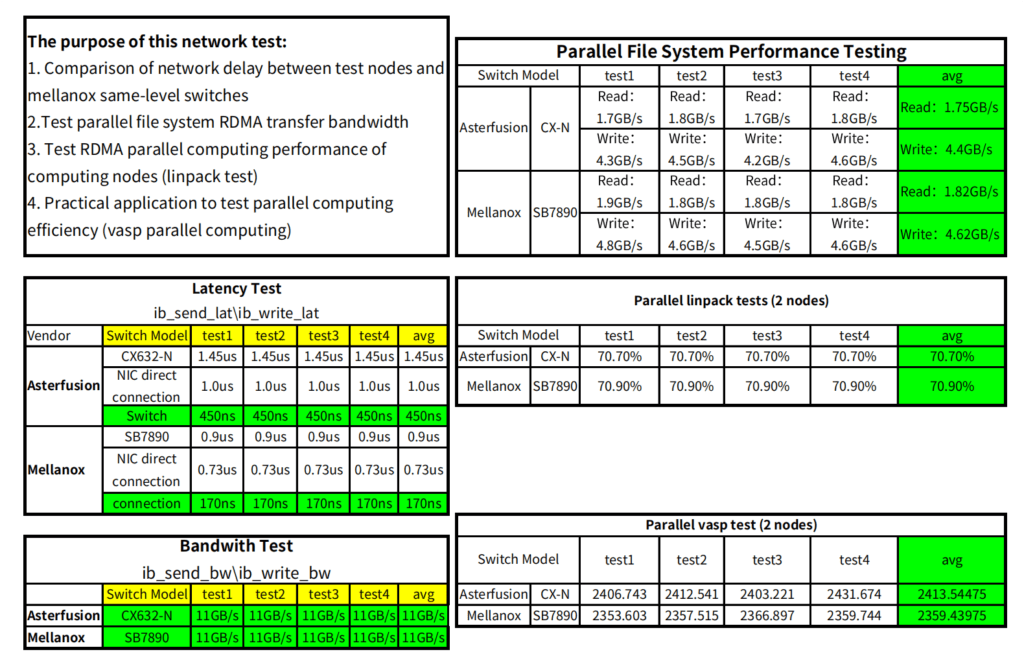

This test was conducted on networks built with the CX-N ultra-low latency cloud switch (CX-N for short) and the Mellanox MSB7800 switch (IB for short).

For test details, please see the previous post: https://switchicea.com/blogs/asterfusion-low-latency-switch-vesus-infiniband-switchwho-win/

Test 1: E2E forwarding

Test the E2E (end-to-end) forwarding latency and bandwidth of the two switches under the same topology.

This scheme uses the Mellanox IB packet-sending tool to send packets, and the test sweeps message sizes from 2 to 8388608 bytes.
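The upper bound of the sweep, 8388608 bytes, is exactly 2^23, so the range runs from 2^1 to 2^23. The sketch below lists the message sizes under the assumption that the tool doubles the size at each step; the source gives only the two endpoints, so the step pattern and the function name `message_sizes` are assumptions.

```python
def message_sizes(first=2, last=8388608):
    """List message sizes from first to last, doubling each step.

    The doubling step is an assumption; only the endpoints come
    from the test description.
    """
    sizes = []
    size = first
    while size <= last:
        sizes.append(size)
        size *= 2
    return sizes
```

Under that assumption the sweep covers 23 message sizes, from 2 bytes up to 8 MiB.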

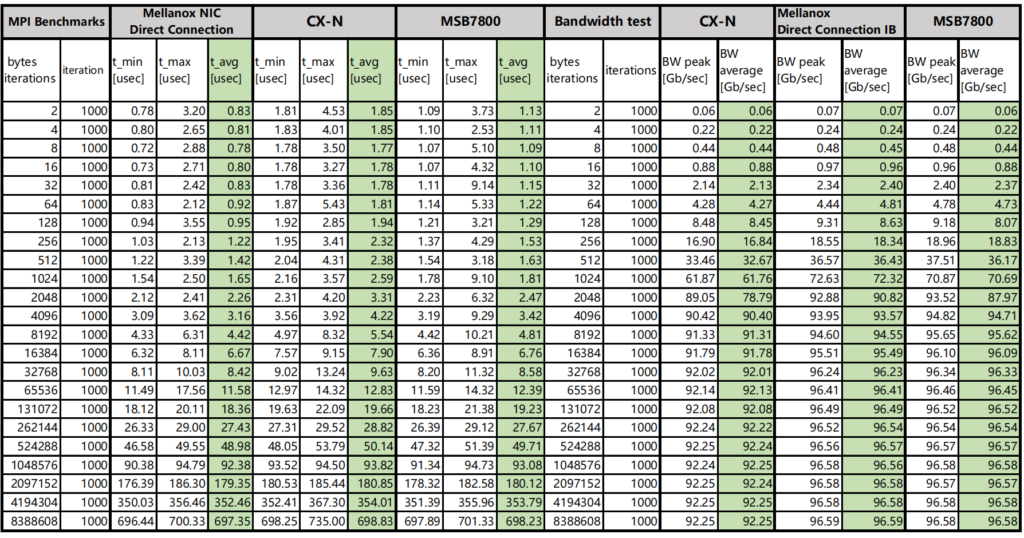

The CX-N switch delivers a bandwidth of 92.25Gb/s and a single-switch delay of 480ns.

The IB switch delivers a bandwidth of 96.58Gb/s and a single-switch delay of 150ns.

Comparing the Asterfusion CX-N low latency switch with the Mellanox IB switch, the CX-N is the more cost-effective option.

In addition, the CX-N's delay fluctuates less across all message sizes, with the test data stable at about 0.1us.

Test 2: MPI benchmark

MPI benchmarks are commonly used to evaluate the performance of high-performance computing systems.

OSU Micro-Benchmarks are used to evaluate the performance of the Asterfusion CX-N and IB switches.

The CX-N switch delivers a bandwidth of 92.63Gb/s and a single-switch delay of 480ns.

The IB switch delivers a bandwidth of 96.84Gb/s and a single-switch delay of 150ns.

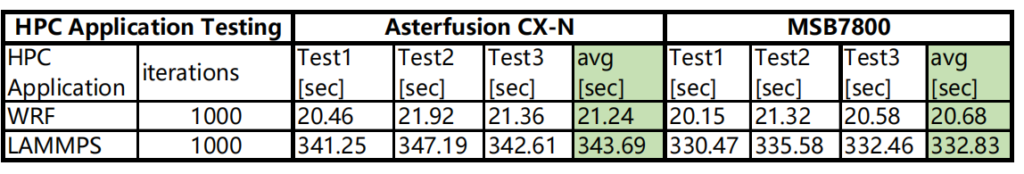
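To put the figures reported for Test 1 and Test 2 side by side, the short calculation below expresses the CX-N bandwidth as a percentage of the IB bandwidth and computes the difference in single-switch delay. The input numbers are taken from the results above; the dictionary layout and the `compare` helper are hypothetical and exist only to make the arithmetic explicit.

```python
# (bandwidth in Gb/s, single-switch delay in ns), as reported in the tests
results = {
    "E2E forwarding": {"cx_n": (92.25, 480), "ib": (96.58, 150)},
    "MPI benchmark": {"cx_n": (92.63, 480), "ib": (96.84, 150)},
}


def compare(cx_n, ib):
    """Return CX-N bandwidth relative to IB and the delay gap in ns."""
    cx_bw, cx_delay = cx_n
    ib_bw, ib_delay = ib
    return {
        "bandwidth_pct_of_ib": 100 * cx_bw / ib_bw,
        "delay_gap_ns": cx_delay - ib_delay,
    }


for name, pair in results.items():
    summary = compare(pair["cx_n"], pair["ib"])
    print(f"{name}: {summary['bandwidth_pct_of_ib']:.1f}% of IB bandwidth, "
          f"{summary['delay_gap_ns']} ns more delay per switch")
```

In both tests the CX-N reaches a little over 95% of the IB bandwidth, with 330ns more delay per switch.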

Test 3: HPC Applications

Run the same tasks in each HPC application and compare the speed of the CX-N and IB switches.

Conclusion: The operating speed of CX-N switches is only about 3% lower than that of IB switches.

02 Comparison of customer field test data

The customer field test results are basically consistent with the results obtained in the lab, and are even better.

We can draw a conclusion:

The Asterfusion low latency data center switch is a lower-cost alternative to InfiniBand.

Conclusion:

The ultra-low-latency lossless Ethernet built with Asterfusion CX-N ultra-low-latency cloud switches achieves, over traditional Ethernet, performance comparable to that of expensive InfiniBand switches.

Enterprise users who have a limited budget but demanding latency requirements in HPC scenarios can choose Asterfusion's Teralynx-based CX-N low latency switches. They offer a truly low-latency, zero-packet-loss, high-performance, and cost-effective network for high-performance computing clusters.

For more information about Asterfusion CX-N low latency switch, please contact bd@cloudswit.ch.